SPECvirt® Datacenter 2021 User Guide

1.0 Introduction

As virtualized environments become increasingly complex, it becomes more difficult to measure the performance of the overall ecosystem. Few tools help in understanding the demands of provisioning new resources, balancing workloads across hosts, and measuring the overall capacity of each host in a cluster. The SPECvirt® Datacenter 2021 benchmark measures all of these aspects with particular attention to the hypervisor management layer.

This guide describes how to install, configure, and run the SPECvirt Datacenter 2021 benchmark.

1.1 Workloads

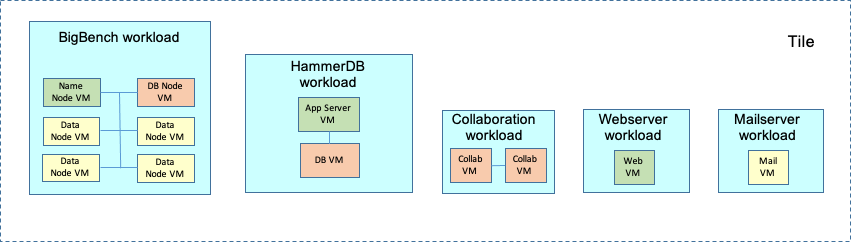

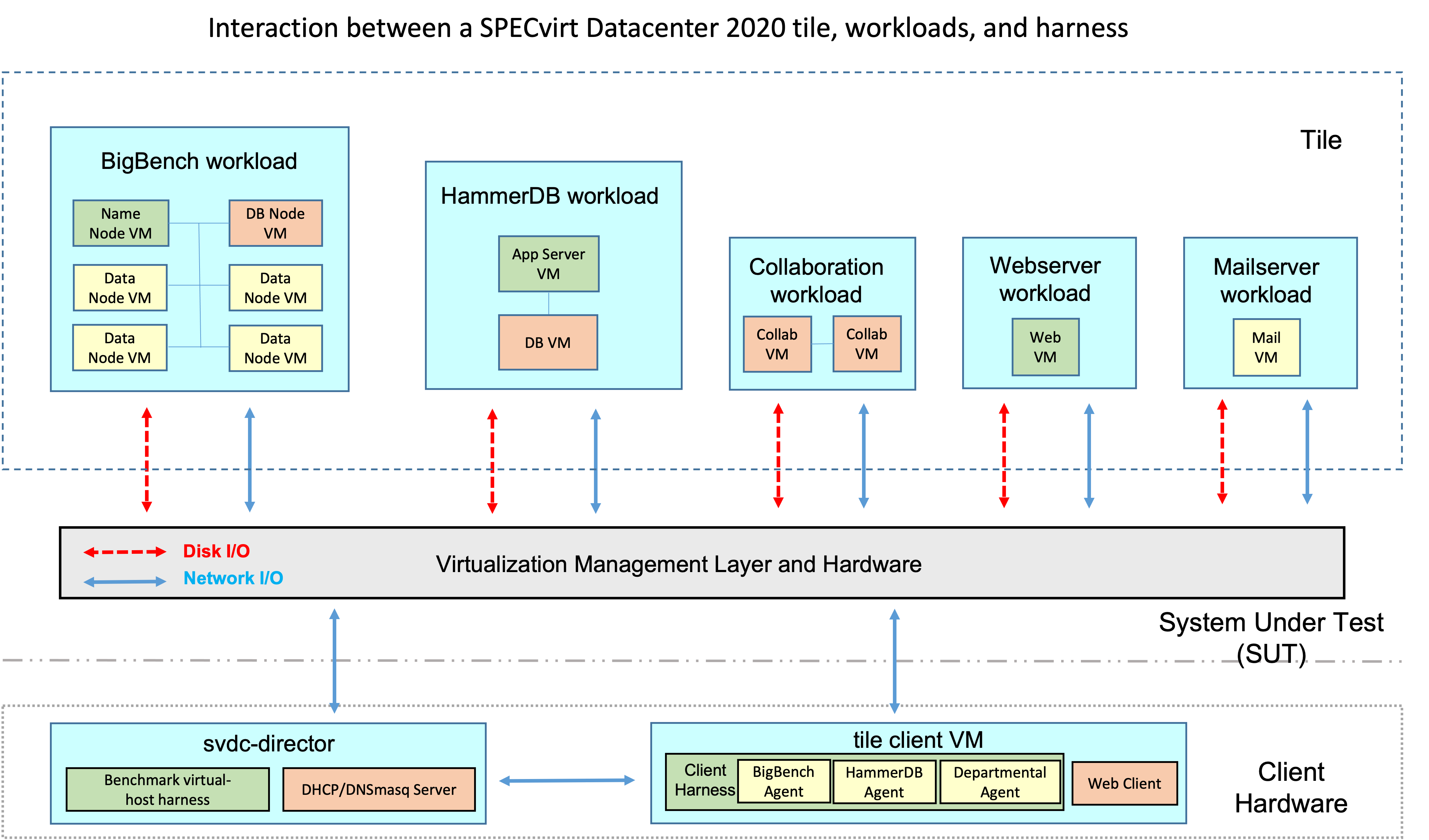

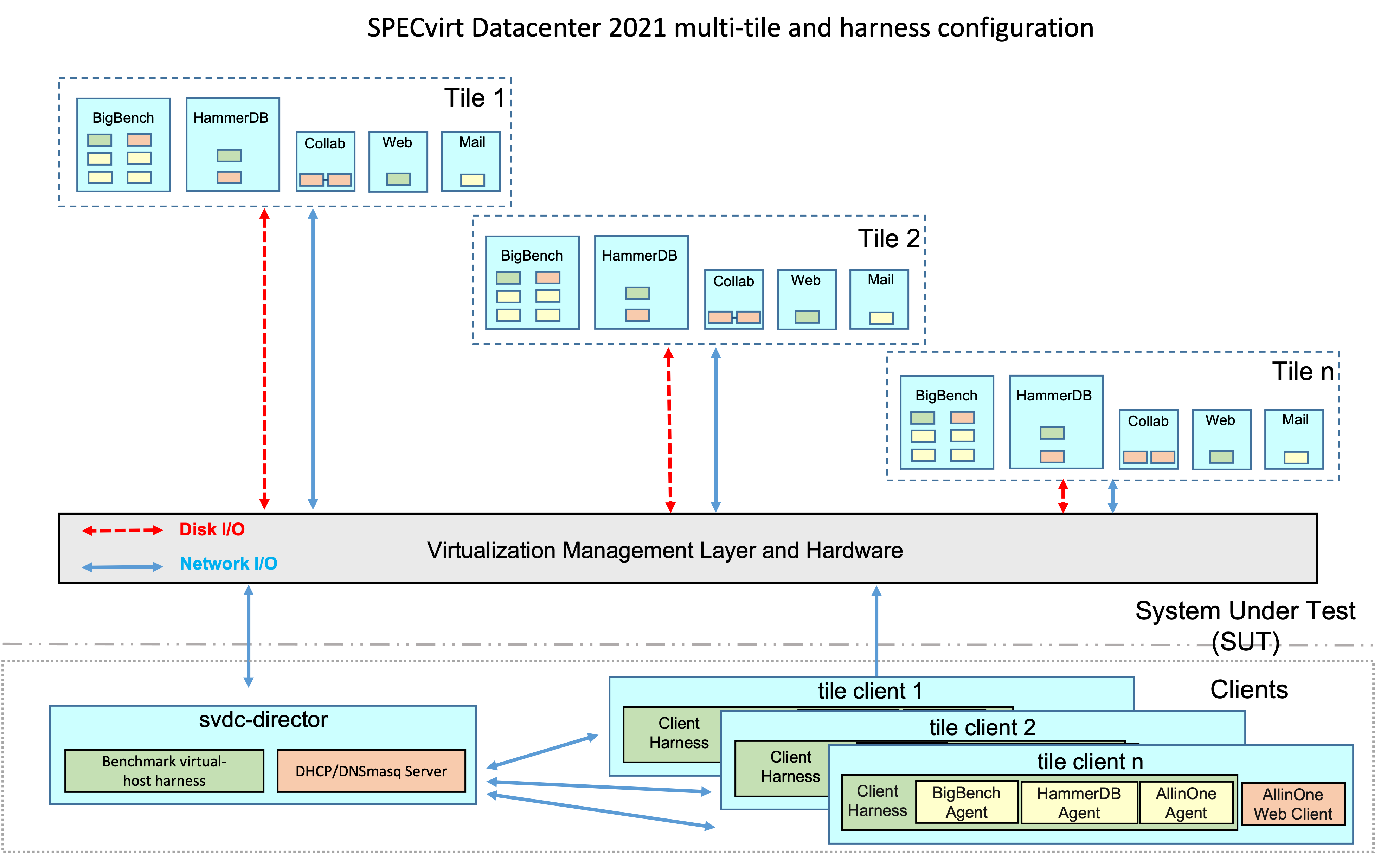

The benchmark consists of five workloads:

Three departmental workloads are derived from measurements of the resource utilization of actual VMs running a pre-defined workload:

Mail

Web

Collaboration

HammerDB’s OLTP workload simulates multiple virtual users querying against and writing to a relational database (for example, an online auction).

A modified version of the BigBench big data benchmark uses an Apache Hadoop environment to execute complex database queries.

These five workloads comprise a unit of work called a tile.

To emulate typical datacenter network use, all VMs communicate with the clients and the controller in the testbed over a network. Any communication outside the SUT must use a separate physical network.

Adding tiles allows for scaling out and reaching system saturation.

See more information about the workloads in the SPECvirt Datacenter 2021 Design Overview.

1.2 Run and Reporting Rules

In addition to the guidelines presented in this guide, you must follow specific run and reporting rules for a measurement to be considered compliant and for the results of that measurement to be publishable. You can find these rules in the SPECvirt Datacenter 2021 Run and Reporting Rules. Note that SPEC occasionally updates the run and reporting rules, so confirm that you have the latest available version before proceeding to submit a measurement for publication.

1.3 OVA distribution

The benchmark is distributed as an OVF (Open Virtualization Format) template packaged as an OVA (Open Virtual Appliance) file. The OVA is a single archive file that contains a VM’s components with pre-configured and pre-installed applications. It includes the benchmark components, applications, and configuration settings required to run a measurement and generate a compliant SPECvirt submission. Once you deploy the OVA, you can quickly begin populating the testbed with the benchmark VMs, namely the benchmark controller (svdc-director), the workload driver clients, and the workload VMs.

2.0 Requirements

This guide assumes that you are familiar with virtualization concepts and implementations for Red Hat® Virtualization (RHV) and/or VMware vSphere®. Successfully running the benchmark requires experience with:

Installing, configuring, managing, and tuning your selected hypervisor platform

Using your virtualization platform to create, administer, and modify virtual machines

Understanding and running shell scripts

Editing files with a text editor such as vi, vim, or nano

Allocating system resources to clusters and VMs including:

Setting up and adding physical and virtual networks

Setting up shared storage pools / datastores using the hypervisor’s management server

The benchmark provides toolsets for the following tested hypervisors:

VMware vSphere® 6.7 and 7.0

Red Hat Virtualization® (RHV) 4.3

Future releases of the benchmark may include support for updated and additional hypervisors and associated SDKs. See Appendix A of the SPECvirt Datacenter 2021 Run and Reporting Rules for details on adding a toolset for a particular hypervisor platform and its corresponding SDKs.

2.1 SUT hardware requirements

The SUT must contain a multiple of four physically identical hosts. That is, valid configurations are SUTs with four hosts, eight hosts, 12 hosts, and so on. The SUT’s management server must manage a homogeneous cluster of hosts and its resources. See Section 4 in the SPECvirt Datacenter 2021 Run and Reporting Rules for details regarding SUT requirements.

WARNING: A heterogeneous cluster results in a non-compliant configuration. That is, you must configure all hardware and software identically.
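You can check the host-count rule before any deployment. The following is a minimal sketch that uses a hypothetical `check_sut_hosts` helper, which is not part of the benchmark harness. The offline-host count it prints assumes that one host in every group of four goes into maintenance mode. That assumption is inferred from the "Placing every 4th host in cluster into maintenance mode" message in the prepSUT.log excerpt in Section 3.4.3, not from a stated rule.

```shell
# Hypothetical pre-flight check (not part of the benchmark harness).
# Validates that the SUT host count is a positive multiple of four and prints
# how many hosts would be offline, ASSUMING one offline host per four hosts.
check_sut_hosts() {
    n=$1
    case $n in
        ''|*[!0-9]*|0*)
            # Empty, non-numeric, zero, or leading-zero values are rejected.
            echo "numHosts must be a positive integer: '$n'" >&2
            return 1 ;;
    esac
    if [ $((n % 4)) -ne 0 ]; then
        echo "numHosts=$n is not a multiple of four" >&2
        return 1
    fi
    echo $((n / 4))
}

# Example:
#   check_sut_hosts 8    # prints 2
#   check_sut_hosts 6    # fails: not a multiple of four
```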

2.1.1 CPU, memory, and storage requirements

A SPECvirt Datacenter 2021 tile contains twelve workload VMs. Your system needs sufficient resources to support the workload VM requirements as defined in the following table:

| VM type | VM count | vCPUs per VM † | Memory (GB) | Disk (GB) ‡ |
|---|---|---|---|---|
| Departmental | | | | |
| Mailserver | 1 | 4 | 8 | 12 + 10 for data |
| Webserver | 1 | 4 | 8 | 12 + 20 for data |
| Collaboration server | 2 | 4 | 8 | 12 + 10 for data |
| HammerDB database server | 1 | 8 | 24 | 12 + 50 for data |
| HammerDB appserver | 1 | 2 | 8 | 12 |
| BigBench data nodes | 4 | 8 | 24 | 12 + 60 for data |
| BigBench name node | 1 | 8 | 8 | 12 |
| BigBench database node | 1 | 8 | 4 | 12 |
| Totals per tile | 12 | 74 | 172 | 484 |

| Requirements per workload | Memory (GB) | Disk (GB) |
|---|---|---|
| Mail | 8 | 22 |
| Web | 8 | 32 |
| Collaboration | 16 | 44 |
| HammerDB | 32 | 74 |
| BigBench | 108 | 312 |

‡ The disk totals are pre-allocated disk sizes for the VMs and do not take into account any potential additional storage required for hypervisor functions such as swap space.

† vCPU allocations in the table above are the default values, though you can configure the number of vCPUs per VM as described in Section 3.4.

WARNING: The memory and storage allocations for the workload VMs are fixed.

WARNING: All SUT storage pools must be shared and available to all hosts in the SUT. See Section 4.4.4.1 of the SPECvirt Datacenter 2021 Run and Reporting Rules for storage configuration requirements.
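Memory and disk requirements scale linearly with the number of tiles. The following minimal sketch uses a hypothetical `sut_totals` helper. It recomputes the per-tile memory and disk totals from the per-VM rows of the table above and multiplies them by a tile count. vCPUs are left out because they are configurable (Section 3.4.2). As the table note says, the disk figure excludes hypervisor overhead such as swap space.

```shell
# Workload VM rows from the table above:
# VM count, memory (GB), OS disk (GB), data disk (GB)
tile_rows() {
    cat <<'EOF'
1 8 12 10
1 8 12 20
2 8 12 10
1 24 12 50
1 8 12 0
4 24 12 60
1 8 12 0
1 4 12 0
EOF
}

# Hypothetical helper: VM count, memory, and pre-allocated disk for N tiles.
# Hypervisor overhead (for example swap space) is NOT included.
sut_totals() {
    tiles=${1:-1}
    tile_rows | awk -v t="$tiles" '
        { vms += $1; mem += $1 * $2; disk += $1 * ($3 + $4) }
        END { printf "VMs=%d memGB=%d diskGB=%d\n", t * vms, t * mem, t * disk }'
}
```

For example, `sut_totals 4` reports 48 VMs, 688 GB of memory, and 1936 GB of pre-allocated disk.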

2.1.2 Network requirements

Each tile uses approximately 1.2 Gb/s of network bandwidth between the client and the SUT. Note, however, that additional intra-host and inter-host network traffic between VMs of a given workload (the collaboration server VMs, the BigBench VMs, and the HammerDB VMs) increases SUT network utilization.

The cluster requires a 10 GbE or faster network for the SUT and client traffic. We strongly recommend dedicating this network to the SUT and client hosts. The svdc-director VM’s dnsmasq service, which provides DHCP and DNS, responds only to requests from the pre-assigned MAC addresses on the workload VMs’ vNIC (eth0). This ensures that the svdc-director VM does not conflict with public DHCP and DNS servers.

You may create additional networks for storage or other hypervisor-specific needs (for example, adding a dedicated network for networked storage or for VM migrations).
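For a first-order check of the client-to-SUT link, multiply the approximately 1.2 Gb/s per-tile figure by the tile count. The hypothetical `client_sut_gbps` helper below does only that. It deliberately ignores the intra-host and inter-host VM traffic described above, so treat its result as a lower bound.

```shell
# Hypothetical estimate: client<->SUT traffic only, at ~1.2 Gb/s per tile.
# VM-to-VM traffic inside the SUT is NOT included, so this is a lower bound.
client_sut_gbps() {
    awk -v tiles="$1" 'BEGIN { printf "%.1f\n", tiles * 1.2 }'
}
```

For example, `client_sut_gbps 8` prints 9.6. This shows that eight tiles already use most of a 10 GbE link before any VM-to-VM traffic is counted.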

2.2 Client hardware requirements

Each tile requires its own client. The client is not part of the tile, cannot run on a SUT host, and requires at least the following resources:

Four vCPUs

12 GB memory

12 GB disk

These are the minimum required values for the client VMs. You can increase their resource allocations by adding vCPUs, memory, or data disk space.

Note: Each client can have one virtual NIC only.

2.3 Management server requirements

The hypervisor’s management server (for example, VMware vCenter and Red Hat Virtualization’s RHV-M) can be run in a VM or on a physical server. The svdc-director VM must be able to access the management server. See the hypervisor documentation for details on its resource requirements.

2.4 svdc-director VM requirements

The benchmark uses a VM named svdc-director as its benchmark controller. The svdc-director VM contains the harness and configuration files, executes and controls the benchmark, and requires at least the following resources:

Eight vCPUs

24 GB memory

12 GB disk for OS + 75 GB disk for data
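Sections 2.2 and 2.4 together give the minimum client-side footprint. The sketch below uses a hypothetical helper name. It adds one client per tile to the svdc-director VM. It does not include the management server, whose requirements come from the hypervisor documentation.

```shell
# Hypothetical sizing helper: minimum client-side resources for N tiles.
# One client per tile: 4 vCPUs, 12 GB memory, 12 GB disk (Section 2.2).
# svdc-director: 8 vCPUs, 24 GB memory, 12 GB OS + 75 GB data disk (Section 2.4).
# The management server is not included.
client_side_minimums() {
    tiles=$1
    vcpus=$((tiles * 4 + 8))
    mem_gb=$((tiles * 12 + 24))
    disk_gb=$((tiles * 12 + 12 + 75))
    echo "vCPUs=$vcpus memGB=$mem_gb diskGB=$disk_gb"
}
```

For example, `client_side_minimums 4` prints `vCPUs=24 memGB=72 diskGB=135`.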

To access the cluster’s management server and ease installation and ongoing testbed management, you may add a vNIC on the public network to the svdc-director VM only.

WARNING: You may not add a vNIC to any other VMs, the template, or the clients. If you add a public vNIC to the svdc-director VM (for example eth1), ensure the vNIC is on a virtual switch connected to the public network only.

3.0 Prepare the infrastructure

3.1 Configure the datacenter infrastructure

After you install the hypervisor on the SUT hardware, use the hypervisor’s management server to set up the datacenter and SUT, configure and assign shared storage to it, and configure the network(s).

3.1.1 Enable hypervisor SSH access

The benchmark requires SSH and SCP access to the SUT hosts for collecting configuration information at the end of a compliant measurement. If your hypervisor restricts SSH and shell access, enable them.

3.1.2 Configure management server

Install the hypervisor management software either on a physical host or as a VM according to your hypervisor documentation. If the management server is a VM, it can reside on any host in the testbed.

3.2 Deploy the template to create the svdc-director VM

The following sections detail how to:

Download the template

Import the template

Clone it to the svdc-director VM and configure its infrastructure services

Configure the harness testbed and set parameters

Run the director VM configuration script (makeme_svdc-director.sh)

Set up the GNOME Desktop for LiveView (optional, recommended)

Generate the Management Server Certificate if needed

Synchronize timekeeping across the testbed

3.2.1 Download the template from SPEC

All VMs are cloned from the svdc-template OVA distributed as the benchmark kit. The harness provides scripts that clone the template to create the svdc-director VM, the clients, and all workload VMs, and then configure those VMs for their roles.

Download the latest OVA template as provided with your license from the SPEC website to a local server. This OVA contains a VM derived from the source code for the CentOS distribution 7.6 release. It also contains the prerequisite application software, the hypervisor-specific SDKs, and the pre-installed and configured benchmark harness.

The CentOS Project does not endorse the benchmark.
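An OVA is a TAR archive that holds the OVF descriptor (.ovf), the virtual disk images, and usually a manifest (.mf). Listing the archive can therefore catch a truncated or corrupted download before you import it. The following minimal sketch assumes a `tar` that supports `-t`. The `check_ova` name is hypothetical, and the OVA file name in the example comment is a placeholder.

```shell
# Hypothetical download check: list the OVA (a TAR archive) and require an
# OVF descriptor inside it. Fails on unreadable or truncated archives.
check_ova() {
    ova=$1
    if ! listing=$(tar -tf "$ova" 2>/dev/null); then
        echo "$ova is not a readable TAR/OVA archive" >&2
        return 1
    fi
    printf '%s\n' "$listing"
    printf '%s\n' "$listing" | grep -q '\.ovf$' || {
        echo "no .ovf descriptor found in $ova" >&2
        return 1
    }
}

# Example (placeholder file name):
#   check_ova ./svdc-template.ova
```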

3.2.2 Deploy/Import the template

The svdc-template is assigned the following resources:

Four vCPUs

4 GB memory

12 GB disk

The OS virtual disk policy for the template is thin provisioned. Read your hypervisor documentation for how to change this if you desire. In some environments, you might not be able to change the OS virtual disk policy.

The following sections describe how to import the template to the SUT and client environments.

If the SUT and client clusters do not share storage pools, you need to deploy the svdc-template on both the SUT and client clusters.

vSphere

Copy the template’s OVA file to a local directory.

Log in to vCenter. Click Actions → Deploy OVF Template → Local file → Browse. Go to the folder with the .ova file and select it. Click Open, then Next.

Under Select a compute resource, select the datacenter, then choose the SUT or client cluster to deploy to. If your target cluster has DRS (Distributed Resource Scheduler) disabled, you need to specify a host name.

Review details, and select Next.

Under Select storage, the virtual disk format defaults to Thick Provision Lazy Zeroed. Read your hypervisor documentation for how and why to change this if you desire.

Select the target datastore, and select Next.

Select the cluster network, and select Next.

Confirm that the settings are correct and click Finish.

Set template MAC address:

Right click on svdc-template and select Edit Settings.

Click Network Adapter to select your private SUT network. Under MAC address, change Automatic to Manual. Set the first three octets to the value you use for $MACAddressPrefix in Control.config (default is 42:44:49:). The last three octets default to 00:00:00. Click OK.

Convert to template:

Create a template VM named svdc-template from the deployed svdc-template VM.

Right click on svdc-template.

Choose Template → Convert to Template.

RHV

Copy the template OVA file to a directory (for example /files/image-dir) on one of the SUT hosts.

Log in to the Red Hat Virtualization Manager webadmin interface:

https://{RHV Manager fqdn}/ovirt-engine/webadmin

Navigate to Compute -> Virtual Machines.

Click the Other Options icon (three vertical dots in the upper right corner of the page) and select Import.

In the Import Virtual Machine(s) window:

Select the Data Center containing the SUT host where the OVA was saved.

Select Virtual Appliance (OVA) as the Source.

Under Host, select the SUT host where the OVA was saved.

In the File Path field, provide the directory where the OVA file resides on the SUT host (for example /files/image-dir).

Click the Load button. The template OVA name shows up in the Virtual Machines on Source section.

Click the box next to the template name to select it and then click the right-pointing arrow icon. The template name is displayed in the Virtual Machines to Import section.

Click the Next button at the bottom of the Import Virtual Machine(s) window.

In the new Import Virtual Machine(s) window, select the following options:

Select the desired Storage Domain.

Select the desired Target Cluster.

Do not select the Attach VirtIO-Drivers check box, as that attempts to load additional RPMs on the template.

The Allocation Policy defaults to Thin Provision. Read your hypervisor documentation for how and why to change this if you desire.

Select the check box under Clone.

In the General tab, verify that the Name matches the desired name of the template (for example svdc-template).

Click Network Interfaces and select the desired Network Name and Profile Name.

Confirm the settings and click OK. The selected cluster uploads the template OVA as a VM.

Enable VirtIO-SCSI for virtual disks:

Once the new VM has been created, highlight the VM entry in the Compute -> Virtual Machines window and click the Edit button.

If not already visible, click the Show Advanced Options button at the bottom left of the window.

In the Resource Allocation section, select the check box next to VirtIO-SCSI Enabled, and click OK.

Select the Disks menu option and click the Edit button in the upper right corner of the window.

In the Interface field, select VirtIO-SCSI and click OK.

Set template MAC address:

Click the Network Interfaces menu item. You should see a single NIC entry in the table.

Click the Edit button in the upper right corner.

In the Edit Network Interface window, select the check box next to Custom MAC address. Set the first three octets to the value you use for $MACAddressPrefix in Control.config (default is 42:44:49:). The last three octets default to 00:00:00. If you plan to use a MAC address pool other than the default, change the first three octets to match the target MAC address pool.

Click OK.

Convert to template:

From the Compute -> Virtual Machines window, highlight the svdc-template VM.

Click the Other Options icon (three vertical dots in the upper right corner of the page) and select Make Template.

In the New Template field, provide a name for the template (for example svdc-template). The template name and the source VM name can be the same.

Click OK to create the template. You can confirm this by going to the Compute -> Templates window.

3.2.3 Download the CentOS distribution

Download the CentOS 7.6 Build 1810 ISO (CentOS-7-x86_64-DVD-1810.iso) from the CentOS site to local storage. Upload this ISO file to a datastore, content library, or ISO storage pool in the datacenter.

3.2.4 Create the svdc-director VM

The svdc-director VM provides an NTP server, DNS server, and DHCP server. It utilizes the MAC address to assign VM names, IP addresses, and NTP settings to the workload VMs during deployment. See the SPECvirt Datacenter 2021 Design Overview for details on the IP and VM naming assignment scheme as well as the infrastructure services that the svdc-director VM provides.

Next, deploy the svdc-director VM on the client cluster. Use the following steps to clone the template. These steps set the minimum required values for the svdc-director VM. You can increase its resource allocations by adding vCPUs, memory, or data disk space.

vSphere

Right click on svdc-template.

Choose either New VM from this template or Clone, then choose Clone to Virtual Machine.

Set the new VM name to svdc-director.

Select the datacenter, storage pool, and the SUT or client cluster to deploy to. If your target cluster has DRS (Distributed Resource Scheduler) disabled, you need to specify a host name.

Check the boxes for Customize this VM's hardware and Power on VM after creation, then click Next.

Change vCPUs to 8.

Change Memory to 24 GB.

Click Network Adapter to select your private SUT network. Under MAC address, change Automatic to Manual. Set the first three octets to the value you use for $MACAddressPrefix in Control.config (default is 42:44:49:). Set the last three octets to 00:00:02.

Click ADD NEW DEVICE and select CD/DVD Drive. Map this to the CentOS distribution ISO that you uploaded to a datastore, content library, or ISO library, and check the box to Connect it to the VM.

If the management server runs on a public network, add a second virtual NIC to access it for hypervisor and VM management operations. Click ADD NEW DEVICE and select Network Adapter. Select the management server network from the New network’s pull-down menu, and check the box to Connect it to the VM.

Confirm the settings, then click Finish.

Power on the svdc-director VM.

Use the management server to start a VM remote console window, and log in with username root and password linux99.

RHV

From the Compute -> Templates window, select svdc-template.

Click the New VM button.

In the New Virtual Machine window:

Under the General section, type svdc-director in the Name field.

Under the System section, change the Memory Size value to 24576 MB. Note: if you type 24 GB, the field converts the value to MB.

Under the System section, change the Total Virtual CPUs value to 8.

Click OK to create the svdc-director VM.

Once the new VM is created, from the Compute -> Virtual Machines window, click svdc-director and:

Select the Network Interfaces tab.

Highlight the nic1 interface and then click the Edit button.

Check the Custom MAC address box. Set the first three octets to the value you use for $MACAddressPrefix in Control.config (default is 42:44:49:). Set the last three octets to 00:00:02.

Click the OK button.

Power on the svdc-director VM.

Use the management server to start a VM remote console window, and log in with username root and password linux99.
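The MAC addresses for svdc-template (suffix 00:00:00) and svdc-director (suffix 00:00:02) follow one pattern: the three-octet $MACAddressPrefix from Control.config followed by a fixed three-octet suffix. The following minimal sketch uses a hypothetical `make_mac` helper to build and validate such an address. Its multicast check reflects a general Ethernet rule, not a benchmark rule: an address whose first octet is odd is a multicast address and cannot be assigned to a vNIC. The default prefix 42:44:49 passes because 0x42 is even. It also has the locally administered bit set.

```shell
# Hypothetical helper: join a MACAddressPrefix (with or without a trailing
# colon) and a three-octet suffix, then validate the result.
make_mac() {
    prefix=${1%:}
    mac="$prefix:$2"
    # Must be six colon-separated hexadecimal octets.
    if ! printf '%s\n' "$mac" | grep -Eq '^([0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}$'; then
        echo "invalid MAC address: $mac" >&2
        return 1
    fi
    # The least significant bit of the first octet marks multicast addresses.
    first=${mac%%:*}
    if [ $((0x$first & 1)) -ne 0 ]; then
        echo "multicast prefix not allowed for a vNIC: $mac" >&2
        return 1
    fi
    echo "$mac"
}

# Example:
#   make_mac 42:44:49: 00:00:00    # svdc-template -> 42:44:49:00:00:00
#   make_mac 42:44:49: 00:00:02    # svdc-director -> 42:44:49:00:00:02
```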

3.2.5 Configure the svdc-director VM and harness

The following sections describe the steps required to configure the svdc-director VM.

3.2.5.1 Set up access to the management network

If you need to add a virtual NIC to access the management server network, configure it to start at system boot:

Edit /etc/sysconfig/network-scripts/ifcfg-eth1 and set ONBOOT=yes. You can assign a static IP address if desired.

Save the file and start the network on eth1.

Ensure you can access the management server network before proceeding.
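You can also script the eth1 change. The following minimal sketch assumes the CentOS 7 network-scripts layout named above. `enable_onboot` is a hypothetical helper that sets ONBOOT=yes whether or not the file already has an ONBOOT line. After the edit, `ifup eth1` (from the CentOS 7 initscripts package) brings the interface up.

```shell
# Hypothetical helper: set ONBOOT=yes in an ifcfg file, replacing an existing
# ONBOOT line or appending one. Other settings are left untouched.
enable_onboot() {
    cfg=$1
    if grep -q '^ONBOOT=' "$cfg"; then
        sed -i 's/^ONBOOT=.*/ONBOOT=yes/' "$cfg"
    else
        echo 'ONBOOT=yes' >> "$cfg"
    fi
}

# Example (on the svdc-director VM):
#   enable_onboot /etc/sysconfig/network-scripts/ifcfg-eth1
#   ifup eth1
```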

The firewalls within the VMs are disabled, and SSH is already configured to allow password-less SSH login between the VMs. All VMs in the SPECvirt Datacenter 2021 environment use the following credentials:

username = root

password = linux99

3.2.5.2 Use directory aliases

The benchmark provides some pre-defined aliases to help with navigation.

| Alias | Path |
|---|---|
| $CP_HOME | /export/home/cp |
| $CP_BIN | /export/home/cp/bin |
| $CP_COMMON | /export/home/cp/config/workloads/specvirt/HV_Operations/common |
| $CP_LOG | /export/home/cp/log |
| $CP_RESULTS | /export/home/cp/log/results/specvirt |
| $CP_VSPHERE | /export/home/cp/config/workloads/specvirt/HV_Operations/vSphere |
| $CP_RHV | /export/home/cp/config/workloads/specvirt/HV_Operations/RHV |

For example, to edit Control.config, you can use the alias $CP_BIN:

vi $CP_BIN/Control.config

3.2.5.3 Edit the Control.config file

The $CP_BIN/Control.config file contains the options you set for a measurement and defines the testbed environment settings as well as run-to-run settings.

Edit the configuration file Control.config to set variables for your testbed:

vi $CP_BIN/Control.config

Before you configure the svdc-director VM, you must set at least the following required values for the svdc-director VM and the testbed environment:

| Control.config parameter | Description |
|---|---|
| virtVendor | vSphere or RHV |
| mgmtServerIP | IP address of management server |
| mgmtServerHostname | Hostname of management server |
| mgmtServerURL | URL of management server. For example, for RHV: mgmtServerURL = https://myserver-rhvm/ovirt-engine/api; for vSphere: mgmtServerURL = https://myserver-vsphere/sdk |
| virtUser | Management server administrator name |
| virtPassword | Management server password |
| virtCert | Management server certificate, if needed |
| offlineHost_1 | Name of host to put in maintenance mode at start of measurement. Use the name you assigned to the host when you created the cluster, as it appears in your management server. |
| templateName | Template name for workload VMs |
| clientTemplateName | Template name for client VMs if storage pool is not shared across client and SUT hosts |
| cluster | SUT cluster name |
| network | SUT network name |
| clientCluster | Client cluster name |
| clientNetwork | Client network name |
| numHosts | Number of SUT hosts (multiple of four) |
| mailStoragePool[0] | Shared storage location for mailserver VMs |
| webStoragePool[0] | Shared storage location for webserver VMs |
| collabStoragePool[0] | Shared storage location for collaboration server VMs |
| HDBStoragePool[0] | Shared storage location for HammerDB VMs |
| BBStoragePool[0] | Shared storage location for BigBench VMs |
| clientStoragePool[0] | Storage location for client VMs |
| vmDataDiskDevice | Guest VM data disk device name (sdb or vdb) |
| MACAddressPrefix | Defaults to 42:44:49 |
| IPAddressPrefix | Defaults to 172.23 |

The parameters above are the minimum parameters you need to set before configuring the svdc-director VM. The entire contents of Control.config are listed in the Appendix.
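Before you run the makeme script, it helps to confirm that none of the required parameters are missing or empty. The sketch below is a hypothetical checker. It assumes that Control.config uses the `name = value` line format shown in this guide's examples (such as `virtCert = ...` and `mailStoragePool[0] = mailPool1`) and treats lines beginning with # as comments. The conditional parameters virtCert and clientTemplateName are left out of the list.

```shell
# Hypothetical pre-flight check for Control.config (not part of the harness).
# ASSUMES "name = value" lines; lines starting with # are comments.

# Required parameters from the table above (conditional ones omitted).
required_params() {
    cat <<'EOF'
virtVendor
mgmtServerIP
mgmtServerHostname
mgmtServerURL
virtUser
virtPassword
offlineHost_1
templateName
cluster
network
clientCluster
clientNetwork
numHosts
mailStoragePool[0]
webStoragePool[0]
collabStoragePool[0]
HDBStoragePool[0]
BBStoragePool[0]
clientStoragePool[0]
vmDataDiskDevice
MACAddressPrefix
IPAddressPrefix
EOF
}

# Prints each required parameter that is missing or empty; returns 1 if any.
missing_params() {
    required_params | awk '
        NR == FNR { need[++n] = $0; next }   # first input: required names
        /^[ \t]*#/ { next }                  # skip comment lines
        {
            eq = index($0, "=")
            if (eq == 0) next
            key = substr($0, 1, eq - 1)
            val = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", key)
            gsub(/^[ \t]+|[ \t]+$/, "", val)
            value[key] = val                 # later lines override earlier ones
        }
        END {
            bad = 0
            for (i = 1; i <= n; i++)
                if (value[need[i]] == "") { print need[i]; bad = 1 }
            exit bad
        }' - "$1"
}

# Example:
#   missing_params "$CP_BIN/Control.config" || echo "set the parameters listed above"
```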

3.2.5.4 Define SUT and/or client host aliases

The svdc-director VM shares SSH keys with the workload VMs to allow password-less login between all VMs. If you intend to submit results for publication, you need to enable password-less login from the svdc-director VM to the SUT hosts.

Edit $CP_BIN/hosts.txt to add SUT host and client host IP addresses and aliases. For example, to add four SUT hosts with the IP address range of 12.22.0.11 to 12.22.0.14, add the following to $CP_BIN/hosts.txt:

12.22.0.11 myhost11.company.com myhost11 sutHost1

12.22.0.12 myhost12.company.com myhost12 sutHost2

12.22.0.13 myhost13.company.com myhost13 sutHost3

12.22.0.14 myhost14.company.com myhost14 sutHost4 offline_host1

Optionally you can add aliases for the client hosts:

12.22.0.21 myclient21.company.com myclient21 clientHost1

12.22.0.22 myclient22.company.com myclient22 clientHost2

During svdc-director VM configuration and during each measurement preparation, the harness writes these values into the svdc-director VM’s /etc/hosts file.
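A malformed hosts.txt line or a missing sutHostN alias is easy to overlook. The following hypothetical checker assumes the one-entry-per-line layout of the example above (IP address, then names and aliases). It also assumes that each SUT host carries a sutHost<N> alias, as in the example. It recognizes only IPv4 addresses.

```shell
# Hypothetical hosts.txt checker. Each entry must be "IPv4 name [aliases...]",
# and aliases sutHost1..sutHostN must all appear (N = numHosts).
check_hosts_txt() {
    awk -v n="$2" '
        /^[ \t]*#/ { next }      # skip comments
        NF == 0 { next }         # skip blank lines
        {
            if (NF < 2 || $1 !~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/) {
                printf "line %d: expected \"IP name [aliases...]\": %s\n", NR, $0
                bad = 1
            }
            for (i = 2; i <= NF; i++) seen[$i] = 1
        }
        END {
            for (h = 1; h <= n; h++)
                if (!(("sutHost" h) in seen)) { print "missing alias sutHost" h; bad = 1 }
            exit bad
        }' "$1"
}

# Example:
#   check_hosts_txt "$CP_BIN/hosts.txt" 4
```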

3.2.5.5 Generate management server certificate as needed

If your hypervisor requires one, obtain the management server certificate and set its location in Control.config. For example, on RHV, download the CA certificate with the following command:

curl -k 'https://{mgmt-server-fqdn}/ovirt-engine/services/pki-resource?resource=ca-certificate&format=X509-PEM-CA' -o /export/home/cp/config/workloads/specvirt/HV_Operations/RHV/pki-resource.cer

We recommend that you place this file in /export/home/cp/config/workloads/specvirt/HV_Operations/RHV and set the value of the virtCert parameter in Control.config to the full path of this file. For example:

virtCert = /export/home/cp/config/workloads/specvirt/HV_Operations/RHV/pki-resource.cer
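Without the `--fail` option, curl also saves the body of an HTTP error response, so an error page can end up in the .cer file. The following minimal sketch uses a hypothetical helper that checks for the PEM certificate markers. If OpenSSL is installed, `openssl x509 -in <file> -noout -subject -enddate` can also decode the certificate.

```shell
# Hypothetical sanity check: confirm the downloaded file is a PEM certificate
# and not, for example, an HTML error page saved by curl.
check_pem_cert() {
    if grep -q -- '-----BEGIN CERTIFICATE-----' "$1" &&
       grep -q -- '-----END CERTIFICATE-----' "$1"; then
        echo "PEM certificate found in $1"
    else
        echo "$1 does not contain a PEM certificate" >&2
        return 1
    fi
}

# Example (path from the virtCert setting above):
#   check_pem_cert /export/home/cp/config/workloads/specvirt/HV_Operations/RHV/pki-resource.cer
```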

3.2.5.6 Run the “makeme” svdc-director VM script

After you edit the Control.config and hosts.txt files, run the /root/makeme_svdc-director.sh script to turn the cloned VM into the svdc-director VM. The makeme script configures the svdc-director VM networking and the infrastructure services needed to run the benchmark:

cd /root

./makeme_svdc-director.sh

Confirm the settings that the script reads from Control.config. Allow the script to reboot the VM or reboot it manually.

This script:

Configures the svdc-director VM hostname, IP address, and dnsmasq server for DNS and DHCP

Adds a 75 GB virtual disk and mounts it to /datastore

Optionally sets up password-less login to the SUT and client hosts

After the svdc-director VM restarts, start a remote console window and log in with username root and password linux99.

3.2.7 Set up the GNOME Desktop for LiveView (recommended)

You can start and stop measurements either through the command line or through the LiveView GUI. The optional LiveView GUI requires GNOME Desktop, and the svdc-director VM includes a script to configure this. After you run the makeme_svdc-director.sh script to configure the svdc-director VM environment:

Log into the svdc-director VM, and verify that the IP address is correct.

Run setup_GnomeDesktop.sh:

cd /root

./setup_GnomeDesktop.sh

This script:

Installs GNOME Desktop group package

Sets the run level to start the CentOS GUI at boot time

Asks for the VNC password and sets up VNC to allow remote connections to session :1

Asks to reboot the VM

Allow the VM to reboot. After the VM reboots, the CentOS GUI is displayed.

Log into a console window or VNC session :1 with username root and password linux99.

Accept the license and answer the questions with defaults when you log into the GNOME GUI.

3.2.8 Configure time synchronization

The svdc-director VM provides an isolated NTP server, and the workload VMs synchronize automatically to it. Set all hosts and the management server to synchronize time to the svdc-director VM.

3.3 Create the client VMs

WARNING: The client driver VMs must run on one or more hosts, or a cluster, separate from the SUT. See the SPECvirt Datacenter 2021 Run and Reporting Rules for details.

Each tile requires a client. You can deploy clients to a client cluster or to an individual client host and its local storage.

3.3.1 Deploy a client using settings in Control.config

To create clients automatically when using a client cluster and shared storage, you can use DeployClientTiles.sh, which reads its settings from Control.config. Set the value of numTilesPhase1 to 1 and numTilesPhase3 to the total number of clients to create.

Set the value of clientStoragePool[0] to your target storage pool.

Set the value of clientCluster to your target client cluster.

Issue the commands:

cd $CP_COMMON

./DeployClientTiles.sh
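You can script the Control.config edits in this section, as well as the numTilesPhase1 and numTilesPhase3 updates in Section 3.4.4. The following minimal sketch uses a hypothetical `set_config_value` helper and assumes the `name = value` format shown in this guide. It rewrites an existing uncommented line for the exact parameter name, or appends one. It writes back in place so that the file keeps its permissions.

```shell
# Hypothetical helper: set "key = value" in a Control.config-style file.
# Matches the key exactly (so mailStoragePool[0] and mailStoragePool[1]
# stay distinct), skips commented lines, and appends the key if absent.
# Note: awk -v interprets backslash escapes, so avoid backslashes in values.
set_config_value() {
    file=$1 key=$2 value=$3
    tmp=$(mktemp) || return 1
    awk -v key="$key" -v value="$value" '
        !/^[ \t]*#/ && index($0, "=") > 0 {
            k = substr($0, 1, index($0, "=") - 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            if (k == key) { print key " = " value; found = 1; next }
        }
        { print }
        END { if (!found) print key " = " value }' "$file" > "$tmp" &&
        cat "$tmp" > "$file"    # overwrite in place to keep file permissions
    status=$?
    rm -f "$tmp"
    return "$status"
}

# Example: prepare DeployClientTiles.sh to create four clients
#   set_config_value "$CP_BIN/Control.config" numTilesPhase1 1
#   set_config_value "$CP_BIN/Control.config" numTilesPhase3 4
```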

3.3.2 Deploy a client to host and/or local host storage pool

You can use DeployClientTiles-custom.sh to deploy additional clients, to deploy to a specific client host instead of a cluster, and to deploy to a client host’s local storage pool:

./DeployClientTiles-custom.sh <startTile> <endTile> [clientHost] [clientStoragePool]

For example, to add clients eight, nine, and ten to the client cluster and storage pool in Control.config:

./DeployClientTiles-custom.sh 8 10

To create two client VMs on a client host named host21 with a local, non-shared storage pool named LUN5:

./DeployClientTiles-custom.sh 1 2 host21 LUN5

3.4 Provision tiles

This section describes how to create tiles and add tiles as needed.

3.4.1 Assign storage pools

The benchmark deploys the workload VMs to shared storage pools (also called datastores, mount points, or LUNs). These storage pools are shared among the hosts in the SUT, and you assign the same or different storage pools to each workload using the following Control.config parameters.

| Control.config parameter | Workload storage location |
|---|---|
| mailStoragePool[0] | Mailserver VMs |
| webStoragePool[0] | Webserver VMs |
| collabStoragePool[0] | Collaboration server VMs |
| HDBStoragePool[0] | HammerDB VMs |
| BBStoragePool[0] | BigBench VMs |

You can use multiple storage pools for a given workload type. Add one line per storage pool and increment the index in brackets. For example:

mailStoragePool[0] = mailPool1

mailStoragePool[1] = mailPool2

mailStoragePool[2] = mailPool3

The deploy scripts evenly place VMs of the same workload type across all defined storage pools for that workload based on the tile number.
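This guide does not give the exact placement formula. As an illustration only, round-robin by tile number is one way to spread tiles evenly across pools. The hypothetical `pool_for_tile` helper below assumes that scheme, which may not match the harness’s actual mapping.

```shell
# Illustration only: ASSUMES round-robin placement,
# pool index = (tile - 1) mod number_of_pools.
# Usage: pool_for_tile <tile> <pool0> [pool1 ...]
pool_for_tile() {
    tile=$1
    shift
    [ "$#" -gt 0 ] || { echo "no storage pools given" >&2; return 1; }
    shift $(( (tile - 1) % $# ))
    echo "$1"
}

# Example with the three mail pools above:
#   pool_for_tile 4 mailPool1 mailPool2 mailPool3    # prints mailPool1
```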

3.4.2 Assign vCPUs to workload VMs

The only system resource you can change on any of the workload VMs is the vCPU allocation. To adjust the number of vCPUs from the default, change the following values in Control.config:

| Control.config parameter | Default value | VM type |
|---|---|---|
| vCpuAIO | 4 | mailserver, webserver, collaboration servers (2) |
| vCpuHapp | 2 | HammerDB application server |
| vCpuHdb | 8 | HammerDB database server |
| vCpuBBnn | 8 | BigBench name node |
| vCpuBBdn | 8 | BigBench data nodes (4) |
| vCpuBBdb | 8 | BigBench database server |
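The per-tile vCPU total follows from these parameters and the VM counts in Section 2.1.1. Four VMs use vCpuAIO (the mailserver, the webserver, and two collaboration servers) and the four BigBench data nodes use vCpuBBdn. The other four VMs each use one setting. The following minimal sketch uses a hypothetical helper that reads the values from environment variables named after the Control.config parameters and falls back to the defaults in the table.

```shell
# Hypothetical helper: total workload vCPUs for N tiles.
# Reads vCpu* values from the environment, defaulting to the table above.
tile_vcpus() {
    tiles=${1:-1}
    aio=${vCpuAIO:-4}   happ=${vCpuHapp:-2} hdb=${vCpuHdb:-8}
    bbnn=${vCpuBBnn:-8} bbdn=${vCpuBBdn:-8} bbdb=${vCpuBBdb:-8}
    echo $(( tiles * (4 * aio + happ + hdb + bbnn + 4 * bbdn + bbdb) ))
}

# Examples:
#   tile_vcpus               # 74, the default per-tile total
#   vCpuBBdn=4 tile_vcpus 2  # 2 * (16 + 2 + 8 + 8 + 16 + 8) = 116
```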

3.4.3 Use workload VM deployment scripts

The VM deployment and VM management scripts reside under $CP_COMMON. These scripts call hypervisor-specific management scripts which perform functions such as:

Deploy a VM

Power on or off a VM

Delete a VM

Enter or exit host maintenance mode

The hypervisor-specific scripts reside in:

/export/home/cp/config/workloads/specvirt/HV_Operations/$virtVendor

Note: For the two supplied $virtVendor toolkits, you can use the following aliases: $CP_VSPHERE and $CP_RHV.

Running prepSUT.sh creates all the required workload VMs for all tiles specified in the Control.config parameter numTilesPhase3 in parallel. Running prepSUT.sh serial creates all the required workload VMs for all tiles specified in the Control.config parameter numTilesPhase3 serially. Deploying in parallel saves time but might overload the system. If you encounter problems with a parallel deployment, deploy serially, which takes longer but places less load on the system.

Note that the departmental VMs are deployed only at runtime.

From the root account on the svdc-director VM:

cd $CP_COMMON

./prepSUT.sh

or, to deploy serially:

./prepSUT.sh serial

This script:

Reads the testbed-specific values in $CP_BIN/Control.config (such as host and management server address and login information, testbed network name, and SUT name)

Powers off and deletes any workload VMs if they exist

Creates and loads the HammerDB database, then saves a compressed backup of it on the svdc-director VM to /opt/hammerdb_mysql_backup/hammerDB-backup.tgz

Note: This can take a considerable amount of time during which the HammerDB VMs appear idle.

For each tile it also:

Deploys and powers on the tile’s six BigBench VMs, creates the Hadoop cluster, and loads its dataset

Deploys the tile’s two HammerDB VMs

Powers on the HammerDB database server VM and restores its database from the svdc-director VM

Powers off all workload VMs

It takes several minutes (possibly an hour or more depending on your configuration) to create the BigBench and HammerDB VMs.

To check the progress of tile creation, view $CP_COMMON/prepSUT.log:

Fri May 8 06:20:22 UTC 2020 Deleting BigBench VMs on Tile 1

Fri May 8 06:20:28 UTC 2020 Placing every 4th host in cluster into maintenance mode

numHostsToRemove= 1

Fri May 8 06:20:28 UTC 2020 removing offlineHost_1

Fri May 8 06:20:28 UTC 2020 calling ../$virtVendor/EnterMaintenanceMode.sh offlineHost_1

Fri May 8 06:20:29 UTC 2020 Deploying BigBench VMs for full Tile: 1

Checking if HammerDB backup exists

Fri May 8 06:21:29 UTC 2020 HammerDB Backup does not exist. Loading database....

Fri May 8 06:56:54 UTC 2020 Done Deploying BigBench VMs for Tile 1

Fri May 8 07:07:42 UTC 2020 HammerDB Database load and backup completed

Fri May 8 07:07:42 UTC 2020 Creating HammerDB VMs for Tile 1 (partialTile value=0)

Fri May 8 07:10:54 UTC 2020 Configuring HammerDB VM for tile 1

Fri May 8 07:13:05 UTC 2020 Copying HammerDB Backup to Hammer DB VM for tile 1

Fri May 8 07:14:22 UTC 2020 Done Deploying HammerDB VMs for Tile 1

Check this log frequently for the status of tile deployment. Before proceeding, ensure the log shows “Done Deploying” for all workloads across all tiles.

To verify that all BigBench and HammerDB VMs are configured and ready, count the completed deployments:

grep "Done Deploying BigBench" prepSUT.log | wc -l

grep "Done Deploying HammerDB" prepSUT.log | wc -l

The SUT is ready to run a measurement when both counts match numTilesPhase3 in Control.config.
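The two counts can also be checked in one step. The following is a minimal sketch, not part of the harness: the function name check_prepsut_log is hypothetical, the expected tile count is passed in by the caller, and it assumes whole tiles only (a partial final tile may not deploy every workload).

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): confirm prepSUT.log reports "Done Deploying"
# once per tile for both BigBench and HammerDB. Assumes whole tiles only.
check_prepsut_log() {
  local log="$1" expected="$2" workload count status=0
  [ -r "$log" ] || { echo "cannot read ${log}" >&2; return 2; }
  for workload in BigBench HammerDB; do
    # grep -c prints the number of matching lines (0 when nothing matches)
    count=$(grep -c "Done Deploying ${workload}" "$log")
    if [ "$count" -eq "$expected" ]; then
      echo "${workload}: ${count}/${expected} tiles deployed"
    else
      echo "${workload}: ${count}/${expected} tiles deployed (not ready)"
      status=1
    fi
  done
  return "$status"
}
```

For example, check_prepsut_log "$CP_COMMON/prepSUT.log" 3 returns a non-zero status until both workloads report three completed tiles.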

Also check each tile’s deployment log for any errors during BigBench data generation. To check if deployment and BigBench data generation succeeded:

grep Succeeded $CP_LOG/deploy-tilexxx.log

Make sure you see the following for each tile’s log:

deploy-tile1.log:BB DataGen Succeeded svdc-t001-bbnn

deploy-tile2.log:BB DataGen Succeeded svdc-t002-bbnn

...
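The per-tile check above can be looped over all tiles. This is a sketch under two assumptions: the logs follow the deploy-tile<N>.log naming shown above, and the log directory (for example, $CP_LOG) and tile count are passed in. The function name check_datagen is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): verify that each tile's deployment log
# contains "BB DataGen Succeeded". Assumes logs are named deploy-tile<N>.log.
check_datagen() {
  local logdir="$1" tiles="$2" tile log failed=0
  for ((tile = 1; tile <= tiles; tile++)); do
    log="${logdir}/deploy-tile${tile}.log"
    if grep -q "BB DataGen Succeeded" "$log" 2> /dev/null; then
      echo "tile ${tile}: OK"
    else
      echo "tile ${tile}: BigBench data generation not confirmed (${log})"
      failed=1
    fi
  done
  return "$failed"
}
```

Usage: check_datagen "$CP_LOG" 2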

3.4.4 Add tiles with the prepSUT-addTiles.sh script¶

You can manually redeploy or add tiles of workload VMs with the prepSUT-addTiles.sh script, where X is the first additional tile to create and Y is the last:

$CP_COMMON/prepSUT-addTiles.sh X Y

To add a single tile:

$CP_COMMON/prepSUT-addTiles.sh X X

This script powers off all workload VMs before creating new tiles. Before proceeding, ensure the $CP_COMMON/prepSUT.log file shows “Done Deploying” for all workloads across all new tiles.

After adding one or more tiles, edit $CP_BIN/Control.config to update the values for numTilesPhase1 and numTilesPhase3.
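If you prefer to script this edit, the sketch below rewrites an existing "name = value" line in place. It is illustrative only: the function name set_param is hypothetical, it does not uncomment lines such as #offlineHost_2, and it assumes the parameter name contains no regular-expression metacharacters (so array entries like mailStoragePool[0] are not handled).

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): set "name = value" for an existing,
# uncommented parameter in a Control.config-style file.
set_param() {
  local file="$1" name="$2" value="$3" tmp
  tmp=$(mktemp) || return 1
  awk -v key="$name" -v val="$value" '
    # Replace the line that starts with "key =" (or "key=")
    $0 ~ ("^" key "[ \t]*=") { print key " = " val; found = 1; next }
    { print }
    END { exit found ? 0 : 1 }' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Copy back with cat so the original file keeps its permissions
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example, after adding tile three: set_param "$CP_BIN/Control.config" numTilesPhase3 3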

When you add a tile, remember to generate the client for it. See Section 3.3 for more information on creating clients.

3.4.5 Example tile¶

Using the assignment scheme detailed in the SPECvirt Datacenter 2021 Design Overview, the following table shows the default VM name, IP address assignments, and MAC addresses for tile one:

| Tile one VM name | VM type | Tile one default IP address | Tile one default MAC address |
|---|---|---|---|
| svdc-t001-client | client | 172.23.1.20 | 42:44:49:00:01:20 |
| svdc-t001-mail | departmental | 172.23.1.1 | 42:44:49:00:01:01 |
| svdc-t001-web | departmental | 172.23.1.2 | 42:44:49:00:01:02 |
| svdc-t001-collab1 | departmental | 172.23.1.3 | 42:44:49:00:01:03 |
| svdc-t001-collab2 | departmental | 172.23.1.4 | 42:44:49:00:01:04 |
| svdc-t001-hdb | HammerDB DBserver | 172.23.1.6 | 42:44:49:00:01:06 |
| svdc-t001-happ | HammerDB Appserver | 172.23.1.7 | 42:44:49:00:01:07 |
| svdc-t001-bbnn | BigBench name node | 172.23.1.8 | 42:44:49:00:01:08 |
| svdc-t001-bbdn1 | BigBench data node | 172.23.1.9 | 42:44:49:00:01:09 |
| svdc-t001-bbdn2 | BigBench data node | 172.23.1.10 | 42:44:49:00:01:10 |
| svdc-t001-bbdn3 | BigBench data node | 172.23.1.11 | 42:44:49:00:01:11 |
| svdc-t001-bbdn4 | BigBench data node | 172.23.1.12 | 42:44:49:00:01:12 |
| svdc-t001-bbdb | BigBench dbserver | 172.23.1.13 | 42:44:49:00:01:13 |
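The table follows a regular pattern built from the Control.config parameters IPAddressPrefix and MACAddressPrefix. The sketch below reproduces the tile one rows. It is a hypothetical helper, and its MAC layout for other tiles is an assumption (tile number zero-padded to four digits and split into two fields, last octet written as two decimal digits); confirm the actual scheme in the SPECvirt Datacenter 2021 Design Overview.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): derive a VM's default IP and MAC from the
# tile number and the VM's last octet (1=mail, 2=web, ..., 20=client).
# ASSUMPTION: tile N -> MAC fields from a 4-digit zero-padded N (1 -> 00:01);
# only tile one is confirmed by the table above.
vm_addresses() {
  local tile="$1" octet="$2"
  local ip_prefix="${3:-172.23.}" mac_prefix="${4:-42:44:49:}"
  local t
  t=$(printf '%04d' "$tile")
  printf 'IP=%s%d.%d MAC=%s%s:%s:%02d\n' \
    "$ip_prefix" "$tile" "$octet" "$mac_prefix" "${t:0:2}" "${t:2:2}" "$octet"
}
```

For example, vm_addresses 1 10 prints the svdc-t001-bbdn2 row: IP=172.23.1.10 MAC=42:44:49:00:01:10.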

Workload VMs do not require a CD/DVD device or additional vNIC.

4.0 Run the benchmark¶

The following sections discuss the steps required to initialize and run the benchmark.

4.1 Edit the Testbed.config file¶

Edit the $CP_BIN/Testbed.config file to provide testbed-specific configuration information that describes the hardware and software used, hypervisor tunings applied, and any other details required to reproduce the environment.

4.2 Edit the Control.config file¶

Edit the $CP_BIN/Control.config file to define:

Offline host(s) (in maintenance mode at measurement start)

Number of tiles to run in each phase

Workload start delays (optional)

See the list of all Control.config parameters in the Appendix.

4.2.1 Set SUT host pre-measurement states¶

During benchmark preparation and Phase 1, the harness places 25% of the hosts (one host in every four) in maintenance mode. During Phase 2, the harness takes the offline host(s) out of maintenance mode, making them available for load balancing and for the additional workloads in Phase 3. You specify the host(s) to enter and exit maintenance mode with the Control.config parameter offlineHost_1 (and offlineHost_2 and so forth).
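Because one host in four is taken offline and numHosts must be a multiple of four (see the Appendix), the number of offlineHost_N entries follows directly from numHosts. A small sketch of that arithmetic, with a hypothetical function name:

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): number of offlineHost_N entries implied by
# numHosts, i.e. one offline host per four hosts in the cluster.
offline_host_count() {
  local num_hosts="$1"
  if (( num_hosts <= 0 || num_hosts % 4 != 0 )); then
    echo "numHosts=${num_hosts} is not a positive multiple of four" >&2
    return 1
  fi
  echo $(( num_hosts / 4 ))
}
```

For example, an eight-host cluster needs offlineHost_1 and offlineHost_2.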

4.2.2 Set number of tiles and partial tiles¶

Set the number of tiles with the Control.config parameters numTilesPhase1 and numTilesPhase3:

numTilesPhase1 is the number of tiles to run at measurement start and during Phase 1

numTilesPhase3 is the total number of tiles to run during Phase 3 until the end of the measurement run

To achieve system saturation, the final tile may be a full tile or fractional tile. For full tiles, use the whole number only. That is, use “5” not “5.0”. For partial tiles, use increments of .2 to add workloads in the following order:

| Fraction | Workloads |
|---|---|
| .2 | mail |
| .4 | mail + web |
| .6 | mail + web + collaboration |
| .8 | mail + web + collaboration + HammerDB |

For example, numTilesPhase1 = 6.4 runs six full tiles plus a partial seventh tile containing only the mail and web workloads at measurement start. With numTilesPhase3 = 8.2, the harness then starts the remaining collaboration, HammerDB, and BigBench workloads on tile seven, starts an additional full tile eight, and deploys the mail workload on tile nine.
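The fraction table can be read mechanically. The sketch below (hypothetical function name) decodes a tile value the way the table describes, assuming the value is either a whole number or has a single decimal digit of 2, 4, 6, or 8; it rejects values such as “5.0”.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): describe a numTilesPhase1/numTilesPhase3
# value such as "6.4" using the partial-tile table above.
describe_tiles() {
  local value="$1" full frac workloads
  full="${value%%.*}"
  if [[ "$value" == *.* ]]; then frac="${value#*.}"; else frac=""; fi
  case "$frac" in
    "") echo "${full} full tiles"; return 0 ;;
    2) workloads="mail" ;;
    4) workloads="mail + web" ;;
    6) workloads="mail + web + collaboration" ;;
    8) workloads="mail + web + collaboration + HammerDB" ;;
    *) echo "invalid tile value: ${value}" >&2; return 1 ;;
  esac
  echo "${full} full tiles + tile $((full + 1)) with ${workloads}"
}
```

For the example above, describe_tiles 6.4 prints "6 full tiles + tile 7 with mail + web", and describe_tiles 8.2 prints "8 full tiles + tile 9 with mail".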

4.2.3 Set other configurable parameters (optional, compliant)¶

4.2.3.1 Set debug level¶

A debug level of 1 minimizes logging output. When troubleshooting, you can increase the debug level to any value from 1 to 9 (the Control.config file shown in the Appendix sets debugLevel = 3). To use the highest debug level:

debugLevel = 9

4.2.3.2 Set user custom pre-run and post-run scripts¶

The harness provides two user scripts in the hypervisor-specific directories:

userInit.sh

userExit.sh

The harness calls these scripts before and after running a measurement, allowing you to perform additional pre- and post-measurement tasks. For example, you can use userInit.sh to collect vSphere performance data with esxtop, then use userExit.sh to stop esxtop and copy the esxtop data files to the svdc-director VM for additional processing.

4.2.3.3 Change workload start delays and DelayFactors¶

To reduce the possibility of overloading the SUT at measurement start, you can set delays between the start of each workload. The default workload delays are:

mailDelay = 30

webDelay = 30

collab1Delay = 30

collab2Delay = 1

HDBDelay = 30

bigBenchDelay = 120

For example, with webDelay = 30, the web workload starts 30 seconds after the mail workload starts.

You can also change delay factors from their defaults to extend workload deployment delays on a per-tile basis:

mailDelayFactor = 1.0

webDelayFactor = 1.0

collab1DelayFactor = 1.0

collab2DelayFactor = 1.0

HDBDelayFactor = 1.0

bigBenchDelayFactor = 1.0

For example, if mailDelay = 30 and mailDelayFactor = 1.2, the mailDelay for tile two’s mail workload is 36 (30 * 1.2). For tile three’s mail workload, the mailDelay is 43 (36 * 1.2 = 43.2, applied as 43).
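The per-tile delay grows geometrically: the base delay multiplied by the factor once for each tile after the first. The sketch below reproduces the 30, 36, 43 sequence above. The function name is hypothetical, and the rounding to whole seconds is an assumption that matches this example but is not documented here. Note also that the harness always uses zero for tile one’s mailDelay.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): per-tile delay = base * factor^(tile - 1).
# ASSUMPTION: compounding uses the unrounded value, rounded to whole seconds.
tile_delay() {
  local base="$1" factor="$2" tile="$3"
  awk -v b="$base" -v f="$factor" -v t="$tile" 'BEGIN {
    d = b
    for (i = 2; i <= t; i++) d *= f     # apply the factor once per extra tile
    printf "%d\n", d + 0.5              # round to the nearest second
  }'
}
```

For example, tile_delay 30 1.2 3 prints 43.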

You can experiment with these settings since changing any of them does not affect compliance. Increasing these delays may be useful when optimizing benchmark ramp up as the harness deploys the workload VMs. However, increasing delays may affect the score.

4.3 Start a measurement¶

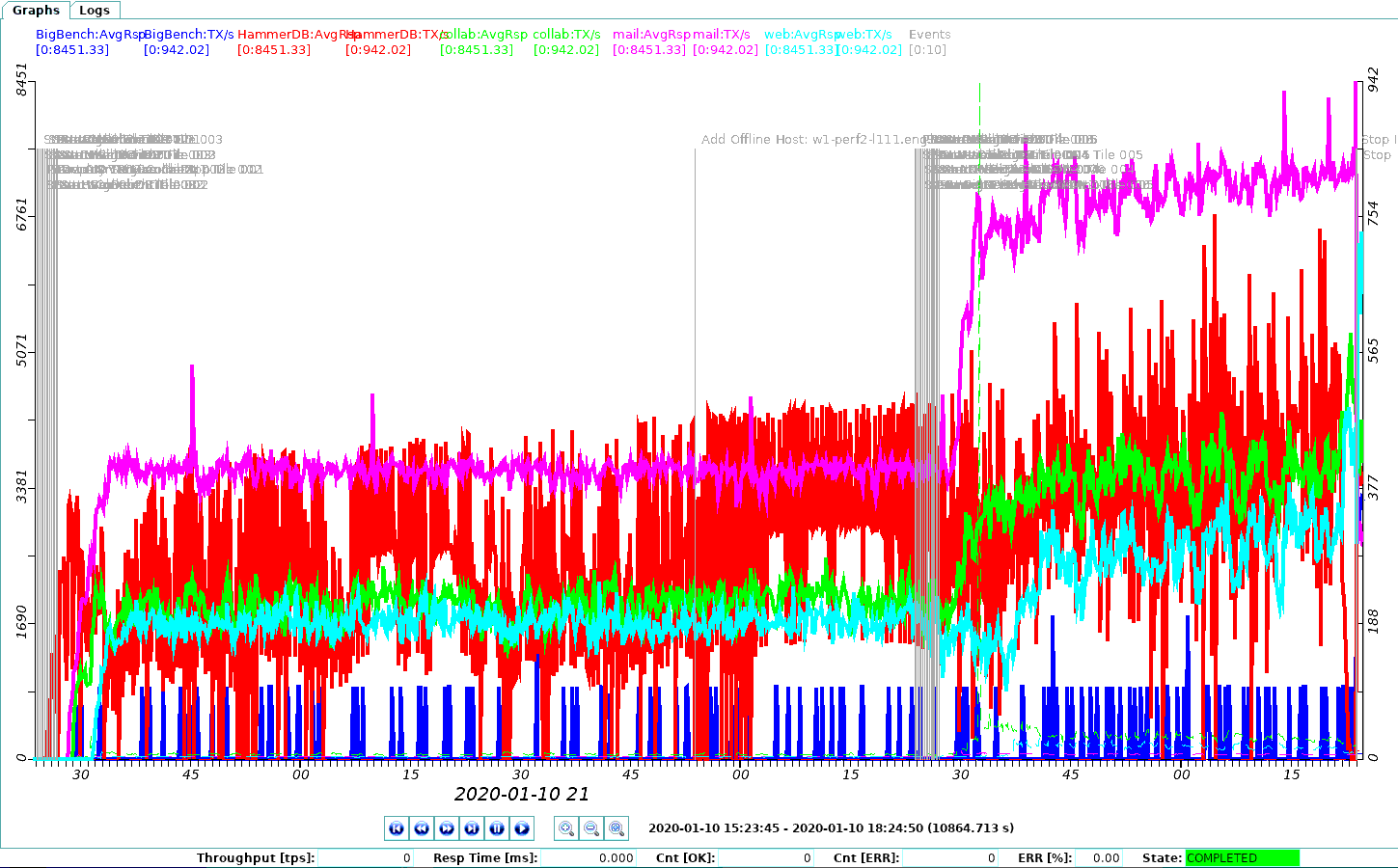

4.3.1 Run runspecvirt.sh and launch the LiveView GUI¶

The runspecvirt.sh wrapper script prepares the SUT for a benchmark run. To run a measurement:

cd $CP_BIN

./runspecvirt.sh [testDescription] [nogui]

testDescription is an optional descriptive name appended to the end of the results folder name, for example, “3tiles-4hosts”.

The name of the benchmark run is its date and time stamp plus the optional testDescription, for example, “2020-07-14_23-33-11_3tiles-4hosts”.

nogui is an optional parameter if you want to run the measurement without invoking the LiveView GUI.

runspecvirt.sh calls prepTestRun.sh to initialize the VMs and clients, then invokes the LiveView GUI.

When using LiveView, ensure that the status in the bottom-right corner reads CONNECTED.

You can analyze the throughput or event tags for any chosen interval length within a measurement. To select an interval, left-click and hold to draw a box around the region to zoom in. You can repeat this multiple times until you reach the maximum zoom.

Clicking the “+” (plus icon) zooms in on the current active portion of the run.

To zoom out, click the “-” (minus icon) at the bottom of the graph.

When you zoom out, you can see measurement start time, current time, and elapsed time under the graph.

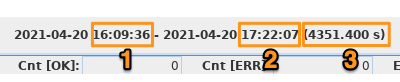

In the example above:

Measurement start time is 16:09:36

Current time is 17:22:07

Elapsed time since start of measurement is 4351.400 seconds
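The elapsed time is simply the difference between the two clock times: 17:22:07 minus 16:09:36 is 1 hour, 12 minutes, and 31 seconds, or 4351 seconds (LiveView also shows the fractional part). A minimal sketch of that arithmetic for two same-day times, using a hypothetical function name:

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): elapsed whole seconds between two same-day
# HH:MM:SS clock times.
elapsed_seconds() {
  local start="$1" now="$2" s_h s_m s_s n_h n_m n_s
  IFS=: read -r s_h s_m s_s <<< "$start"
  IFS=: read -r n_h n_m n_s <<< "$now"
  # 10# forces base 10 so fields such as "09" are not read as octal
  echo $(( (10#$n_h * 3600 + 10#$n_m * 60 + 10#$n_s) \
         - (10#$s_h * 3600 + 10#$s_m * 60 + 10#$s_s) ))
}
```

For the example above, elapsed_seconds 16:09:36 17:22:07 prints 4351.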

4.3.2 Run a measurement manually with LiveView¶

Run the testbed preparation script:

cd $CP_BIN

./prepTestRun.sh

If you use a custom initialization script, run the hypervisor-specific userInit.sh:

/export/home/cp/config/workloads/specvirt/HV_Operations/{virtVendor}/userInit.sh

Launch LiveView:

./liveview.sh

Click File -> Workload. Optionally add a measurement description in the Run Name field, then click Submit.

4.3.3 Monitor a measurement from the CLI¶

After the workloads start, you can monitor transactions and response times per tile while running a measurement. The harness launches $CP_BIN/client-log-summary.sh to track transactions and response times by workload. After you start a measurement, issue:

tail -f ../log/log-poll-<testDescription>.log

Output is similar to:

Settings are: partialPhase3=, numTilesPhase3=2, fractionalTilePhase3=0

Tile 001 stats:

15.07.2020 21:02:54 INFO [Thread-46] svdc-t001-collab1 collab: Avg RT= 192

15.07.2020 21:02:54 INFO [Thread-46] svdc-t001-collab1 collab: Txns/sec= 52

15.07.2020 21:02:53 INFO [Thread-33] svdc-t001-collab2 collab: Avg RT= 148

15.07.2020 21:02:53 INFO [Thread-33] svdc-t001-collab2 collab: Txns/sec= 67

15.07.2020 21:02:52 INFO [Thread-11] svdc-t001-mail mail: Avg RT= 68

15.07.2020 21:02:52 INFO [Thread-11] svdc-t001-mail mail: Txns/sec= 128

15.07.2020 21:02:52 INFO [Thread-47] svdc-t001-web webSUT: Avg RT= 106

15.07.2020 21:02:52 INFO [Thread-47] svdc-t001-web webSUT: Txns/sec= 86

15.07.2020 21:00:00 INFO [Thread-7] BigBench: COMPLETED QUERY 20. Query Response Time = 238 seconds.

15.07.2020 21:02:54 INFO [Thread-7] HammerDB: NewOrderTPS= 292.80

Tile 002 stats:

15.07.2020 21:02:58 INFO [Thread-66] svdc-t002-collab1 collab: Avg RT= 155

15.07.2020 21:02:58 INFO [Thread-66] svdc-t002-collab1 collab: Txns/sec= 64

15.07.2020 21:02:56 INFO [Thread-55] svdc-t002-collab2 collab: Avg RT= 190

15.07.2020 21:02:56 INFO [Thread-55] svdc-t002-collab2 collab: Txns/sec= 52

15.07.2020 21:02:55 INFO [Thread-31] svdc-t002-mail mail: Avg RT= 69

15.07.2020 21:02:55 INFO [Thread-31] svdc-t002-mail mail: Txns/sec= 126

15.07.2020 21:02:59 INFO [Thread-67] svdc-t002-web webSUT: Avg RT= 110

15.07.2020 21:02:59 INFO [Thread-67] svdc-t002-web webSUT: Txns/sec= 84

15.07.2020 20:59:34 INFO [Thread-7] BigBench: COMPLETED QUERY 19. Query Response Time = 340 seconds.

15.07.2020 21:02:54 INFO [Thread-7] HammerDB: NewOrderTPS= 295.40

The output shows a snapshot of the workload transactions and their response times for a two-tile measurement. For a description of all deployment and measurement logs, see Appendix B.
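To pull just the throughput values out of this output, you can filter the Txns/sec lines. The sketch below assumes the line layout shown above (VM name in the fifth whitespace-separated field, value last) and keeps the most recent value per VM; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): latest Txns/sec value per departmental VM
# from log-poll output. Reads the named files, or stdin if none are given.
txns_per_vm() {
  awk '/Txns\/sec=/ { rate[$5] = $NF }
       END { for (vm in rate) print vm, rate[vm] }' "$@" | sort
}
```

Usage: txns_per_vm ../log/log-poll-<testDescription>.log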

5.0 View measurement results¶

Once all run intervals complete successfully, the harness stops the workload processes on the clients, collects the workload and harness logs, and copies them to the measurement’s results directory. It then generates a raw (.raw) file, a full disclosure report (FDR) (.html), and a submission (.sub) file.

5.1 View results files¶

Results are stored in $CP_RESULTS (/export/home/cp/results/specvirt). Each measurement’s results directory is named with the date and time stamp of the measurement start, with the optional <testDescription> appended (for example, 2021-02-23_16-23-48_2-3tile-3hr). The results directory contains the following files:

| File | Description |
|---|---|
| specvirt-datacenter-<testDescription>.raw | Raw file |
| specvirt-datacenter-<testDescription>-perf.html | Full disclosure report |
| specvirt-datacenter-<testDescription>.sub | Submission file |
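Because directory names begin with a YYYY-MM-DD_HH-MM-SS stamp, sorting them by name orders them by start time. The following sketch (hypothetical function name) uses that property to print the newest results directory and the result files it contains.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): print the newest results directory and its
# .raw/.html/.sub files. Relies on the date-time stamp at the start of each
# directory name sorting in chronological order.
latest_results() {
  local root="${1:-${CP_RESULTS:-/export/home/cp/results/specvirt}}"
  local d f latest=""
  # Glob matches are sorted, so the last match has the newest stamp
  for d in "$root"/[0-9][0-9][0-9][0-9]-*/; do
    [ -d "$d" ] && latest="${d%/}"
  done
  [ -n "$latest" ] || { echo "no results directories in ${root}" >&2; return 1; }
  echo "$latest"
  for f in "$latest"/*.raw "$latest"/*.html "$latest"/*.sub; do
    [ -e "$f" ] && echo "$f"
  done
  return 0
}
```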

5.2 Regenerate the HTML report (FDR)¶

If you plan to submit results to SPEC for review and publication, check that the values you entered in Testbed.config are correct. That is, ensure the Notes section contains all details required to reproduce the benchmark environment, review for typographical errors, and verify the product names and version numbers. If you receive feedback from SPEC that requires you to make any changes, you need to regenerate the FDR.

Modify the properties in the raw file rather than in Control.config or Testbed.config. You may edit only the fields above the DO NOT EDIT line in the raw file.

Once you have edited the raw file, regenerate the formatted results by invoking the reporter with the raw file name as a parameter. The reporter first backs up the original raw file to specvirt-datacenter-<testDescription>.raw.backup, then generates a new raw file and the formatted results:

java -jar reporter.jar -r <raw_file_name>

5.3 Regenerate the submission (.sub) file if needed¶

If you have a submission file and want to recreate the raw file from which it was generated, you can invoke:

java -jar reporter.jar -s <sub_file_name>

This strips out the extra characters from the submission file so that you can view or work with the original raw file. This is the recommended method for editing a file post-submission because it ensures you are not working with an outdated version of the corresponding raw file and potentially introducing previously corrected errors into the “corrected” submission file.

See Section 6.0 in the SPECvirt Datacenter 2021 Run and Reporting Rules for instructions on submitting a measurement for SPEC publication.

6.0 Control.config experimental options¶

Use this section to change benchmark and workload parameters for ease of benchmark tuning and for research purposes.

For troubleshooting and tuning, you can edit fixed Control.config parameters such as run time and workloads to run. Editing these parameters results in a non-compliant run but can help when finding the SUT’s maximum load.

Remember to reset these to their defaults before attempting a compliant run. See the Appendix for the list of Control.config parameters.
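Before a compliant attempt, a quick comparison against the Fixed Settings defaults shown in the Appendix can catch a forgotten experimental value. This sketch is a convenience check only: it covers a subset of the fixed settings (not the WORKLOAD_SCORE_REF_VALUE entries), the function name is hypothetical, and the Run and Reporting Rules, not this script, define compliance.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): report Fixed Settings that differ from the
# Appendix defaults. Exits non-zero if any difference is found.
check_fixed_settings() {
  awk '
    BEGIN {
      bad = 0
      want["templateVersion"] = "1.0"; want["runTime"] = "10800"
      want["doAIO"] = "1"; want["doHammerDB"] = "1"; want["doBigBench"] = "1"
      want["phase2StartTime"] = "5400"; want["phase3StartTime"] = "7200"
      want["collectSupportFiles"] = "1"
    }
    # Skip comments and lines without an assignment
    /^[ \t]*#/ || index($0, "=") == 0 { next }
    {
      name = substr($0, 1, index($0, "=") - 1); gsub(/[ \t]/, "", name)
      val = substr($0, index($0, "=") + 1); gsub(/^[ \t]+|[ \t]+$/, "", val)
      if ((name in want) && val != want[name]) {
        printf "%s = %s (default %s)\n", name, val, want[name]; bad = 1
      }
    }
    END { exit bad }' "$1"
}
```

Usage: check_fixed_settings "$CP_BIN/Control.config"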

6.1 Change measurement durations¶

As you debug and tune your environment to achieve maximum load, you might want to reduce measurement time. For example, to run a one-hour measurement, you can set:

runTime = 3600

phase2StartTime = 1800

phase3StartTime = 2400
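The one-hour values above keep the same proportions as the defaults (phase2StartTime at one half and phase3StartTime at two thirds of runTime). If you want a different shortened duration, the sketch below scales both start times the same way; proportional scaling is one convenient choice for experiments, not a requirement, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): scale the phase start times with runTime,
# using the default ratios 5400/10800 and 7200/10800.
scaled_phases() {
  local run_time="$1"
  echo "runTime = ${run_time}"
  echo "phase2StartTime = $(( run_time * 5400 / 10800 ))"
  echo "phase3StartTime = $(( run_time * 7200 / 10800 ))"
}
```

For example, scaled_phases 3600 prints the three settings listed above.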

6.2 Disable/Enable workloads¶

You can specify which workloads to run. For example, if you want to run only the BigBench workload, you can set:

doAIO = 0

doHammerDB = 0

doBigBench = 1

6.3 Disable/Enable support file collection¶

After running a measurement, the harness creates an archive of supporting files describing the SUT configuration and measurement parameters. Gathering this post-measurement data can be time-consuming. To disable SUT configuration data collection, set:

collectSupportFiles = 0

Appendix - Control.config¶

The following is the contents of the $CP_BIN/Control.config file:

###########################################################################

# #

# Copyright (c) 2021 Standard Performance Evaluation Corporation (SPEC). #

# All rights reserved. #

# #

###########################################################################

# SPECvirt Datacenter 2021 benchmark control file: 4/3/21

# numTilesPhase1 : Number of Tiles used for Phase1 Throughput

# For full Tiles, use the whole number only (e.g., "5", not "5.0")

# For Partial Tiles, increments of .2 will add workloads in the following order:

# mail=Tilenum.2 , mail+web=Tilenum.4 ,

# mail+web+collab=Tilenum.6 , mail+web+collab+HammerDB=Tilenum.8

# E.g., "numTilesPhase1 = 6.4" means 6 full Tiles plus a partial 7th Tile

# containing only mail + web workloads.

numTilesPhase1 = 1

# numTilesPhase3 : The maximum (total) number of Tiles that will be run on the SUT

# Partial Tiles are allowed for numTilesPhase3. (*See comments for 'numTilesPhase1' above)

numTilesPhase3 = 1

# Virtualization environment running on the SUT (vSphere or RHV)

virtVendor =

# The IP, FQDN, and credentials for the management server (e.g., the vCenter or RHV-M server)

mgmtServerIP =

mgmtServerHostname =

mgmtServerURL =

virtUser =

virtPassword =

# The name and location of the certificate for the management server if needed

virtCert =

# The hostname(s) and credentials for the servers that will be added to the cluster

# during phase 2 of the Measurement Interval (MI). Specify one host for every

# four-node cluster

offlineHost_1 =

#offlineHost_2 =

# Name of the template / appliance to deploy for SUT VMs

templateName =

# Name of the template / appliance to deploy for CLIENT VMs

clientTemplateName =

# Name of the cluster for SUT VMs

cluster =

# Name of the network for SUT VMs

network =

# Name of the cluster for CLIENT VMs

clientCluster =

# Name of the network for CLIENT VMs

clientNetwork =

# Number of host nodes in cluster. Must be a multiple of four

numHosts = 4

# Name of the storage pools used for SUT VMs. If multiple storage pools are used

# for a given workload type, use multiple lines with a single storage pool per line

# and increment the number in brackets. For example:

#

# mailStoragePool[0] = mailPool1

# mailStoragePool[1] = mailPool2

# mailStoragePool[2] = mailPool3

#

# VMs of the same workload type will be evenly placed across all defined storage pools

# for that workload, based on the tile number.

#

# *Note, if different storage names are listed, they must be accessible by all hosts using the

# same access method(s)

mailStoragePool[0] =

webStoragePool[0] =

collabStoragePool[0] =

HDBstoragePool[0] =

BBstoragePool[0] =

# Name of the storagePool for CLIENT VMs. If multiple storage pools are used, use

# multiple lines with a single storage pool per line and increment the number in

# brackets. For example:

#

# clientStoragePool[0] = clientPool1

# clientStoragePool[1] = clientPool2

#

# Client VMs will be evenly distributed across all defined storage pools, based on the tile number.

clientStoragePool[0] =

# MAC Address Format - The MAC address, in particular, the first 3 sets of hex values

# to prefix MAC addresses used for deployments

# By default, the three hex prefixes will be 42:44:49. Change these if needed.

# (e.g., If vNICs in your network environment already contain vNICs using these values )

MACAddressPrefix = 42:44:49:

# IP address prefix (for example '172.23.' starts with Tile 1 IP's at 172.23.1.1

# *Note, the format is <pre>.<fix>.<tileNumber>.<workload/VM type>

IPAddressPrefix = 172.23.

# Disk device to be used for secondary data disk on workload VMs. Device is assumed to be in

# /dev within the VM's guest environment. Partitions and/or filesystems will be configured on

# this device and any existing data will be overwritten. Examples of vmDataDiskDevice are:

# "sdb" (default) and "vdb".

vmDataDiskDevice = sdb

################

# The number of vCPUs assigned to the workload VMs during their deployment

################

# Number of vCPUs assigned to the departmental (mail, web, collab1, collab2) workloads VMs.

# (Default value = 4 vCPUs for each workload)

vCpuAIO = 4

# Number of vCPUs assigned to HammerDB Appserver. (Default = 2 vCPUs)

vCpuHapp = 2

# Number of vCPUs assigned to HammerDB Database. (Default = 8 vCPUs)

vCpuHdb = 8

# Number of vCPUs assigned to BigBench NameNode. (Default = 8 vCPUs)

vCpuBBnn = 8

# Number of vCPUs assigned to BigBench DataNode. (Default = 8 vCPUs)

vCpuBBdn = 8

# Number of vCPUs assigned to BigBench Database. (Default = 8 vCPUs)

vCpuBBdb = 8

# Number of vCPUs assigned to the Client VMs. (Default = 4)

vCpuClient = 4

# Delay to allow the "startRun.sh" script (which collects pre-testrun SUT information) to complete before starting the testrun.

startDelay = 180

# Delay before starting each workload (*mailDelay will always use zero for first Tile,

# regardless of value below)

mailDelay = 30

webDelay = 30

collab1Delay = 30

collab2Delay = 1

HDBDelay = 30

bigBenchDelay = 60

# DelayFactors provide a mechanism to extend the workload deployment delay by an increasing

# amount per Tile.

# Example, if "mailDelay = 30" and "mailDelayFactor = 1.2", for Tile2 mail VM, the applied

# mailDelay would be 36. For Tile3 mail VM, the mailDelay would be 43 (36 * 1.2 = 43), etc...

mailDelayFactor = 1.0

webDelayFactor = 1.0

collab1DelayFactor = 1.0

collab2DelayFactor = 1.0

HDBDelayFactor = 1.0

bigBenchDelayFactor = 1.0

# Debug level for CloudPerf director & workload agents (logs reported in /export/home/cp/log

# ... and /export/home/cp/results/specvirt/<date-time_run-ID>)

# Valid values are: [1..9]

debugLevel = 3

# Benchmarkers can create automation scripts that run immediately prior or after the

# test to collect the information while the testbed is still in the same configuration

# as it was during the test. Scripts located in

# /export/home/cp/config/workloads/specvirt/HV_Operations/$virtVendor

# User-specific automation script to run at beginning of test

initScript = "userInit.sh"

# User-specific automation script to run at end of test

exitScript = "userExit.sh"

############# Fixed Settings ########################################

# Changing any of these values will result in a non-compliant test!!!

#

# Revision of benchmark template image

templateVersion = 1.0

# Duration of the Throughput Measurement Interval

runTime = 10800

# Set doAIO = 0 to remove web, mail, and collab workloads from the Throughput MI

doAIO = 1

# Set doHammerDB = 0 to remove HammerDB load from the Throughput MI

doHammerDB = 1

# Set doBigBench = 0 to remove BigBench load from the Throughput MI

doBigBench = 1

phase2StartTime = 5400

phase3StartTime = 7200

WORKLOAD_SCORE_REF_VALUE[0] = 1414780

WORKLOAD_SCORE_REF_VALUE[1] = 978246

WORKLOAD_SCORE_REF_VALUE[2] = 1476590

WORKLOAD_SCORE_REF_VALUE[3] = 2576560

WORKLOAD_SCORE_REF_VALUE[4] = 45

# Set collectSupportFiles = 0 to not collect the supporting tarball files

collectSupportFiles = 1

Copyright 2021 Standard Performance Evaluation Corporation (SPEC). All rights reserved.