SPECstorage™ Solution 2020_ai_image Result

Copyright © 2016-2020 Standard Performance Evaluation Corporation


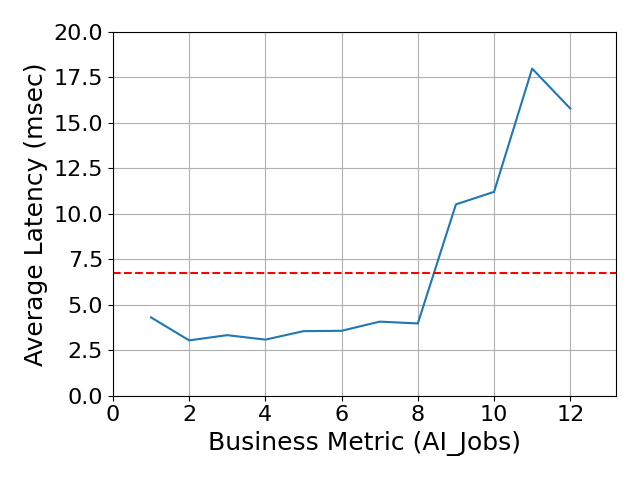

| SPEC Storage(TM) Subcommittee | SPECstorage Solution 2020_ai_image = 12 AI_Jobs |
|---|---|
| Reference Submission | Overall Response Time = 6.77 msec |

| Reference Submission | |
|---|---|
| Tested by | SPEC Storage(TM) Subcommittee |
| Hardware Available | 12/2020 |
| Software Available | 12/2020 |
| Date Tested | 12/2020 |
| License Number | 0 |
| Licensee Locations | Newton, Massachusetts |

The SPEC Storage(TM) Solution 2020 Reference Solution consists of a FreeNAS server (Xeon, 256 GB), based on a 20-core Xeon processor, connected to an 8-node VMware cluster using the NFSv3 protocol over a 10GbE Ethernet network.

The FreeNAS server provides IO/s from 2 file systems and 2 volumes. The FreeNAS server is running in the iozone.org lab. It uses a dual socket storage processor and full 12 Gb SAS back end connectivity, and includes 40 SAS 10020 RPM Disk Drives 8 TB - SAS 12Gb/s. The storage server is running FreeOS 11.3-RELEASE-p14 using an NFSv3 server and 2 10GbE Ethernet network ports.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 1 | NFSv3 freenas | FreeNAS | FreeNAS | 1: 200GB SSD device for logging; 2: 2x200GB SSD devices (ARC cache); 3: 2xSAS controllers; 4: 40x450GB SAS 12Gb/s, 10k RPM HDD |
| 2 | 1 | Load Generator Server1 | Generic | Dual Socket AMD Server | Generic Server - Dual Quad core Opteron 3GHz-32GB-2x10GbE |
| 3 | 1 | Load Generator Server2 | Generic | Dual Socket Xeon Server | Generic Server - Dual Xeon E5-2603V3 1.7GHz-32GB-2x10GbE |
| 4 | 1 | Ethernet Switch | Quanta | Quanta | 24 Port 10GbE Ethernet Switch |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | FreeNAS | FreeOS | 11.3-RELEASE-p14 | Software running on the FreeNAS hardware and external Acme disk enclosure |
| 2 | VMware Hypervisor | ESXi Server | 5.5 (VM version 9) | The 2 ESX servers were running the VMware ESXi 5.5 Hypervisor and were configured with 4 VMs each |
| 3 | Load Generators | Linux | CentOS 7.2 64-bit | Each of the 2 VMware ESXi 5.5 Hypervisors was configured to run 4 VMs running Linux, for a total of 8 VMs |

Load Generator Virtual Machines

| Parameter Name | Value | Description |
|---|---|---|
| MTU | 1500 | Maximum Transmission Unit |

The MTU of the ports on the Load Generators, Network Switch and Storage Servers was left at the default, MTU=1500.

N/A

| Parameter Name | Value | Description |
|---|---|---|
| N/A | N/A | N/A |

No software tunings were used - default NFS mount options were used.

No opaque services were in use.

| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | FreeNAS server: 10kRPM 450GB SAS Drives | RAID1 | Yes | 40 |
| 2 | Virtual Machine: 16GB SAS Drives for OS | None | Yes | 8 |

| Number of Filesystems | 2 |
|---|---|
| Total Capacity | 8 TiB |
| Filesystem Type | NFSv3 |

The file systems were created on the FreeNAS using all default parameters. The VMs' storage was configured on the ESXi servers and shared from a single 450GB SAS 10K RPM HDD.

Every two drives were mirrored (across enclosures), then aggregated into a pool. The two filesystems came from this pool.
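As a rough cross-check of the mirrored layout against the reported total capacity, the arithmetic can be sketched as follows. This is an illustration only: it assumes the 40 drives of 450GB listed in the storage table, decimal gigabytes (10^9 bytes), two-way mirrors, and no filesystem or pool metadata overhead, none of which the report states explicitly.

```python
# Rough capacity estimate for a pool built from two-way mirrors.
# Assumptions (not stated in the report): decimal GB, no metadata overhead.
DRIVE_COUNT = 40          # from the storage table
DRIVE_SIZE_GB = 450       # from the storage table
MIRROR_WIDTH = 2          # "every two drives were mirrored"

mirror_pairs = DRIVE_COUNT // MIRROR_WIDTH
usable_bytes = mirror_pairs * DRIVE_SIZE_GB * 10**9
usable_tib = usable_bytes / 2**40

print(f"{mirror_pairs} mirrored pairs, about {usable_tib:.2f} TiB before overhead")
```

Under these assumptions the estimate lands slightly above the reported 8 TiB, which is what one would expect once overhead is subtracted.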

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 10 Gbit on Storage Node | 2 | 2 ports were connected and used for the test |
| 2 | 10 Gbit on Load Generators | 4 | 2 ports were connected on each ESXi server and shared among the 8 VMs using an internal Private network |

All the load generator VM clients were connected to an internal SW switch inside each ESXi server. This internal switch was connected to the 10 GbE switch.

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | Quanta LB6M | 10 GbE Ethernet ESXi Servers to Storage nodes interconnect | 24 | 6 | The VMs were connected to the 10 Gbit switch using a Private network on the ESXi |

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 2 | Xeon | CPU | Xeon Processor v4 with 20 cores | NFSv3 Server |
| 2 | 8 | vCPU | CPU | Dual AMD Opteron v4 each with 4 cores | Load Generators |

One ESXi server used dual socket Xeon processors and the other used dual socket AMD Opteron processors. Each Load Generator VM was configured with 2 cores, without hyperthreading.

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| FreeNAS main memory | 256 | 1 | V | 256 |
| NVRAM module | 16 | 1 | NV | 16 |
| Load generator VM memory | 6 | 8 | V | 48 |
| Grand Total Memory Gibibytes | | | | 320 |
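The grand total in the memory table is the sum of size times instance count over the rows. A minimal sketch of that arithmetic, using only the values from the table (the variable names are illustrative):

```python
# Rows from the memory table: (description, size in GiB, number of instances)
memory_rows = [
    ("FreeNAS main memory", 256, 1),
    ("NVRAM module", 16, 1),
    ("Load generator VM memory", 6, 8),
]

# Per-row totals, then the grand total reported at the bottom of the table.
row_totals = {name: size * count for name, size, count in memory_rows}
grand_total_gib = sum(row_totals.values())

print(row_totals)
print("Grand total GiB:", grand_total_gib)
```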

The FreeNAS storage controller has main memory that is used for the operating system and for caching filesystem data. It uses a 241 GiB partition to provide stable storage for writes that have not yet been written to disk.

The system under test consisted of one FreeNAS storage node with 2 10GbE ports on a dual port NIC, both connected to a 10GbE switch. There were 8 load generating clients, each connected to the same Quanta Ethernet switch as the FreeNAS storage node.

None

Each load generating client mounted both file systems from the storage node using NFSv3. The order of the clients as used by the benchmark was distributed round-robin, so that as the load scaled up, each additional process used the next file system. This ensured an even distribution of load over the network and across the 2 file systems configured on the storage node.
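The round-robin placement described above can be illustrated with a short sketch. The client names, file system names, and the `assign` helper are hypothetical, and the ordering of the mount list is an assumption made for illustration; the report does not give the actual client list or mount paths used by the benchmark.

```python
from collections import Counter

# Hypothetical names: the report does not list hostnames or export paths.
clients = [f"client{i}" for i in range(1, 9)]   # 8 load generator VMs
filesystems = ["fs1", "fs2"]                    # 2 NFSv3 file systems

# Assumed mount list order: file systems alternate from entry to entry.
mount_points = [(c, fs) for c in clients for fs in filesystems]

def assign(num_processes):
    """Place each process on the next mount point, wrapping around."""
    return [mount_points[i % len(mount_points)] for i in range(num_processes)]

placement = assign(12)
per_filesystem = Counter(fs for _, fs in placement)
print(per_filesystem)
```

With this ordering, consecutive processes alternate between the two file systems, so any even number of processes splits evenly across them.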

None.

None.

Generated on Wed Dec 16 14:09:30 2020 by SpecReport
