SPEC SFS®2014_vdi Result

Copyright © 2016-2019 Standard Performance Evaluation Corporation

|

SPEC SFS®2014_vdi ResultCopyright © 2016-2019 Standard Performance Evaluation Corporation |

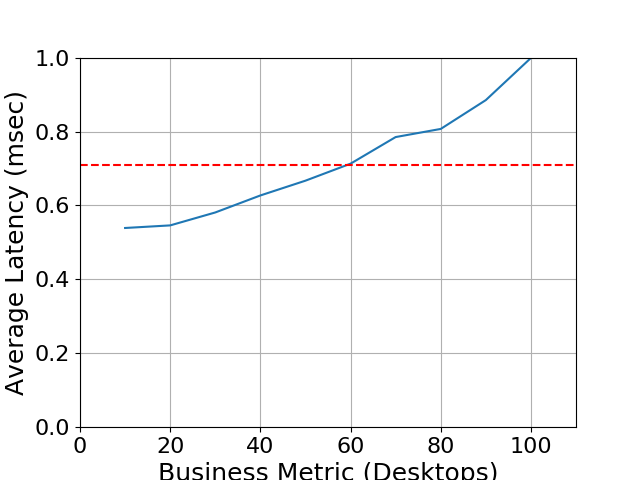

| SPEC SFS(R) Subcommittee | SPEC SFS2014_vdi = 100 Desktops |
|---|---|
| Reference submission | Overall Response Time = 0.71 msec |


| Reference submission | |
|---|---|
| Tested by | SPEC SFS(R) Subcommittee |
| Hardware Available | 12/2017 |
| Software Available | 12/2017 |
| Date Tested | 12/2017 |
| License Number | 55 |
| Licensee Locations | Hopkinton, Massachusetts |

The SPEC SFS(R) 2014 Reference Solution consists of a Dell PowerEdge R630 - rack-mountable - Xeon E5-2640V4 2.4 GHz - 96 GB - 600 server, based on the Intel Xeon E5-2640V4 2.4 GHz 8-core processor, connected to a 24-node VMware cluster using the NFSv3 protocol over an Ethernet network.

The PowerEdge R630 server serves I/O from 8 file systems and 8 volumes. The PowerEdge R630 accelerates business and increases speed-to-market by providing scalable, high-performance storage for mission-critical and highly transactional applications. Based on the Intel Xeon E5-2600 processor family, the PowerEdge R630 uses an all-SSD flash storage architecture for block and file storage and supports the native NAS and iSCSI protocols. Each PowerEdge R630 server uses a single-socket storage processor and full 12 Gb SAS back-end connectivity, and is configured with 12 Dell enterprise-class 2.5" 1.6 TB solid state drives using Serial Attached SCSI 12Gb/s drive technology, plus four 10GbE Ethernet ports. The storage server runs Linux SLES12SP1 #2 SMP with the Linux NFSv3 server. A second PowerEdge R630 is used in active-passive mode as a failover server.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 2 | Storage Cluster Node | Dell | PowerEdge R630 | PowerEdge R630 - rack-mountable - single Xeon E5-2640V4 2.4 GHz-96GB-4x10GbE |
| 2 | 4 | Load Generator Servers | Dell | Dual Socket PowerEdge R430 Server | PowerEdge R430 - Dual Xeon E5-2603V3 1.7GHz-24GB-2x10GbE |
| 3 | 2 | Ethernet Switch | Dell | PowerConnect 8024 | PowerConnect 8024 24 Port 10Gb Ethernet Switch (10GBASE-T) |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | PowerEdge R630 | Linux | SLES12SP1 #2 SMP | The PowerEdge R630 servers ran SUSE Linux Enterprise Server (SLES) 12 SP1 |
| 2 | VMware Hypervisor | ESXi Server | 5.1 (VM version 9) | The 4 PowerEdge R430 servers ran the VMware ESXi 5.1 hypervisor, each configured with 6 VMs |
| 3 | Load Generators | Linux | CentOS 7.2 64-bit | Each VMware ESXi 5.1 hypervisor ran 6 Linux VMs, for a total of 24 VMs |

Load Generator Virtual Machine

| Parameter Name | Value | Description |
|---|---|---|
| MTU | 9000 | Maximum Transmission Unit |

The port MTU on the load generators, the network switch, and the storage servers was set to the jumbo-frame value of 9000 bytes.
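On a Linux host (the storage nodes run SLES and the load generator VMs run CentOS), the jumbo-frame setting can be spot-checked by reading each interface's `mtu` file under `/sys/class/net`. The Python sketch below is illustrative only: the function name `interfaces_not_at_mtu` and its `skip` default are assumptions, and it checks host interfaces only, not the physical switch or the ESXi internal switch.

```python
from __future__ import annotations

from pathlib import Path

JUMBO_MTU = 9000  # MTU value reported for this configuration


def interfaces_not_at_mtu(
    sysfs_net: str = "/sys/class/net",
    expected: int = JUMBO_MTU,
    skip: tuple[str, ...] = ("lo",),
) -> dict[str, int]:
    """Return {interface name: MTU} for every interface whose MTU differs from `expected`.

    Hypothetical helper: reads the per-interface `mtu` attribute exposed by Linux sysfs.
    Loopback is skipped by default because it normally uses a much larger MTU.
    """
    mismatched: dict[str, int] = {}
    for iface_dir in sorted(Path(sysfs_net).iterdir()):
        if iface_dir.name in skip:
            continue
        mtu_file = iface_dir / "mtu"
        if not mtu_file.is_file():
            continue  # entry is not an interface directory (e.g. a plain control file)
        mtu = int(mtu_file.read_text().strip())
        if mtu != expected:
            mismatched[iface_dir.name] = mtu
    return mismatched
```

On a host configured as described, `interfaces_not_at_mtu()` should return an empty dict; any entries it does return name interfaces still running at a different MTU, such as the Ethernet default of 1500.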

| Parameter Name | Value | Description |
|---|---|---|
| n/a | n/a | n/a |

No software tuning was applied; the default NFS mount options were used.

No opaque services were in use.

| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | PowerEdge R630 server: 1.6TB SAS SSD Drives | RAID5 3+1 | Yes | 12 |
| 2 | Virtual Machine: 18GB SAS Drives | None | Yes | 24 |
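As a rough capacity check, assume that each storage node's 12 SSDs form three independent RAID5 3+1 groups (three data drives plus one parity drive per group; the report does not state the group layout). The space available before file system overhead is then:

```latex
\begin{aligned}
C_{\text{raw}}   &= 12 \times 1.6\ \text{TB} = 19.2\ \text{TB} \\
C_{\text{RAID5}} &= \tfrac{12}{4}\ \text{groups} \times 3\ \text{data drives} \times 1.6\ \text{TB}
                  = \tfrac{3}{4}\, C_{\text{raw}} = 14.4\ \text{TB}
\end{aligned}
```

The 8 TB total file system capacity reported for this configuration fits within this bound.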

| Number of Filesystems | Total Capacity | Filesystem Type |
|---|---|---|
| 8 | 8 TB | NFSv3 |

The file system was created on the PowerEdge R630 using all default parameters.

The VMs' storage was configured on the ESXi server and shared from a single 600GB SAS 15K RPM HDD.

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 10 Gbit on Storage Node | 4 | 4 ports were connected and used for the test; 4 were on standby |
| 2 | 10 Gbit on Load Generators | 8 | 2 ports were connected on each ESXi server and shared by its 6 VMs through an internal private network |

All load generator VM clients were connected to an internal software switch inside each ESXi server, and this internal switch was in turn connected to the 10 GbE switch.
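The port counts above give the nominal aggregate line rate on each side of the 10 GbE switch. The figures below ignore Ethernet, IP, and NFS protocol overhead, and the last line assumes the 24 VMs are spread evenly over the 8 load-generator uplinks, which the report does not state explicitly:

```latex
\begin{aligned}
B_{\text{storage}} &= 4\ \text{ports} \times 10\ \text{Gb/s} = 40\ \text{Gb/s} \\
B_{\text{clients}} &= 4\ \text{ESXi servers} \times 2\ \text{ports} \times 10\ \text{Gb/s} = 80\ \text{Gb/s} \\
\frac{24\ \text{VMs}}{8\ \text{uplinks}} &= 3\ \text{VMs per uplink}
\end{aligned}
```

On these nominal figures, the four active ports on the storage node are the narrower side of the network path.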

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | PowerConnect 8024 | 10 GbE Ethernet ESXi Servers to Storage nodes interconnect | 48 | 24 | The VMs were connected to the 10 Gbit switch through a private network on the ESXi servers |

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 1 | Xeon E5-2640 v4 | CPU | Intel Xeon Processor E5-2640 v4 with 8 cores | NFSv3 Server |
| 2 | 8 | Xeon E5-2600 v4 | CPU | Intel Xeon Processor E5-2600 v4 with 6 cores | Load Generators |

The 4 ESXi servers (PowerEdge R430) used dual-socket E5-2600 v4 processors, and each load generator VM was configured with 2 cores without hyperthreading.
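A quick consistency check on the processor figures, assuming the 8 listed processors are the two sockets in each of the 4 R430 servers: the physical cores and the cores assigned to the VMs match one to one, both overall and per host.

```latex
\begin{aligned}
N_{\text{cores}} &= 4\ \text{servers} \times 2\ \text{sockets} \times 6\ \text{cores} = 48 \\
N_{\text{vCPU}}  &= 24\ \text{VMs} \times 2\ \text{cores} = 48 \\
N_{\text{vCPU, per host}} &= 6\ \text{VMs} \times 2 = 12 = 2\ \text{sockets} \times 6\ \text{cores}
\end{aligned}
```

On these numbers, the VM cores do not oversubscribe the physical cores.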

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| PowerEdge R630 main memory | 96 | 1 | V | 96 |
| PowerEdge R630 NVRAM module with Vault-to-SSD | 160 | 1 | NV | 160 |
| Load generator VM memory | 4 | 24 | V | 96 |
| Grand Total Memory Gibibytes | | | | 352 |
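The grand total is the sum of the three rows, each row being its size multiplied by its instance count:

```latex
\begin{aligned}
M_{\text{total}} &= 96 \times 1 + 160 \times 1 + 4 \times 24 \\
                 &= 96 + 160 + 96 = 352\ \text{GiB}
\end{aligned}
```

Each R630 row counts a single instance, even though two R630 nodes appear in the hardware table.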

Each PowerEdge R630 storage controller has main memory that is used for the operating system and for caching filesystem data. It uses a 160GiB partition of one SSD device to provide stable storage for writes that have not yet been written to disk.

Each PowerEdge R630 storage node is equipped with an NVRAM journal that stores writes destined for the local SSDs. In the event of a power loss, the NVRAM contents are mirrored to a partition of an SSD flash device.
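The write path described above can be illustrated with a toy Python model: a write is acknowledged once it is in the NVRAM journal, and on power loss the journal contents are copied to the SSD vault partition. The replay of the vault to disk on restart is an assumption not stated in the report, and the class `NvramJournal` and its methods are hypothetical; they do not represent the actual Dell or SUSE implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class NvramJournal:
    """Toy model of an NVRAM write journal with vault-to-SSD protection (hypothetical)."""

    journal: list[tuple[int, bytes]] = field(default_factory=list)  # acknowledged, not yet on the SSDs
    vault: list[tuple[int, bytes]] = field(default_factory=list)    # stands in for the SSD vault partition
    disk: dict[int, bytes] = field(default_factory=dict)            # stands in for the file system SSDs

    def write(self, block: int, data: bytes) -> bool:
        """Record the write in the journal; it is acknowledged (True) once journaled."""
        self.journal.append((block, data))
        return True

    def flush(self) -> None:
        """Destage journaled writes to the SSDs in order, then clear the journal."""
        for block, data in self.journal:
            self.disk[block] = data
        self.journal.clear()

    def power_loss(self) -> None:
        """Copy the journal contents to the vault; the volatile copy is lost."""
        self.vault.extend(self.journal)
        self.journal.clear()

    def recover(self) -> None:
        """Assumed restart step: replay vaulted writes to the SSDs, then clear the vault."""
        for block, data in self.vault:
            self.disk[block] = data
        self.vault.clear()
```

Calling `write`, then `power_loss`, then `recover` leaves the acknowledged write on disk even though it was never flushed, which is the property that the stable-storage claim relies on.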

The system under test consisted of 2 PowerEdge R630 storage nodes, 1U each, configured as active-standby. Each storage node was configured with four 10GbE network interfaces, provided by a 4-port NIC and connected to a 10GbE switch. There were 24 load-generating clients, each connected to the same PowerConnect 8024 Ethernet switch as the PowerEdge R630 storage nodes.


Each load-generating client mounted all 8 file systems using NFSv3. Because there is a single active storage node, all clients mounted the 8 file systems from that node. The benchmark's client order was distributed round-robin so that, as the load scaled up, each additional process used the next file system. This ensured an even distribution of load over the network and among the 8 file systems configured on the storage node.
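The round-robin placement can be sketched in Python. This is a hypothetical model, not the benchmark's own code: the 0-based process `k` is assumed to use file system `k mod 8`, so each additional process uses the next file system and the per-file-system process counts never differ by more than one.

```python
from __future__ import annotations

from collections import Counter

NUM_FILESYSTEMS = 8  # file systems exported by the single active R630 node


def filesystem_for_process(k: int, num_filesystems: int = NUM_FILESYSTEMS) -> int:
    """Hypothetical round-robin rule: the k-th process (0-based) uses file system k mod N."""
    return k % num_filesystems


def per_filesystem_load(num_processes: int, num_filesystems: int = NUM_FILESYSTEMS) -> list[int]:
    """Count how many of the first `num_processes` processes land on each file system."""
    counts = Counter(filesystem_for_process(k, num_filesystems) for k in range(num_processes))
    return [counts[i] for i in range(num_filesystems)]


if __name__ == "__main__":
    # As the load scales up, the busiest and idlest file systems differ by at most one process.
    for n in (8, 20, 96):
        load = per_filesystem_load(n)
        print(n, load, max(load) - min(load))
```

For example, `per_filesystem_load(20)` returns `[3, 3, 3, 3, 2, 2, 2, 2]`: the first four file systems receive the extra processes, and the spread stays at one.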


Generated on Wed Mar 13 16:56:56 2019 by SpecReport
