SPEC SFS®2014_vda Result

Copyright © 2016-2019 Standard Performance Evaluation Corporation


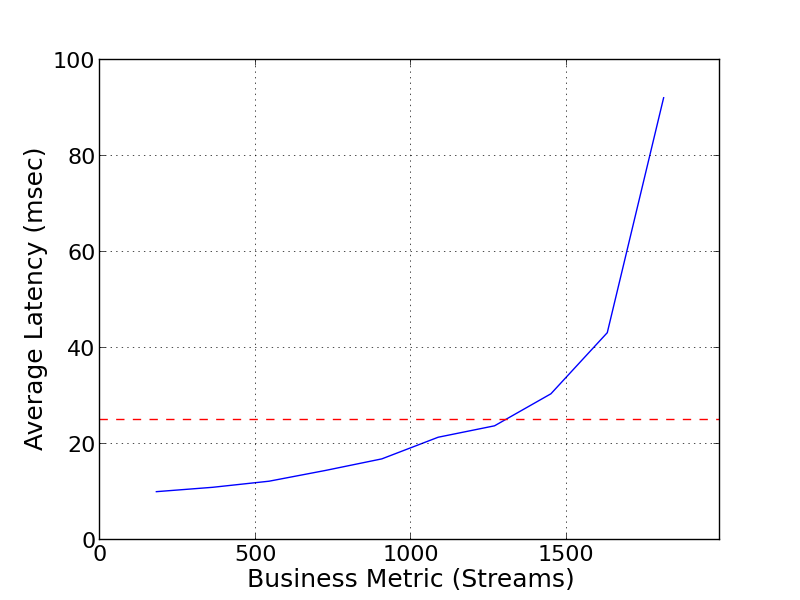

| Cisco Systems Inc. | SPEC SFS2014_vda = 1810 Streams |
|---|---|
| Cisco UCS S3260 with IBM Spectrum Scale 4.2.2 | Overall Response Time = 24.95 msec |

Cisco UCS S3260 with IBM Spectrum Scale 4.2.2

| Tested by | Hardware Available | Software Available | Date Tested | License Number | Licensee Locations |
|---|---|---|---|---|---|
| Cisco Systems Inc. | November 2016 | January 2017 | July 2017 | 9019 | San Jose, CA USA |

Cisco UCS Integrated Infrastructure

Cisco Unified Computing System (UCS) is the first truly unified data center platform that combines industry-standard, x86-architecture servers with network and storage access into a single system. The system is intelligent infrastructure that is automatically configured through integrated, model-based management to simplify and accelerate deployment of all kinds of applications. The system's x86-architecture rack and blade servers are powered exclusively by Intel(R) Xeon(R) processors and enhanced with Cisco innovations. These innovations include built-in virtual interface cards (VICs), leading memory capacity, and the capability to abstract and automatically configure the server state. Cisco's enterprise-class servers deliver world-record performance to power mission-critical workloads. Cisco UCS is integrated with a standards-based, high-bandwidth, low-latency, virtualization-aware unified fabric, with a new generation of Cisco UCS fabric enabling 40 Gbps.

Cisco UCS S3260 Servers

The Cisco UCS S3260 Storage Server is a high-density modular storage server designed to deliver efficient, industry-leading storage for data-intensive workloads. The S3260 is a modular chassis with dual server nodes (two servers per chassis) and up to 60 large-form-factor (LFF) drives in a 4RU form factor.

IBM Spectrum Scale

Spectrum Scale provides unified file and object software-defined storage for high-performance, large-scale workloads. Spectrum Scale includes the protocols, services and performance required for Technical Computing, Big Data, HDFS and business-critical content repositories. IBM Spectrum Scale provides world-class storage management with extreme scalability, flash-accelerated performance, and automatic policy-based storage tiering from flash through disk to tape, reducing storage costs up to 90% while improving security and management efficiency in big data and analytics environments.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 5 | Server Chassis | Cisco | UCS S3260 Chassis | The Cisco UCS S3260 Chassis can support up to two server nodes and 56 drives, or one server node and 60 drives, in a compact 4-rack-unit (4RU) form factor, with 4 x Cisco UCS 1050W AC Power Supply |
| 2 | 10 | Server node, Spectrum Scale Node | Cisco | UCS S3260 M4 Server Node | Cisco UCS S3260 M4 servers, each with: 2 x Intel Xeon processors E5-2680 v4 (28 cores per node), 256 GB of memory (16x16GB 2400MHz DIMMs), Cisco UCS C3000 RAID Controller with 4G RAID Cache |
| 3 | 10 | System IO Controller with VIC 1300 | Cisco | S3260 SIOC | Cisco UCS S3260 SIOC with integrated Cisco UCS VIC 1300, one per server node |
| 4 | 140 | Storage HDD, 8TB NL-SAS 7200 RPM | Cisco | UCS HD8TB | 8TB 7200 RPM drives for storage, fourteen per server node. Note that a fully populated chassis with two server nodes can hold 28 drives per server node |
| 5 | 1 | Blade Server Chassis | Cisco | UCS 5108 | The Cisco UCS 5108 Blade Server Chassis features flexible bay configurations for blade servers. It can support up to eight half-width blades, up to four full-width blades, or up to two full-width double-height blades in a compact 6-rack-unit (6RU) form factor |
| 6 | 8 | Blade Server, Spectrum Scale Node (Clients) | Cisco | UCS B200 M4 | UCS B200 M4 Blade Servers, each with: 2 x Intel Xeon processors E5-2660 v3 (20 cores per node), 256 GB of memory (16x16GB 2133MHz DIMMs) |
| 7 | 2 | Fabric Extender | Cisco | UCS 2304 | Cisco UCS 2300 Series Fabric Extenders can support up to four 40-Gbps unified fabric uplinks per fabric extender to the Fabric Interconnect. |
| 8 | 8 | Virtual Interface Card | Cisco | UCS VIC 1340 | The Cisco UCS Virtual Interface Card (VIC) 1340 is a 2-port, 40 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE)-capable modular LAN on motherboard (mLOM) mezzanine adapter. |
| 9 | 2 | Fabric Interconnect | Cisco | UCS 6332 | Cisco UCS 6300 Series Fabric Interconnects support line-rate, lossless 40 Gigabit Ethernet and FCoE connectivity. |
| 10 | 1 | Cisco Nexus 40Gbps Switch | Cisco | Cisco Nexus 9332PQ | The Cisco Nexus 9332PQ Switch has 32 x 40 Gbps Quad Small Form Factor Pluggable Plus (QSFP+) ports. All ports are line rate, delivering 2.56 Tbps of throughput in a 1-rack-unit (1RU) form factor. |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | Spectrum Scale Nodes | Spectrum Scale File System | 4.2.2 | The Spectrum Scale File System is a distributed file system that runs on the Cisco UCS S3260 servers to form a cluster. The cluster allows for the creation and management of single namespace file systems. |
| 2 | Spectrum Scale Nodes | Operating System | Red Hat Enterprise Linux 7.2 for x86_64 | The operating system on the Spectrum Scale nodes was 64-bit Red Hat Enterprise Linux version 7.2 |

Spectrum Scale Nodes

| Parameter Name | Value | Description |
|---|---|---|
| scaling_governor | performance | Sets the CPU frequency scaling governor to performance |
| Intel Turbo Boost | Enabled | Enables the processor to run above its base operating frequency |
| Intel Hyper-Threading | Enabled | Enables multiple threads to run on each core, improving parallelization of computations performed |
| mtu | 9000 | Sets the Maximum Transmission Unit (MTU) to 9000 for improved throughput |

The main part of the hardware configuration was handled by Cisco UCS Manager (UCSM). It supports the creation of "Service Profiles", in which all the tuning parameters are specified with their respective values at the start. These service profiles are then replicated across servers and applied during deployment.
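The operating-system-level settings in the table above (the `scaling_governor` and `mtu` rows) can be spot-checked on a running Linux node. The following is a minimal sketch rather than the procedure used for this submission: it assumes the standard Linux sysfs layout (`/sys/devices/system/cpu/cpuN/cpufreq/scaling_governor` and `/sys/class/net/<interface>/mtu`), and the function names are hypothetical. Turbo Boost and Hyper-Threading are firmware settings and are not covered here.

```python
from pathlib import Path

# Expected values taken from the hardware tuning table in this report.
EXPECTED_GOVERNOR = "performance"
EXPECTED_MTU = 9000


def read_governors(sysfs_root="/sys"):
    """Return {cpu_name: governor} from the Linux cpufreq sysfs files."""
    cpu_dir = Path(sysfs_root) / "devices/system/cpu"
    governors = {}
    for gov_file in sorted(cpu_dir.glob("cpu[0-9]*/cpufreq/scaling_governor")):
        # gov_file is .../cpuN/cpufreq/scaling_governor, so parent.parent is cpuN.
        governors[gov_file.parent.parent.name] = gov_file.read_text().strip()
    return governors


def read_mtu(interface, sysfs_root="/sys"):
    """Return the MTU of a network interface as an integer."""
    return int((Path(sysfs_root) / "class/net" / interface / "mtu").read_text())


def check_node(interface, sysfs_root="/sys"):
    """Return a list of mismatches; an empty list means the node matches."""
    problems = []
    for cpu, governor in read_governors(sysfs_root).items():
        if governor != EXPECTED_GOVERNOR:
            problems.append(f"{cpu}: governor is {governor}, expected {EXPECTED_GOVERNOR}")
    mtu = read_mtu(interface, sysfs_root)
    if mtu != EXPECTED_MTU:
        problems.append(f"{interface}: MTU is {mtu}, expected {EXPECTED_MTU}")
    return problems
```

Calling `check_node("<interface>")` with the name of the 40GbE interface on a given node returns an empty list when both settings match. The `sysfs_root` argument exists only so the functions can be pointed at a test directory.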

Spectrum Scale Nodes

| Parameter Name | Value | Description |
|---|---|---|
| maxMBpS | 10000 | Specifies an estimate of how many megabytes of data can be transferred per second into or out of a single node |
| pagepool | 64G | Specifies the size of the cache on each node |
| maxblocksize | 2M | Specifies the maximum file system block size |
| maxFilesToCache | 11M | Specifies the number of inodes to cache for recently used files that have been closed |
| workerThreads | 1024 | Controls the maximum number of concurrent file operations at any one instant, as well as the degree of concurrency for flushing dirty data and metadata in the background and for prefetching data and metadata |
| pagepoolMaxPhysMemPct | 90 | Percentage of physical memory that can be assigned to the page pool |

The configuration parameters were set using the "mmchconfig" command on one of the nodes in the cluster. The nodes used mostly default tuning parameters. A discussion of Spectrum Scale tuning can be found in the official documentation for the mmchconfig command and on the IBM developerWorks wiki.
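As an illustration of how the values in the table map onto the `mmchconfig` command line, the sketch below builds a single `attribute=value,...` invocation from them and prints it without executing anything. The report does not say whether the parameters were set in one call or several, so the single-command form and the helper name `mmchconfig_command` are assumptions. Some attributes may only take effect after the Spectrum Scale daemon is restarted; the mmchconfig documentation is the authority on that.

```python
import shlex

# Values copied from the Spectrum Scale tuning table in this report.
TUNING = {
    "maxMBpS": "10000",
    "pagepool": "64G",
    "maxblocksize": "2M",
    "maxFilesToCache": "11M",
    "workerThreads": "1024",
    "pagepoolMaxPhysMemPct": "90",
}


def mmchconfig_command(params):
    """Build an 'mmchconfig attr=value,...' argument list (does not run it)."""
    if not params:
        raise ValueError("no parameters given")
    assignments = ",".join(f"{name}={value}" for name, value in params.items())
    return ["mmchconfig", assignments]


# Print the command for review instead of executing it.
print(shlex.join(mmchconfig_command(TUNING)))
```

Keeping the values in one dictionary makes it straightforward to compare them against the current cluster configuration before applying any change.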

There were no opaque services in use.

| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | Two 480GB Boot SSDs per server, used to store the operating system for each Spectrum Scale node. | RAID-1 | Yes | 20 |
| 2 | Fourteen 8TB Large Form Factor (LFF) HDDs per server node, used as Network Shared Disks (NSDs) for Spectrum Scale. The drives were configured in JBOD mode. | None | Yes | 140 |

| Number of Filesystems | Total Capacity | Filesystem Type |
|---|---|---|
| 1 | 1019 TiB | Spectrum Scale File System |

A single Spectrum Scale file system was created with: 2 MiB block size for data and metadata, 4 KiB inode size. The file system was spread across all of the Network Shared Disks (NSDs). Each client node mounted the file system. The file system parameters reflect values that might be used in a typical streaming environment. On each node, the operating system was hosted on the xfs filesystem.

Each UCS S3260 server node in the cluster was populated with fourteen 8TB Large Form Factor (LFF) HDDs. All the drives were configured in JBOD mode. Each UCS S3260 Chassis formed one failure group (FG) in the Spectrum Scale file system.

The cluster used a single-tier architecture. The Spectrum Scale nodes performed both file and block level operations. Each node had access to all of the NSDs, so any file operation on a node was translated to a block operation and serviced by the NSD server.
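The reported total capacity of 1019 TiB is consistent with the drive count if each drive is taken as 8 TB in decimal units, as listed in the bill of materials. The short check below is a sketch under that assumption (exactly 8 x 10^12 bytes per drive, no file system overhead deducted); the function name is hypothetical.

```python
SERVER_NODES = 10              # S3260 M4 server nodes
DRIVES_PER_NODE = 14           # 8TB LFF HDDs per server node
DRIVE_SIZE_BYTES = 8 * 10**12  # assumption: 8 TB decimal per drive


def raw_capacity_tib(nodes, drives_per_node, drive_bytes):
    """Raw capacity in TiB (2**40 bytes), before any file system overhead."""
    return nodes * drives_per_node * drive_bytes / 2**40


total_tib = raw_capacity_tib(SERVER_NODES, DRIVES_PER_NODE, DRIVE_SIZE_BYTES)
print(f"{SERVER_NODES * DRIVES_PER_NODE} drives, {total_tib:.1f} TiB raw")
# prints "140 drives, 1018.6 TiB raw", which rounds to the reported 1019 TiB
```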

Other Notes (about BOOT SSDs):

Per Server: 2 x 480GiB physical drives, Protection: RAID-1, UsableGiB: 480GiB

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 40GbE Network | 44 | Each S3260 server node connects to the Fabric Interconnect over a 40Gb link. Thus there are ten 40Gb links to each Fabric Interconnect (configured in active-standby mode). The Cisco UCS Blade chassis connects to each Fabric Interconnect with four 40Gb links, with MTU=9000 |

The two Cisco UCS 6332 fabric interconnects function in HA mode (active-standby) as 40 Gbps Ethernet switches.

Cisco UCS S3260 Server nodes (NSD Servers): Each Cisco UCS S3260 Chassis has two server nodes. Each S3260 server node has an S3260 SIOC with an integrated VIC 1300. This provides 40G connectivity for each server node to each Fabric Interconnect (configured as active-standby).

Cisco UCS B200 M4 Blade servers (NSD Clients): Each of the Cisco UCS B200 M4 blade servers comes with a Cisco UCS Virtual Interface Card 1340. The two-port card supports 40 GbE and FCoE. Physically, the card connects to the UCS 2304 fabric extenders via internal chassis connections. The eight total ports from the fabric extenders connect to the UCS 6332 fabric interconnects. The 40G links on the B200 M4 server blades were bonded in the operating system to provide enhanced throughput for the NSD clients (traffic across the Fabric Interconnects went through the Cisco Nexus 9332PQ switch).

Detailed description of the ports used:

2 x Cisco UCS 6332 in active/standby configuration.

Total ports for each 6332 = (10 x S3260) + (4 x Blade chassis) + (4 x Uplinks) = 18 ports per 6332

For the Nexus 9332 (upstream switch), 4 ports are connected from each 6332, so total used ports = 8

Overall, total used ports = (18 x 2) + 8 = 44
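The port arithmetic above can be restated as a small calculation. This is only a sketch that reproduces the counts given in the report; the constant names are chosen for illustration.

```python
FABRIC_INTERCONNECTS = 2        # Cisco UCS 6332, active/standby pair
S3260_LINKS_PER_FI = 10         # one 40Gb link per S3260 server node
BLADE_CHASSIS_LINKS_PER_FI = 4  # links from the UCS 5108 blade chassis
UPLINKS_PER_FI = 4              # links to the upstream Nexus 9332PQ

# Ports used on each fabric interconnect.
ports_per_fi = S3260_LINKS_PER_FI + BLADE_CHASSIS_LINKS_PER_FI + UPLINKS_PER_FI

# Ports used on the Nexus switch: one per uplink from each fabric interconnect.
nexus_ports = UPLINKS_PER_FI * FABRIC_INTERCONNECTS

total_ports = ports_per_fi * FABRIC_INTERCONNECTS + nexus_ports

print(f"per 6332: {ports_per_fi}, Nexus 9332: {nexus_ports}, total: {total_ports}")
# prints "per 6332: 18, Nexus 9332: 8, total: 44"
```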

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | Cisco UCS 6332 #1 | 40 GbE | 32 | 18 | The Cisco UCS 6332 Fabric Interconnect forms the management and communication backbone for the servers. |
| 2 | Cisco UCS 6332 #2 | 40 GbE | 32 | 18 | The Cisco UCS 6332 Fabric Interconnect forms the management and communication backbone for the servers. |
| 3 | Cisco Nexus 9332 | 40 GbE | 32 | 8 | Cisco Nexus 9332PQ used as an upstream switch |

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 20 | CPU | Spectrum Scale server nodes | Intel Xeon CPU E5-2680 v4 @ 2.40GHz 14-core | Spectrum Scale nodes (server nodes) |
| 2 | 16 | CPU | Spectrum Scale client nodes | Intel Xeon CPU E5-2660 v3 @ 2.60GHz 10-core | Spectrum Scale client nodes, load generator |

Each Spectrum Scale node in the system (client and server nodes) had two physical processors. Each processor had multiple cores, as listed in the table above.

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| System memory in each Spectrum Scale node | 256 | 18 | V | 4608 |

Grand Total Memory Gibibytes: 4608

Spectrum Scale reserves a portion of the physical memory in each node for file data and metadata caching. A portion of the memory is also reserved for buffers used for node to node communication.
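The processor and memory totals in the tables above follow directly from the node counts. The sketch below recomputes them from the per-node figures in this report; the `NodeGroup` class is a hypothetical helper introduced only for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    name: str
    nodes: int
    sockets_per_node: int
    cores_per_socket: int
    memory_gib: int

    @property
    def total_cores(self):
        return self.nodes * self.sockets_per_node * self.cores_per_socket

    @property
    def total_memory_gib(self):
        return self.nodes * self.memory_gib


# Figures taken from the bill of materials and the processor/memory tables.
GROUPS = [
    NodeGroup("S3260 M4 server nodes", 10, 2, 14, 256),
    NodeGroup("B200 M4 client nodes", 8, 2, 10, 256),
]

for group in GROUPS:
    print(f"{group.name}: {group.total_cores} cores, {group.total_memory_gib} GiB")

grand_total_memory = sum(group.total_memory_gib for group in GROUPS)
print(f"Grand total memory: {grand_total_memory} GiB")  # 18 nodes x 256 GiB = 4608
```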

The two fabric interconnects are configured in active-standby mode, providing High Availability (HA) to the entire cluster and keeping the cluster available in case of link failures. The storage consists of 8TB Large Form Factor (LFF) HDDs, 14 per S3260 server node, with IBM Spectrum Scale providing the file system on top of them. Stable writes and commit operations in Spectrum Scale are not acknowledged until the NSD server receives an acknowledgment of write completion from the underlying storage system.

The solution under test was a Cisco UCS S3260 with IBM Spectrum Scale cluster, a solution well suited for streaming environments. The NSD server nodes were S3260 servers, and UCS B200 M4 blade servers (fully populated in the blade server chassis) were used as load generators for the benchmark. Each node was connected over a 40Gb link to the two fabric interconnects (configured in HA mode).

The WARMUP_TIME for the benchmark was 600 seconds.

The 5 Cisco UCS S3260 chassis with two server nodes each were used for the storage (NSD servers). These servers were populated with 14 8TB LFF HDDs each. The 8 Cisco UCS B200 M4 blades were the load generators for the benchmark (client nodes). Each load generator had access to the single namespace Spectrum Scale file system. The benchmark accessed a single mount point on each load generator. The data requests to and from disk were serviced by the Spectrum Scale server nodes. All nodes were connected with 40Gb link connectivity across the cluster.

Cisco UCS is a trademark of Cisco Systems Inc. in the USA and/or other countries.

IBM and IBM Spectrum Scale are trademarks of International Business Machines Corp., registered in many jurisdictions worldwide.

Intel and Xeon are trademarks of the Intel Corporation in the U.S. and/or other countries.

None

Generated on Wed Mar 13 16:48:49 2019 by SpecReport
