SPEC SFS®2014_vda Result

Copyright © 2016-2019 Standard Performance Evaluation Corporation


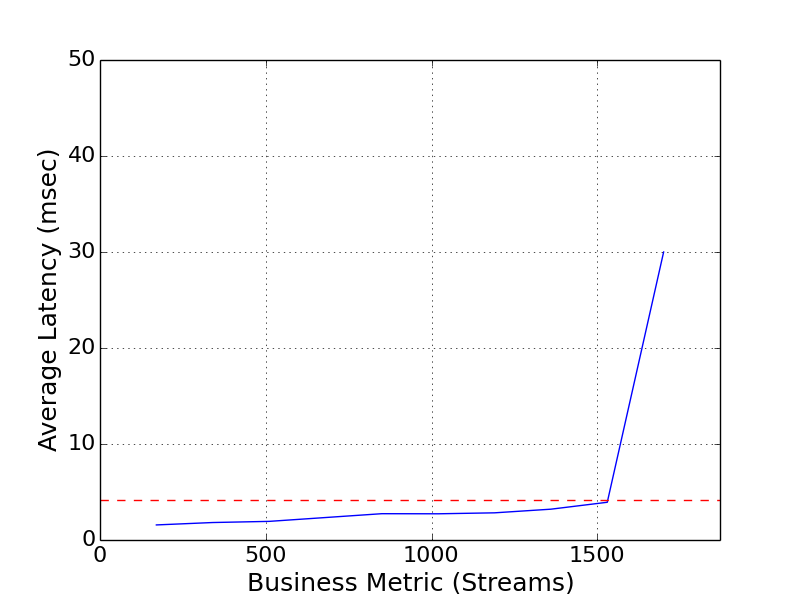

| IBM Corporation | SPEC SFS2014_vda = 1700 Streams |
|---|---|
| IBM DeepFlash 150 with Spectrum Scale 4.2.1 | Overall Response Time = 4.12 msec |


| IBM DeepFlash 150 with Spectrum Scale 4.2.1 | |
|---|---|
| Tested by | IBM Corporation |
| Hardware Available | July 2016 |
| Software Available | July 2016 |
| Date Tested | August 2016 |
| License Number | 11 |
| Licensee Locations | Almaden, CA USA |

IBM DeepFlash 150 provides an essential big-data building block for petabyte-scale, cost-constrained, high-density and high-performance storage environments. It delivers the response times of an all-flash array with extraordinarily competitive cost benefits. DeepFlash 150 is an ideal choice to accelerate big data systems and other workloads requiring high performance and sustained throughput.

IBM Spectrum Scale provides unified file and object software-defined storage for high-performance, large-scale workloads on-premises or in the cloud. When deployed together, DeepFlash 150 and IBM Spectrum Scale create a storage solution that provides optimal workload flexibility, an extraordinarily low cost-to-performance ratio, and the data lifecycle management and storage services required by enterprises grappling with high-volume, high-velocity data challenges.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 2 | DeepFlash 150 | IBM | 9847-IF2 | Each DeepFlash 150 includes 64 Flash module storage slots. In this particular model, half of the slots are filled, each with an 8 TB Flash module. |
| 2 | 12 | Spectrum Scale Nodes | Lenovo | x3650-M4 | Spectrum Scale client and server nodes. Lenovo model number 7915D3x. |
| 3 | 2 | InfiniBand Switch | Mellanox | SX6036 | 36-port non-blocking managed 56 Gbps InfiniBand/VPI SDN switch. |
| 4 | 1 | Ethernet Switch | SMC Networks | SMC8150L2 | 50-port 10/100/1000 Mbps Ethernet switch. |
| 5 | 20 | InfiniBand Adapter | Mellanox | MCX456A-F | 2-port PCI FDR InfiniBand adapter used in the Spectrum Scale client nodes. |
| 6 | 4 | InfiniBand Adapter | Mellanox | MCX354A-FCBT | 2-port PCI FDR InfiniBand adapter used in the Spectrum Scale server nodes. |
| 7 | 2 | Host Bus Adapter | Avago Technologies | SAS 9300-8e | 2-port PCI 12 Gbps SAS adapter used in one of the Spectrum Scale server nodes for attachment to the DeepFlash 150. |
| 8 | 2 | Host Bus Adapter | Avago Technologies | SAS 9305-16e | 4-port PCI 12 Gbps SAS adapter used in the other Spectrum Scale server node for attachment to the DeepFlash 150. |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | Spectrum Scale Nodes | Spectrum Scale File System | 4.2.1 | The Spectrum Scale File System is a distributed file system that runs on both the server nodes and client nodes to form a cluster. The cluster allows for the creation and management of single namespace file systems. |
| 2 | Spectrum Scale Nodes | Operating System | Red Hat Enterprise Linux 7.2 for x86_64 | The operating system on the client nodes was 64-bit Red Hat Enterprise Linux version 7.2. |
| 3 | DeepFlash 150 | Storage Server | 2.1.2 | The software runs on the IBM DeepFlash 150 and is installed with the included DFCLI tool. |

Spectrum Scale Client Nodes

| Parameter Name | Value | Description |
|---|---|---|
| verbsPorts | mlx5_0/1/1 mlx5_1/1/2 | InfiniBand device names, port numbers, and fabric numbers. |
| verbsRdma | enable | Enables InfiniBand RDMA transfers between Spectrum Scale client nodes and server nodes. |
| verbsRdmaSend | 1 | Enables the use of InfiniBand RDMA for most Spectrum Scale daemon-to-daemon communication. |
| Hyper-Threading | disabled | Disables the use of two threads per core in the CPU. The setting was changed in the BIOS menus of the client nodes. |

Spectrum Scale Server Nodes

| Parameter Name | Value | Description |
|---|---|---|
| verbsPorts | mlx4_0/1/1 mlx4_0/2/2 mlx4_1/1/1 mlx4_1/2/2 | InfiniBand device names, port numbers, and fabric numbers. |
| verbsRdma | enable | Enables InfiniBand RDMA transfers between Spectrum Scale client nodes and server nodes. |
| verbsRdmaSend | 1 | Enables the use of InfiniBand RDMA for most Spectrum Scale daemon-to-daemon communication. |
| scheduler | noop | Specifies the I/O scheduler used for the DeepFlash 150 block devices. |
| nr_requests | 32 | Specifies the I/O block layer request descriptors per request queue for DeepFlash 150 block devices. |
| Hyper-Threading | disabled | Disables the use of two threads per core in the CPU. The setting was changed in the BIOS menus of the server nodes. |

The first three configuration parameters were set using the "mmchconfig" command on one of the nodes in the cluster. The verbs settings in the table above allow for efficient use of the InfiniBand infrastructure. The settings determine when data are transferred over IP and when they are transferred using the verbs protocol. The InfiniBand traffic went through two switches, item 3 in the Bill of Materials. The block device parameters "scheduler" and "nr_requests" were set on the server nodes with echo commands for each DeepFlash device. The parameters can be found at "/sys/block/DEVICE/queue/{scheduler,nr_requests}", where DEVICE is the block device name. The last parameter disabled Hyper-Threading on the client and server nodes.
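The block-device settings described above amount to one sysfs write per parameter per DeepFlash device. The Python sketch below shows that pattern under stated assumptions: the device names (`sdb`, `sdc`) are hypothetical placeholders, and the `dry_run` and `sysfs_root` parameters are illustrative additions so the writes can be previewed or redirected to a scratch directory instead of the live `/sys/block` tree.

```python
from pathlib import Path

# Values from the server-node tuning table. Device names passed in are
# hypothetical placeholders for the DeepFlash 150 block devices.
QUEUE_SETTINGS = {"scheduler": "noop", "nr_requests": "32"}


def tune_block_devices(devices, sysfs_root="/sys/block", dry_run=True):
    """Plan (and optionally perform) writes to SYSFS_ROOT/DEVICE/queue/NAME.

    Each write is the equivalent of `echo VALUE > /sys/block/DEVICE/queue/NAME`.
    With dry_run=True nothing is written; the planned (path, value) pairs
    are returned either way.
    """
    planned = []
    for dev in devices:
        queue_dir = Path(sysfs_root) / dev / "queue"
        for name, value in QUEUE_SETTINGS.items():
            target = queue_dir / name
            planned.append((str(target), value))
            if not dry_run:
                # On a real system this requires root privileges.
                target.write_text(value + "\n")
    return planned


if __name__ == "__main__":
    # Preview the commands for two hypothetical devices without writing.
    for path, value in tune_block_devices(["sdb", "sdc"]):
        print(f"echo {value} > {path}")
```

The sketch keeps dry-run as the default so that previewing the planned writes is the safe path; sysfs settings of this kind do not persist across reboots, so in practice they would be reapplied at boot.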

Spectrum Scale - All Nodes

| Parameter Name | Value | Description |
|---|---|---|
| ignorePrefetchLUNCount | yes | Specifies that only maxMBpS, and not the number of LUNs, should be used to dynamically allocate prefetch threads. |
| maxblocksize | 1M | Specifies the maximum file system block size. |
| maxMBpS | 10000 | Specifies an estimate of how many megabytes of data can be transferred per second into or out of a single node. |
| maxStatCache | 0 | Specifies the number of inodes to keep in the stat cache. |
| numaMemoryInterleave | yes | Enables memory interleaving on NUMA-based systems. |
| pagepoolMaxPhysMemPct | 90 | Specifies the percentage of physical memory that can be assigned to the page pool. |
| scatterBufferSize | 256K | Specifies the size of the scatter buffers. |
| workerThreads | 1024 | Controls the maximum number of concurrent file operations at any one instant, as well as the degree of concurrency for flushing dirty data and metadata in the background and for prefetching data and metadata. |

Spectrum Scale - Server Nodes

| Parameter Name | Value | Description |
|---|---|---|
| nsdBufSpace | 70 | Sets the percentage of the pagepool that is used for NSD buffers. |
| nsdMaxWorkerThreads | 3072 | Sets the maximum number of threads to use for block level I/O on the NSDs. |
| nsdMinWorkerThreads | 3072 | Sets the minimum number of threads to use for block level I/O on the NSDs. |
| nsdMultiQueue | 64 | Specifies the maximum number of queues to use for NSD I/O. |
| nsdThreadsPerDisk | 3 | Specifies the maximum number of threads to use per NSD. |
| nsdThreadsPerQueue | 48 | Specifies the maximum number of threads to use per NSD I/O queue. |
| nsdSmallThreadRatio | 1 | Specifies the ratio of small thread queues to large thread queues. |
| pagepool | 80G | Specifies the size of the cache on each node. On server nodes the page pool is used for NSD buffers. |

Spectrum Scale - Client Nodes

| Parameter Name | Value | Description |
|---|---|---|
| pagepool | 16G | Specifies the size of the cache on each node. |

The configuration parameters were set using the "mmchconfig" command on one of the nodes in the cluster. The parameters listed in the tables above reflect values that might be used in a typical streaming environment with Linux nodes.
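A minimal Python sketch that turns the three tuning tables into `mmchconfig attribute=value[,attribute=value...] [-N nodes]` command lines. The node-class names `nsdNodes` and `clientNodes` are hypothetical (the report does not name its node classes), and the script only prints the commands rather than running them. It also checks one relationship visible in the server table: the per-queue settings multiply out to the same thread count as nsdMaxWorkerThreads.

```python
# Parameter values copied from the tuning tables; the node-class names used
# below ("nsdNodes", "clientNodes") are hypothetical placeholders.
ALL_NODES = {
    "ignorePrefetchLUNCount": "yes",
    "maxblocksize": "1M",
    "maxMBpS": "10000",
    "maxStatCache": "0",
    "numaMemoryInterleave": "yes",
    "pagepoolMaxPhysMemPct": "90",
    "scatterBufferSize": "256K",
    "workerThreads": "1024",
}
SERVER_NODES = {
    "nsdBufSpace": "70",
    "nsdMaxWorkerThreads": "3072",
    "nsdMinWorkerThreads": "3072",
    "nsdMultiQueue": "64",
    "nsdThreadsPerDisk": "3",
    "nsdThreadsPerQueue": "48",
    "nsdSmallThreadRatio": "1",
    "pagepool": "80G",
}
CLIENT_NODES = {"pagepool": "16G"}


def mmchconfig_command(params, nodes=None):
    """Render one 'mmchconfig attr=value[,attr=value...] [-N nodes]' line."""
    cmd = "mmchconfig " + ",".join(f"{k}={v}" for k, v in params.items())
    return cmd + (f" -N {nodes}" if nodes else "")


# 64 queues x 48 threads per queue = 3072, matching nsdMaxWorkerThreads.
assert (int(SERVER_NODES["nsdMultiQueue"]) * int(SERVER_NODES["nsdThreadsPerQueue"])
        == int(SERVER_NODES["nsdMaxWorkerThreads"]))

if __name__ == "__main__":
    for params, nodes in [(ALL_NODES, None),
                          (SERVER_NODES, "nsdNodes"),
                          (CLIENT_NODES, "clientNodes")]:
        print(mmchconfig_command(params, nodes))
```

Leaving out `-N` makes mmchconfig apply a change to every node in the cluster, which is why the all-node parameters carry no node list in this sketch.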

There were no opaque services in use.

| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | 64 LUNs of 7 TB each, from two DeepFlash 150 systems. | Spectrum Scale synchronous replication | Yes | 64 |
| 2 | Mirrored pair of 300 GB 10K HDDs in each Spectrum Scale client node, used to store the OS. | RAID-1 | No | 10 |

| Number of Filesystems | 1 |
|---|---|
| Total Capacity | 245 TiB |
| Filesystem Type | Spectrum Scale File System |

A single Spectrum Scale file system was created with a 1 MiB block size for data and metadata, a 4 KiB inode size, a 32 MiB log size, 2 replicas for data and metadata, and "relatime" access-time updates. The file system was spread across all of the Network Shared Disks (NSDs).
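As a sketch only, the file-system settings above could map onto an `mmcrfs` invocation as follows. The device name `fs1`, the stanza file `nsd.stanza`, and the mount point `/gpfs/fs1` are hypothetical. The flags (`-B` block size, `-i` inode size in bytes, `-L` log size, `-m`/`-M` and `-r`/`-R` for default and maximum metadata and data replicas, `-S relatime`, `-T` mount point) follow the documented `mmcrfs` options, but they should be checked against the Spectrum Scale 4.2.1 command reference before use.

```python
import shlex

# Settings from the paragraph above; device, stanza file and mount point
# are hypothetical placeholders.
FS_OPTIONS = [
    ("-F", "nsd.stanza"),      # stanza file listing the NSDs
    ("-B", "1M"),              # 1 MiB block size for data and metadata
    ("-i", "4096"),            # 4 KiB inode size, given in bytes
    ("-L", "32M"),             # 32 MiB log size
    ("-m", "2"), ("-M", "2"),  # default / maximum metadata replicas
    ("-r", "2"), ("-R", "2"),  # default / maximum data replicas
    ("-S", "relatime"),        # relatime access-time updates
    ("-T", "/gpfs/fs1"),       # mount point
]


def mmcrfs_argv(device="fs1", options=FS_OPTIONS):
    """Build the argument vector for a single mmcrfs call."""
    argv = ["mmcrfs", device]
    for flag, value in options:
        argv += [flag, value]
    return argv


if __name__ == "__main__":
    # shlex.join (Python 3.8+) quotes arguments only when needed.
    print(shlex.join(mmcrfs_argv()))
```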

The client nodes each had an ext4 file system that hosted the operating system.

Each DeepFlash 150 presented 32 JBOF LUNs to one of the server nodes. An NSD was created from each LUN. All of the NSDs attached to the first server node were placed in one failure group, and all of the NSDs attached to the second server node were placed in a second failure group. The file system was configured with 2 data replicas and 2 metadata replicas, and Spectrum Scale places the replicas of a block in different failure groups. Therefore, a copy of all data and metadata was present on each DeepFlash 150.
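The NSD layout described above can be expressed as an NSD stanza file with one `%nsd` stanza per LUN and one failure group per server. The Python sketch below generates such a file; the server names (`server1`, `server2`) and LUN device paths (`/dev/dflun0` to `/dev/dflun31`) are hypothetical, and the stanza keywords (`device`, `nsd`, `servers`, `usage`, `failureGroup`, `pool`) are those of the standard Spectrum Scale NSD stanza format.

```python
# Hypothetical names: "server1"/"server2" and /dev/dflun0 ... /dev/dflun31
# stand in for the real server nodes and DeepFlash 150 LUN devices.
def nsd_stanzas(servers=("server1", "server2"), luns_per_server=32):
    """Emit one %nsd stanza per LUN; each server's LUNs form one failure group.

    With 2 data and 2 metadata replicas, Spectrum Scale keeps the two copies
    of each block in different failure groups, i.e. on different DeepFlash
    150 systems in this layout.
    """
    stanzas = []
    for group, server in enumerate(servers, start=1):
        for lun in range(luns_per_server):
            stanzas.append(
                "%nsd:\n"
                f"  device=/dev/dflun{lun}\n"
                f"  nsd={server}_nsd{lun}\n"
                f"  servers={server}\n"
                "  usage=dataAndMetadata\n"
                f"  failureGroup={group}\n"
                "  pool=system"
            )
    return "\n\n".join(stanzas)


if __name__ == "__main__":
    # Show the first stanza; the full text would be written to a stanza file.
    print(nsd_stanzas().split("\n\n")[0])
```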

The cluster used a two-tier architecture. The client nodes perform file-level operations. The data requests are transmitted to the server nodes. The server nodes perform the block-level operations. In Spectrum Scale terminology, the load generators are NSD clients and the server nodes are NSD servers. The NSDs were the storage devices specified when creating the Spectrum Scale file system.

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 1 GbE cluster network | 12 | Each node connects to a 1 GbE administration network with MTU=1500 |
| 2 | FDR InfiniBand cluster network | 28 | Client nodes have 2 FDR links, and each server node has 4 FDR links to a shared FDR IB cluster network |

The 1 GbE network was used for administrative purposes. All benchmark traffic flowed through the Mellanox SX6036 InfiniBand switches. Each client node had two active InfiniBand ports. Each server node had four active InfiniBand ports. The two InfiniBand ports on each client node were on separate FDR fabrics for RDMA connections between nodes.

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | SMC 8150L2 | 10/100/1000 Mbps Ethernet | 50 | 12 | The default configuration was used on the switch. |
| 2 | Mellanox SX6036 #1 | FDR InfiniBand | 36 | 14 | The default configuration was used on the switch. |
| 3 | Mellanox SX6036 #2 | FDR InfiniBand | 36 | 14 | The default configuration was used on the switch. |
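The port counts in the network and switch tables follow from the node counts (10 client nodes and 2 server nodes), assuming each node splits its FDR ports evenly across the two SX6036 switches:

$$
\begin{aligned}
\text{FDR ports} &= 10 \times 2 + 2 \times 4 = 28 \\
\text{FDR ports per SX6036} &= 28 / 2 = 14 \\
\text{1 GbE ports} &= 10 + 2 = 12
\end{aligned}
$$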

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 20 | CPU | Spectrum Scale client nodes | Intel Xeon CPU E5-2630 v2 @ 2.60GHz 6-core | Spectrum Scale client, load generator, device drivers |
| 2 | 4 | CPU | Spectrum Scale server nodes | Intel Xeon CPU E5-2630 v2 @ 2.60GHz 6-core | Spectrum Scale NSD server, device drivers |

Each of the Spectrum Scale client nodes had 2 physical processors. Each processor had 6 cores with one thread per core.

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| Spectrum Scale node system memory | 128 | 12 | V | 1536 |

Grand Total Memory Gibibytes: 1536

In the client nodes, Spectrum Scale reserves a portion of the physical memory for file data and metadata caching. In the server nodes, a portion of the physical memory is reserved for NSD buffers. A portion of the memory is also reserved for buffers used for node-to-node communication.
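Using the pagepool, nsdBufSpace, and pagepoolMaxPhysMemPct values from the tuning tables, and assuming the "80G" and "16G" pagepool values denote GiB, the memory split per node works out as follows:

$$
\begin{aligned}
\text{Total memory} &= 12 \times 128\ \text{GiB} = 1536\ \text{GiB} \\
\text{Server pagepool share} &= 80 / 128 = 62.5\% \le 90\% \ (\texttt{pagepoolMaxPhysMemPct}) \\
\text{Server NSD buffers} &= 0.70 \times 80\ \text{GiB} = 56\ \text{GiB} \ (\texttt{nsdBufSpace}) \\
\text{Client pagepool share} &= 16 / 128 = 12.5\%
\end{aligned}
$$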

Stable writes and commit operations in Spectrum Scale are not acknowledged until the NSD server receives an acknowledgment of write completion from the underlying storage system, which in this case is the DeepFlash 150. The DeepFlash 150 does not have a cache, so writes are acknowledged once the data has been written to the flash cards.

The solution under test was a Spectrum Scale cluster optimized for streaming environments. The NSD client nodes were also the load generators for the benchmark. The benchmark was executed from one of the client nodes. All of the Spectrum Scale nodes were connected to a 1 GbE switch and two FDR InfiniBand switches. Each DeepFlash 150 was connected to a single server node via four 12 Gbps SAS connections. Each server node had 2 SAS adapters. One server node had two Avago SAS 9300-8e adapters, and the other server node had two Avago SAS 9305-16e adapters.


The 10 Spectrum Scale client nodes were the load generators for the benchmark. Each load generator had access to the single-namespace Spectrum Scale file system. The benchmark accessed a single mount point on each load generator. In turn, each of the mount points corresponded to a single shared base directory in the file system. The NSD clients processed the file operations, and the data requests to and from disk were serviced by the Spectrum Scale server nodes.

IBM, IBM Spectrum Scale, and IBM DeepFlash 150 are trademarks of International Business Machines Corp., registered in many jurisdictions worldwide.

Intel and Xeon are trademarks of the Intel Corporation in the U.S. and/or other countries.

Mellanox is a registered trademark of Mellanox Ltd.


Generated on Wed Mar 13 16:52:34 2019 by SpecReport
