SPECsfs2008_nfs.v3 Result

IBM Corporation : IBM Scale Out Network Attached Storage, Version 1.2

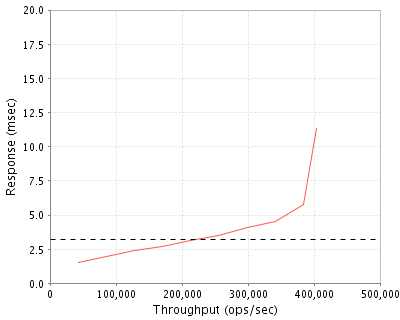

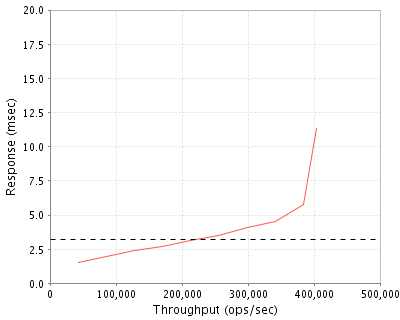

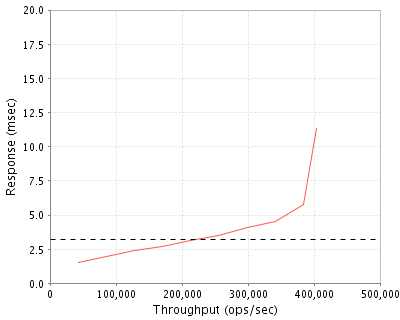

SPECsfs2008_nfs.v3 = 403326 Ops/Sec (Overall Response Time = 3.23 msec)

Performance

| Throughput (ops/sec) | Response (msec) |
|---:|---:|
| 42508 | 1.5 |
| 85093 | 2.0 |
| 127276 | 2.4 |
| 170410 | 2.7 |
| 213185 | 3.1 |
| 254902 | 3.5 |
| 298717 | 4.1 |
| 340996 | 4.5 |
| 384590 | 5.8 |
| 403326 | 11.3 |
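The headline result pairs peak throughput with a single overall response time. The sketch below recomputes that figure from the table, assuming (this is our assumption, not a statement from this report) that it is the area under the throughput/response-time curve, built from trapezoids starting at the origin, divided by peak throughput. The published response times are rounded to 0.1 msec, so the result is only an approximation; with these inputs it comes to about 3.23 msec.

```python
# Sketch: overall response time (ORT) from the reported load points.
# Assumption: ORT = area under the response-time curve (trapezoids,
# anchored at the origin) divided by the peak throughput.

# (throughput in ops/sec, response time in msec), copied from the table
POINTS = [
    (42508, 1.5), (85093, 2.0), (127276, 2.4), (170410, 2.7),
    (213185, 3.1), (254902, 3.5), (298717, 4.1), (340996, 4.5),
    (384590, 5.8), (403326, 11.3),
]

def overall_response_time(points):
    """Return the area under the curve divided by peak throughput (msec)."""
    area = 0.0
    prev_x, prev_y = 0.0, 0.0  # the curve starts at the origin
    for x, y in points:
        area += (x - prev_x) * (prev_y + y) / 2.0  # trapezoid for this segment
        prev_x, prev_y = x, y
    peak = max(x for x, _ in points)
    return area / peak

print(f"{overall_response_time(POINTS):.2f} msec")  # prints 3.23 msec
```

The steep last segment (5.8 to 11.3 msec) contributes a large share of the area, which is why the overall figure sits well above the low-load response times.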

Product and Test Information

| Field | Value |
|---|---|
| Tested By | IBM Corporation |
| Product Name | IBM Scale Out Network Attached Storage, Version 1.2 |
| Hardware Available | May 2011 |
| Software Available | May 2011 |
| Date Tested | February 2011 |
| SFS License Number | 11 |
| Licensee Locations | Tucson, AZ USA |

The IBM Scale Out Network Attached Storage (SONAS) system is IBM's storage system for customers that require large-scale NAS systems with extremely high levels of performance, scalability, and availability. IBM designed the SONAS system for cloud storage and the petabyte age to help customers manage the extreme storage demands of today's digital environment. It supports multi-petabyte file systems with billions of files in each file system. In addition, it scales performance and capacity independently, so either can be added to the system quickly. To help manage information over its entire lifecycle, it includes policy-driven Information Lifecycle Management (ILM) capabilities, including file placement, storage tiering, and hierarchical storage management.

Configuration Bill of Materials

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---:|---|---|---|---|
| 1 | 10 | Interface Node | IBM | 2851-SI2 | SONAS interface node with 144GB of memory |
| 2 | 16 | Storage Node | IBM | 2851-SS1 | SONAS storage node |
| 3 | 1 | Management Node | IBM | 2851-SM1 | SONAS management node |
| 4 | 16 | Storage Controller | IBM | 2851-DR1 | SONAS storage controller |
| 5 | 16 | Storage Expansion Enclosure | IBM | 2851-DE1 | SONAS disk expansion enclosure |
| 6 | 168 | 10-packs of disks | IBM | 2851 FC#1311 | 10-pack of 15K RPM 600 GB SAS HDD |
| 7 | 24 | 10-packs of disks | IBM | 2851 FC#1310 | 10-pack of 15K RPM 450 GB SAS HDD. These drives are contained within a single storage pod. |
| 8 | 1 | Base rack | IBM | 2851-RXA FC#9004 | Base rack for interface nodes and management nodes. The rack also comes preconfigured with a KVM/KVM switch, two 96-port InfiniBand switches, and two 50-port GbE switches. |
| 9 | 4 | Storage expansion rack | IBM | 2851-RXB | The storage expansion racks contain storage nodes, storage controllers, and disk expansion enclosures; each rack can contain up to 480 drives. Each rack is also preconfigured with two 50-port GbE switches. |
| 10 | 10 | Network Adapter | IBM | 2851 FC#1101 | Dual-port 10Gb Converged Network Adapter (CNA). Each interface node was configured with a single CNA. |

Server Software

| Field | Value |
|---|---|
| OS Name and Version | IBM SONAS v1.2 |
| Other Software | none |
| Filesystem Software | IBM SONAS v1.2 |

Server Tuning

| Name | Value | Description |
|---|---|---|
| atime | suppress | Filesystem option to disable updating of file access times. |

Server Tuning Notes

None

Disks and Filesystems

| Description | Number of Disks | Usable Size |
|---|---:|---:|
| 600 GB 15K RPM SAS Disk Drives | 1680 | 843.7 TB |
| 450 GB 15K RPM SAS Disk Drives | 240 | 87.0 TB |
| Each node had 2 mirrored 300GB drives for operating system use and logging. Additionally, the management node has 1 non-mirrored 300GB drive for log files and trace data. None of the SONAS filesystem data or metadata was stored on these disks. | 55 | 8.2 TB |
| Total | 1975 | 939.0 TB |

| Field | Value |
|---|---|
| Number of Filesystems | 1 |
| Total Exported Capacity | 903.8 TB |
| Filesystem Type | IBM SONAS Filesystem |
| Filesystem Creation Options | -b 256K, -j cluster, -R none, --log-striping=yes |
| Filesystem Config | A single file system was striped in 256 KiB blocks across 208 RAID-5 LUNs presented to the file system. |
| Fileset Size | 49805.3 GB |

The storage configuration consisted of 8 storage building blocks. Each storage building block comprised a pair of storage nodes directly attached to 2 storage controllers via two 8 Gbps FC host bus adapters. Each storage node had a path to each storage controller and used multipathing software for failover. Each storage controller plus its expansion enclosure contained 120 3.5-inch disk drives. In each storage controller plus storage expansion enclosure, the drives were configured as thirteen 8+P RAID-5 arrays with 3 global spares. The storage nodes saw the arrays as LUNs, which were then presented to the file system. The file system data and metadata were striped across all of the arrays from all of the storage controllers.
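The building-block description can be cross-checked against the bill of materials and the disk table with a few lines of arithmetic. This is a minimal sketch; the constant names are illustrative, and every value is taken from this report.

```python
# Figures taken from this report; the names are illustrative only.
BUILDING_BLOCKS = 8
CONTROLLERS_PER_BLOCK = 2
DRIVES_PER_CONTROLLER = 120   # storage controller plus its expansion enclosure
ARRAYS_PER_CONTROLLER = 13    # 8+P RAID-5 arrays
DRIVES_PER_ARRAY = 8 + 1      # 8 data drives + 1 parity drive
SPARES_PER_CONTROLLER = 3     # global spares
OS_DRIVES = 55                # node-local drives, not part of the file system

controllers = BUILDING_BLOCKS * CONTROLLERS_PER_BLOCK

# Every drive behind a controller is either in an array or a global spare.
assert ARRAYS_PER_CONTROLLER * DRIVES_PER_ARRAY + SPARES_PER_CONTROLLER == DRIVES_PER_CONTROLLER

luns = controllers * ARRAYS_PER_CONTROLLER      # one LUN per RAID-5 array
data_drives = controllers * DRIVES_PER_CONTROLLER
bom_drives = (168 + 24) * 10                    # 10-packs from the bill of materials
total_drives = data_drives + OS_DRIVES

print(controllers, luns, data_drives, bom_drives, total_drives)  # 16 208 1920 1920 1975
```

The 208 LUNs match the "Filesystem Config" entry, and 1920 + 55 drives match the 1975 total in the disk table.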

Network Configuration

| Item No | Network Type | Number of Ports Used | Notes |
|---|---|---:|---|
| 1 | 10 Gigabit Ethernet | 10 | Each interface node has a dual-port 10 Gb converged network adapter (CNA). The two ports are bonded in active/passive mode and connect directly to a 10 GbE switch. |

Network Configuration Notes

None

Benchmark Network

The interface nodes and the load generators were connected to the same 10 GbE switch.

Processing Elements

| Item No | Qty | Type | Description | Processing Function |
|---|---:|---|---|---|
| 1 | 20 | CPU | Intel Six-core 2.67GHz Xeon X5650 for each interface node | Networking, NFS, CTDB, file system |
| 2 | 32 | CPU | Intel Quad-core 2.40GHz Xeon E5530 for each storage node | File system, storage drivers |
| 3 | 2 | CPU | Intel Quad-core 2.40GHz Xeon E5530 for the management node | Networking, CTDB, file system |
| 4 | 32 | CPU | Intel Quad-core 2.33GHz Xeon E5410 for each storage controller | RAID |

Processing Element Notes

Each interface node has 2 physical processors with 6 processing cores and hyperthreading enabled. Each storage node and the management node have 2 physical processors with 4 processing cores and hyperthreading enabled.
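The CPU quantities for the nodes follow from the node counts and the per-node layout in the note above. The sketch below derives them and the hardware thread count per node; it assumes two hardware threads per core with hyperthreading enabled, and the dictionary layout is illustrative. Storage controllers are left out because the note does not describe their processor layout.

```python
# Sketch: derive CPU counts and hardware threads per node.
# Assumption: hyperthreading gives 2 hardware threads per core.
NODES = {
    # role: (node count, physical processors per node, cores per processor)
    "interface": (10, 2, 6),
    "storage": (16, 2, 4),
    "management": (1, 2, 4),
}
THREADS_PER_CORE = 2

def cpu_summary(nodes=NODES, threads_per_core=THREADS_PER_CORE):
    """Return {role: (total CPUs, hardware threads per node)}."""
    return {
        role: (count * sockets, sockets * cores * threads_per_core)
        for role, (count, sockets, cores) in nodes.items()
    }

for role, (cpus, threads) in cpu_summary().items():
    print(f"{role}: {cpus} CPUs, {threads} hardware threads per node")
# interface: 20 CPUs, 24 hardware threads per node
# storage: 32 CPUs, 16 hardware threads per node
# management: 2 CPUs, 16 hardware threads per node
```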

Memory

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |
|---|---:|---:|---:|---|
| Interface node system memory | 144 | 10 | 1440 | V |
| Storage node system memory | 8 | 16 | 128 | V |
| Management node system memory | 32 | 1 | 32 | V |
| Storage controller system memory, battery backed up | 16 | 16 | 256 | NV |
| Storage controller compact flash | 32 | 16 | 512 | NV |
| Grand Total Memory Gigabytes | | | 2368 | |
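The grand total is the sum of size times instances over all rows. The sketch below reproduces it and also splits it into volatile (V) and nonvolatile (NV) memory; the row tuples are copied from the table, and the function name is illustrative.

```python
# (description, size in GB, instances, nonvolatile?) copied from the table
MEMORY = [
    ("Interface node system memory", 144, 10, False),
    ("Storage node system memory", 8, 16, False),
    ("Management node system memory", 32, 1, False),
    ("Storage controller system memory, battery backed up", 16, 16, True),
    ("Storage controller compact flash", 32, 16, True),
]

def memory_totals(rows):
    """Return (volatile GB, nonvolatile GB, grand total GB)."""
    volatile = sum(size * n for _, size, n, nv in rows if not nv)
    nonvolatile = sum(size * n for _, size, n, nv in rows if nv)
    return volatile, nonvolatile, volatile + nonvolatile

print(memory_totals(MEMORY))  # (1600, 768, 2368)
```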

Memory Notes

The storage controllers have main system memory that is used by the onboard operating system and for caching read and write requests associated with the attached RAID arrays. The storage controllers also have compact flash cards that are used to store the onboard operating system files and boot files, and are used to provide stable storage for modified data that has not been written to disk in the case of a power failure.

Stable Storage

The NFS export had the sync flag specified, so NFS writes and commits are not acknowledged as complete until the data resides in the storage controller (2851-DR1, see diagram) cache. Each storage controller consists of two individual RAID controllers whose caches are mirrored and battery backed up. An incoming write is mirrored to the cache of its partner RAID controller before an acknowledgment is sent back to the storage node. If power fails to only one of the two RAID controllers, the partner RAID controller takes over until power is restored. If power fails to the storage controller as a whole, the onboard battery keeps the contents of main memory active for up to ten minutes, which is enough time to transfer modified data that has not been written to disk to the compact flash card. The compact flash card is partitioned so that there is enough capacity to accommodate all of the modified data in the RAID controller's cache. Once power is restored, the modified data can be written to the disks, and the arrays associated with the RAID controller are placed in write-through mode until the battery has regained enough charge to transfer modified data to the compact flash card in the event of another power failure. When the storage controller receives a write to an array in write-through mode, it does not send an acknowledgment back to the storage node until the data has been written to disk.
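The acknowledgment rules above can be modeled as a small state machine. This is a hypothetical, simplified sketch, not IBM's implementation: the class and method names are invented, the caches hold only modified data not yet on disk, and it covers only the mirrored write path and the whole-controller power-failure and recovery cycle (single RAID controller takeover is not modeled).

```python
from dataclasses import dataclass, field

@dataclass
class StorageControllerModel:
    """Hypothetical model of one storage controller: two RAID controllers with mirrored caches."""
    cache_a: dict = field(default_factory=dict)  # owning RAID controller's cache (dirty data only)
    cache_b: dict = field(default_factory=dict)  # partner RAID controller's mirror
    flash: dict = field(default_factory=dict)    # compact flash backup area
    disk: dict = field(default_factory=dict)     # RAID arrays
    battery_charged: bool = True
    write_through: bool = False

    def write(self, block, data):
        """Handle one write from a storage node; return where the data is when acknowledged."""
        if self.write_through:
            self.disk[block] = data   # no acknowledgment until the data is on disk
            return "disk"
        self.cache_a[block] = data
        self.cache_b[block] = data    # mirror to the partner before acknowledging
        return "mirrored cache"

    def power_failure(self):
        """Battery keeps the cache alive long enough to copy modified data to flash."""
        self.flash.update(self.cache_a)
        self.cache_a.clear()
        self.cache_b.clear()
        self.battery_charged = False

    def power_restored(self):
        """Write preserved data to disk; stay write-through until the battery recharges."""
        self.disk.update(self.flash)
        self.flash.clear()
        self.write_through = not self.battery_charged

    def battery_recharged(self):
        self.battery_charged = True
        self.write_through = False

ctrl = StorageControllerModel()
print(ctrl.write(1, b"x"))   # mirrored cache
ctrl.power_failure()
ctrl.power_restored()
print(ctrl.write(2, b"y"))   # disk (battery still recharging)
ctrl.battery_recharged()
print(ctrl.write(3, b"z"))   # mirrored cache
```

In every path, a write is acknowledged only once it exists in two battery-backed caches or on disk, which is the property the sync export relies on.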

System Under Test Configuration Notes

The system under test consisted of a single SONAS system composed of 10 interface nodes and 16 storage nodes. The interface nodes, storage nodes, and a management node are all connected via a high-availability, 20 Gbps InfiniBand network. The storage nodes are grouped into pairs that connect to storage controllers through 8 Gbps FC host bus adapters. Each interface node had a 10 GbE connection to a Force10 10 GbE switch, the same switch used by the load generators.

Other System Notes

Test Environment Bill of Materials

| Item No | Qty | Vendor | Model/Name | Description |
|---|---:|---|---|---|
| 1 | 14 | IBM | System x3250 M3 | IBM x3250 M3 with 16GB of RAM and RHEL 6 |
| 2 | 1 | Force10 | S2410 | 24-port 10 Gigabit Ethernet switch |

Load Generators

| LG Type Name | LG1 |
|---|---|
| BOM Item # | 1 |
| Processor Name | Intel Xeon X3450 |
| Processor Speed | 2.67 GHz |
| Number of Processors (chips) | 1 |
| Number of Cores/Chip | 4 |
| Memory Size | 16 GB |
| Operating System | Red Hat Enterprise Linux 6 |
| Network Type | 1 QLogic Corp. 10GbE Converged Network Adapter |

Load Generator (LG) Configuration

Benchmark Parameters

| Field | Value |
|---|---|
| Network Attached Storage Type | NFS V3 |
| Number of Load Generators | 14 |
| Number of Processes per LG | 300 |
| Biod Max Read Setting | 2 |
| Biod Max Write Setting | 2 |
| Block Size | AUTO |

Testbed Configuration

| LG No | LG Type | Network | Target Filesystems | Notes |
|---|---|---|---|---|
| 1..14 | LG1 | 1 | /ibm/fs1 | None |

Load Generator Configuration Notes

A single filesystem was mounted on each load generator through every interface node.

Uniform Access Rule Compliance

Each load generator had 300 processes equally distributed across the 10 interface nodes. Each process accessed the same, single filesystem.
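With 14 load generators running 300 processes each, an even spread puts 30 processes from each load generator, and 420 processes in total, on every interface node. The sketch below checks this under an assumed round-robin assignment; the report only says the processes were "equally distributed", and the `assign` function is hypothetical.

```python
# Sketch: spread each load generator's processes evenly over the interface nodes.
# Assumption: round-robin assignment (hypothetical); the report only says
# the processes were equally distributed.
from collections import Counter

LOAD_GENERATORS = 14
PROCS_PER_LG = 300
INTERFACE_NODES = 10

def assign(lg, proc):
    """Hypothetical mapping of (load generator, process) to an interface node.

    Offsetting by lg makes each load generator start on a different node.
    """
    return (lg + proc) % INTERFACE_NODES

per_node = Counter(
    assign(lg, p) for lg in range(LOAD_GENERATORS) for p in range(PROCS_PER_LG)
)
print(sum(per_node.values()))   # 4200
print(set(per_node.values()))   # {420}
```

Because 300 is a multiple of 10, any round-robin starting point gives each interface node exactly 30 processes per load generator.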

Other Notes

Config Diagrams

Generated on Tue Feb 22 17:10:31 2011 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 22-Feb-2011