SPEC SFS®2014_vda Result

Copyright © 2016-2019 Standard Performance Evaluation Corporation


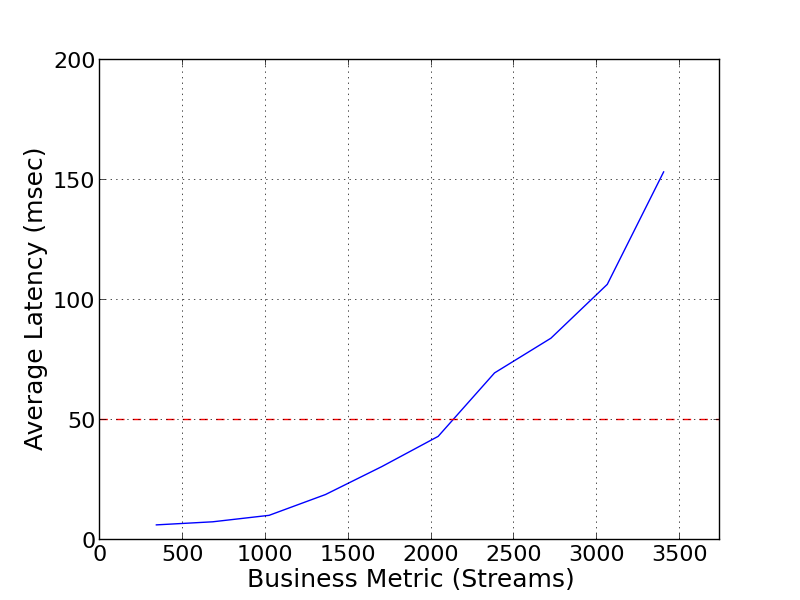

| DDN Storage | SPEC SFS2014_vda = 3400 Streams |

|---|---|

| SFA14KXE with EXAScaler | Overall Response Time = 50.07 msec |


| SFA14KXE with EXAScaler | |

|---|---|

| Tested by | DDN Storage |

| Hardware Available | 08 2018 |

| Software Available | 09 2018 |

| Date Tested | 09 2018 |

| License Number | 4722 |

| Licensee Locations | Santa Clara |

To address the comprehensive needs of High Performance Computing and Analytics environments, the revolutionary DDN SFA14KXE Hybrid Storage Platform is the highest-performance architecture in the industry, delivering up to 60GB/s of throughput and extreme IOPS at low latency with industry-leading density in a single 4U appliance. By integrating the latest high-performance technologies, from silicon to interconnect, memory and flash, along with DDN's SFAOS, a real-time storage engine designed for scalable performance, the SFA14KXE outperforms everything on the market. Leveraging over a decade of leadership in the highest end of Big Data, the EXAScaler Lustre parallel file system solution running on the SFA14KXE provides flexible choices for the most demanding parallel file system performance, coupled with DDN's deep expertise and history of supporting highly efficient, large-scale deployments.

| Item No | Qty | Type | Vendor | Model/Name | Description |

|---|---|---|---|---|---|

| 1 | 1 | Storage Appliance | DDN Storage | SFA14KXE | 2x Dual Intel(R) Xeon(R) CPU E5-2650 v2 @ 2.60GHz |

| 2 | 17 | Network Adapter | Mellanox | ConnectX- VPI (MCX4121A-XCAT) | Dual-port QSFP, EDR IB (100Gb/s) / 100GigE, PCIe 3.0 x16 8GT/s |

| 3 | 1 | Lustre MDS/MGS Server | Supermicro | SYS-1027R-WC1RT | Dual Intel(R) Xeon(R) CPU E5-2667 v3 @ 3.20GHz, 96GB of memory per Server |

| 4 | 16 | EXAScaler Clients | Supermicro | SYS-1027R-WC1RT | Dual Intel(R) Xeon(R) CPU E5-2650 v2 @ 2.60GHz, 128GB of memory per client |

| 5 | 2 | Switch | Mellanox | SB7700 | 36 port EDR InfiniBand switch |

| 6 | 420 | Drives | HGST | HUH721010AL4200 | HGST Ultrastar He10 HUH721010AL4200 - hard drive - 10 TB - SAS 12Gb/s |

| 7 | 1 | Enclosure | DDN | EF4024 | External FC connected HDD JBOD enclosure |

| 8 | 4 | Drives | HGST | HUC156030CSS200 | HGST Ultrastar C15K600 HUC156030CSS200 - hard drive - 300 GB - SAS 12Gb/s |

| 9 | 34 | Drives | Toshiba | AL14SEB030N | AL14SEB-N Enterprise Performance Boot HDD |

| 10 | 1 | FC Adapter | QLogic | QLogic QLE2742 | QLogic 32Gb 2-port FC to PCIe Gen3 x8 Adapter |

| Item No | Component | Type | Name and Version | Description |

|---|---|---|---|---|

| 1 | MDS/MGS Server Nodes | Distributed Filesystem | ES 4.0.0, lustre-2.10.4_ddn4 | Distributed file system software that runs on MDS/MGS node. |

| 2 | OSS Server Nodes | Distributed Filesystem | ES 4.0.0, lustre-2.10.4_ddn4 | Distributed file system software that runs on the virtual OSS VMs. |

| 3 | Client Nodes | Distributed Filesystem | lustre-client-2.10.4_ddn4-1.el7.centos.x86_64 | Distributed file system software that runs on client nodes. |

| 4 | Client Nodes | Operating System | RHEL 7.4 | The Client operating system - 64-bit Red Hat Enterprise Linux version 7.4. |

| 5 | Storage Appliance | Storage Appliance | SFAOS 11.1.0 | SFAOS - real-time storage Operating System designed for scalable performance. |

EXAScaler common configuration

| Parameter Name | Value | Description |

|---|---|---|

| lctl set_param osc.*.max_pages_per_rpc | 16M | maximum number of pages per RPC |

| lctl set_param osc.*.max_dirty_mb | 1024 | maximum amount of outstanding dirty data that wasn't synced by the application |

| lctl set_param llite.*.max_read_ahead_mb | 2048 | maximum amount of cache reserved for readahead cache |

| lctl set_param osc.*.max_rpcs_in_flight | 16 | maximum number of parallel RPCs in flight |

| lctl set_param llite.*.max_read_ahead_per_file_mb | 1024 | max readahead buffer per file |

| lctl set_param osc.*.checksums | 0 | disable data checksums |

For detailed documentation on the supported parameters, see the Lustre manual: for osc.* parameters, https://build.whamcloud.com/job/lustre-manual/lastSuccessfulBuild/artifact/lustre_manual.xhtml#TuningClientIORPCStream; for llite.* parameters, https://build.whamcloud.com/job/lustre-manual/lastSuccessfulBuild/artifact/lustre_manual.xhtml#TuningClientReadahead
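The client-side Lustre settings listed in the table above could be applied with a small wrapper like the one below. This is a minimal sketch, not the procedure used for the submission: the helper names `build_commands` and `apply` are hypothetical, the script assumes `lctl` is on the PATH of a Lustre client, and it defaults to a dry run that only prints the commands.

```python
import shlex
import subprocess

# Parameter/value pairs copied from the "EXAScaler common configuration" table.
CLIENT_TUNING = [
    ("osc.*.max_pages_per_rpc", "16M"),
    ("osc.*.max_dirty_mb", "1024"),
    ("llite.*.max_read_ahead_mb", "2048"),
    ("osc.*.max_rpcs_in_flight", "16"),
    ("llite.*.max_read_ahead_per_file_mb", "1024"),
    ("osc.*.checksums", "0"),
]


def build_commands(tuning=CLIENT_TUNING):
    """Return one `lctl set_param name=value` argument list per table row."""
    return [["lctl", "set_param", f"{name}={value}"] for name, value in tuning]


def apply(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False (needs root)."""
    for cmd in commands:
        # shlex.join quotes the wildcard so the printed line is safe to paste.
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    apply(build_commands())
```

Passing argument lists to `subprocess.run` avoids shell globbing of the `*` wildcards, which `lctl` expands itself.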

EXAScaler Clients

| Parameter Name | Value | Description |

|---|---|---|

| processor.max_cstate | 0 | Defines maximum allowed c-state of CPU. |

| intel_idle.max_cstate | 0 | Defines maximum allowed c-state of CPU in idle mode. |

| idle | poll | set idle mode to polling for maximum performance. |

No special tuning was applied beyond what was mentioned above.
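The three client settings above are Linux kernel boot parameters. One way to confirm that a client booted with them is to compare them against the kernel command line, as sketched below. The function name `missing_boot_params` is hypothetical, and reading `/proc/cmdline` assumes a Linux host.

```python
# Boot parameters from the "EXAScaler Clients" table, as key=value tokens.
EXPECTED = ["processor.max_cstate=0", "intel_idle.max_cstate=0", "idle=poll"]


def missing_boot_params(cmdline, expected=EXPECTED):
    """Return the expected tokens that do not appear in the kernel command line."""
    present = set(cmdline.split())
    return [token for token in expected if token not in present]


if __name__ == "__main__":
    try:
        with open("/proc/cmdline") as fh:
            current = fh.read()
    except OSError:
        current = ""  # not a Linux host: every parameter is reported as missing
    print(missing_boot_params(current))
```

An empty list means all three parameters were present at boot.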

None

| Item No | Description | Data Protection | Stable Storage | Qty |

|---|---|---|---|---|

| 1 | 420 HDDs in SFA14KXE | DCR 8+2p | Yes | 420 |

| 2 | 34 mirrored internal 300GB 10K SAS Boot drives | RAID-1 | No | 34 |

| 3 | 2 mirrored 300GB 15K SAS drives MGS | RAID-1 | Yes | 2 |

| 4 | 2 mirrored 300GB 15K SAS drives MDT | RAID-1 | Yes | 2 |

| Number of Filesystems | 1 |

|---|---|

| Total Capacity | 2863.4 TiB |

| Filesystem Type | Lustre |

A single filesystem with all MDT and OST disks was created; no additional settings were applied.

The SFA14KXE has 8x virtual disks with a 128KB stripe size and 8+2P RAID protection created from 8 DCR storage pools in 51/1 configuration (51 drives per pool with 1 drive worth of spare space).

| Item No | Transport Type | Number of Ports Used | Notes |

|---|---|---|---|

| 1 | 100Gb EDR | 16 | client IB ports |

| 2 | 100Gb EDR | 4 | SFA IB ports |

| 3 | 100Gb EDR | 1 | MDS IB port |

| 4 | 100Gb EDR | 8 | ISL between switches |

| 5 | 16 Gb FC | 2 | direct attached FC to JBOD |

2x 36-port switches in a single InfiniBand fabric, with 8 ISLs between them. Management traffic used IPoIB, data traffic used IB Verbs on the same physical adapter.

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |

|---|---|---|---|---|---|

| 1 | Client/SFA Mellanox SB7700 | 100Gb EDR | 36 | 8 | The default configuration was used on the switch |

| 2 | MDS Mellanox SB7700 | 100Gb EDR | 36 | 27 | The default configuration was used on the switch |

| Item No | Qty | Type | Location | Description | Processing Function |

|---|---|---|---|---|---|

| 1 | 2 | CPU | SFA14KXE | Dual Intel(R) Xeon(R) CPU E5-2650 v2 @ 2.60GHz | Storage unit |

| 2 | 16 | CPU | client nodes | Dual Intel(R) Xeon(R) CPU E5-2650 v2 @ 2.60GHz | Filesystem client, load generator |

| 3 | 1 | CPU | Server nodes | Dual Intel(R) Xeon(R) CPU E5-2667 v3 @ 3.20GHz | EXAScaler Server |

None

| Item No | Qty | Type | Location | Description | Processing Function |

|---|---|---|---|---|---|

| 1 | 4 | CPU | Server nodes | 8 Virtual Cores from SFA Controller | EXAScaler Server |

None

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |

|---|---|---|---|---|

| Memory in SFA System divided for OS and cache | 76 | 2 | NV | 152 |

| Memory in SFA System for VM Memory | 90 | 4 | V | 360 |

| EXAScaler client node system memory | 64 | 16 | V | 1024 |

| EXAScaler MDS/MGS Server node system memory | 96 | 1 | V | 96 |

| Grand Total Memory Gibibytes | | | | 1632 |

The EXAScaler filesystem uses local filesystem cache/memory as its caching mechanism for both clients and OSTs. All resources in the system and local filesystem are available for use by Lustre. In the SFA14KXE, some portion of memory is used for the SFAOS operating system as well as for data caching.
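The grand total in the memory table can be cross-checked by multiplying each row's size by its instance count and summing the results. The short sketch below does this with the values from the table; the row labels are abbreviated and the function name `total_gib` is illustrative only.

```python
# (description, size in GiB, number of instances) rows from the memory table.
MEMORY_ROWS = [
    ("SFA system, OS and cache", 76, 2),
    ("SFA system, VM memory", 90, 4),
    ("EXAScaler client nodes", 64, 16),
    ("EXAScaler MDS/MGS server", 96, 1),
]


def total_gib(rows):
    """Sum size * instances over all rows."""
    return sum(size * count for _, size, count in rows)


if __name__ == "__main__":
    for name, size, count in MEMORY_ROWS:
        print(f"{name}: {size * count} GiB")
    print("Grand total:", total_gib(MEMORY_ROWS), "GiB")
```

The per-row products are 152, 360, 1024 and 96 GiB, which add up to the reported 1632 GiB.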

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |

|---|---|---|---|---|

| Memory assigned to each OSS VM within the SFA Controller | 90 | 4 | V | 360 |

| Grand Total Memory Gibibytes | | | | 360 |

Each of the EXAScaler OSS VMs has 90 GB of memory assigned for OS and caching.

SFAOS with Declustered RAID performs rapid rebuilds, spreading the rebuild process across many drives. SFAOS also supports a range of features that improve uptime for large-scale systems, including partial rebuilds, enclosure redundancy, dual active-active controllers, online upgrades and more. The SFA14KXE has built-in backup battery power support to allow destaging of cached data to persistent storage in case of a power outage. The system doesn't require further battery power after the destage process has completed. All OSS servers and the SFA14KXE are redundantly configured. All 4 servers have access to all data shared by the SFA14KXE. In the event of loss of a server, that server's data will be failed over automatically to a remaining server with continued production service. Stable writes and commit operations in EXAScaler are not acknowledged until the OSS server receives an acknowledgment of write completion from the underlying storage system (SFA14KXE).

The solution under test used an EXAScaler cluster optimized for large-file, sequential streaming workloads. The clients served as filesystem clients as well as load generators for the benchmark. The benchmark was executed from one of the server nodes. None of the components used to perform the test were patched with Spectre or Meltdown patches (CVE-2017-5754, CVE-2017-5753, CVE-2017-5715).

None

All 16 clients were used to generate workload against a single filesystem mountpoint (single namespace) accessible as a local mount on all clients. The EXAScaler server received the requests from the clients and processed the read or write operations against all connected DCR-backed VDs in the SFA14KXE.
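To give a sense of the per-client load at the reported peak, the sketch below spreads 3400 streams over 16 clients as evenly as possible. The even split is an assumption made for illustration; the report does not state how the benchmark assigned streams to individual clients, and `spread_streams` is a hypothetical helper.

```python
def spread_streams(total_streams, clients):
    """Split total_streams across clients so counts differ by at most one."""
    base, extra = divmod(total_streams, clients)
    return [base + 1 if i < extra else base for i in range(clients)]


if __name__ == "__main__":
    per_client = spread_streams(3400, 16)
    print(per_client)
    print("average per client:", 3400 / 16)
```

Under this assumption each client carries 212 or 213 streams, an average of 212.5.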

EXAScaler is a trademark of DataDirect Networks in the U.S. and/or other countries. Intel and Xeon are trademarks of the Intel Corporation in the U.S. and/or other countries. Mellanox is a registered trademark of Mellanox Ltd.

None

Generated on Wed Mar 13 16:26:24 2019 by SpecReport
