|

|

|---|

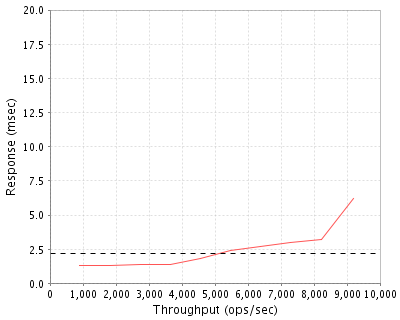

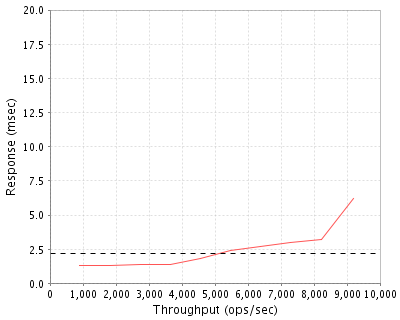

| Apple Inc. | : | Xserve (Early 2009) with Leopard Server |
|---|---|---|
| SPECsfs2008_nfs.v3 | = | 9189 Ops/Sec (Overall Response Time = 2.18 msec) |


| Tested By | Apple Inc. |
|---|---|
| Product Name | Xserve (Early 2009) with Leopard Server |
| Hardware Available | April 2009 |
| Software Available | May 2009 |
| Date Tested | May 2009 |
| SFS License Number | 77 |
| Licensee Locations | Cupertino, CA |

The Apple Xserve system is a server that provides network file service using four Promise Fibre Channel RAID storage systems.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 1 | Server | Apple | Z0GM | 2.93 GHz Nehalem Xserve with 48GB (12x4GB) of RAM |
| 2 | 1 | Disk controller | Apple | MB843G/A | 4-port 4Gbps Fibre Channel adapter, firmware 1.3.23.0 |
| 3 | 1 | Network adapter | SmallTree | PEG6 | SmallTree 6-port Gigabit Ethernet adapter |
| 4 | 4 | Disk controller | Apple | TQ811LL/A | VTrak E-class SAS Fibre Channel RAID array with dual controllers and 16 300GB 15K RPM SAS drives, firmware SR2.4.1/AEK |
| 5 | 2 | Fibre Channel Switch | Apple | TL133LL/A | QLogic SANbox 5602 Fibre Channel Switch |

| OS Name and Version | Mac OS X Server 10.5.7 (9J61) |
|---|---|
| Other Software | SmallTree PEG6 Ethernet driver (3.2.14) |
| Filesystem Software | N/A |

| Name | Value | Description |
|---|---|---|
| Spotlight indexing | disabled | Set via service com.apple.metadata.mds off |
| Increase the metadata cache maximum size | 280000 | Set via sysctl -w kern.maxvnodes=280000 |
| Increase the metadata cache buffers available | 120000 | Set via sysctl -w kern.maxnbuf=120000 |
| Number of NFS threads | 256 | Set via serveradmin settings nfs:nbdaemons=256 |

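The two sysctl tunings above raise the limits on cached vnodes (kern.maxvnodes) and metadata cache buffers (kern.maxnbuf), increasing the maximum size of the filesystem metadata cache.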
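As a compact restatement of the tuning table, the sketch below renders each documented setting as the command the report lists for it. It is illustrative only: the helper name `tuning_commands` and the constants are hypothetical, and the code merely builds strings; it does not execute anything (applying these settings would require root on Mac OS X Server 10.5).

```python
# Hypothetical helper: rebuilds the server-tuning commands listed in the
# table above as plain strings. Nothing is executed.

TUNINGS = {
    "kern.maxvnodes": 280000,  # metadata cache maximum size
    "kern.maxnbuf": 120000,    # metadata cache buffers available
}
NFS_THREADS = 256              # serveradmin nfs:nbdaemons


def tuning_commands(tunings: dict, nfs_threads: int) -> list:
    """Return the tuning commands, in table order."""
    commands = ["service com.apple.metadata.mds off"]  # disable Spotlight indexing
    commands += [f"sysctl -w {key}={value}" for key, value in tunings.items()]
    commands.append(f"serveradmin settings nfs:nbdaemons={nfs_threads}")
    return commands


if __name__ == "__main__":
    for cmd in tuning_commands(TUNINGS, NFS_THREADS):
        print(cmd)
```

Keeping the values in one dictionary makes it easy to compare a live system against the disclosed configuration before a run.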

| Description | Number of Disks | Usable Size |
|---|---|---|
| These 64 disks are divided into 32 two-disk RAID-1 groups, each exported as a single LUN. All data filesystems reside on these disks. | 64 | 8.9 TB |
| This single boot disk is for system use only. | 1 | 1000.0 GB |
| Total | 65 | 9.9 TB |

| Number of Filesystems | 32 |
|---|---|
| Total Exported Capacity | 9.1 TB |
| Filesystem Type | HFS+ Journaled |
| Filesystem Creation Options | Default |
| Filesystem Config | Each filesystem uses one LUN: a 2-drive RAID-1 group with a 64KB stripe size. |
| Fileset Size | 1065.2 GB |

Each filesystem uses one LUN and the default HFS+ Journaled configuration. The hardware RAID settings deviate from their defaults only in enabling ReadCache and WriteBack; all other settings, including the 64KB stripe size, are the defaults. The Fibre Channel RAID systems are attached to the Xserve through two SANbox 5602 Fibre Channel switches, cabled so that there are two paths to each RAID controller.
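The layout arithmetic can be checked with a short sketch. It assumes nominal decimal drive capacity (300GB = 300 × 10^9 bytes) and an even spread of the fileset across the 32 filesystems; the report states neither. The report's usable-size figure (8.9 TB) comes from its own capacity accounting, which this nominal calculation does not try to reproduce. The function name `storage_layout` is hypothetical.

```python
# Hypothetical consistency check of the storage layout described above.
# Assumes nominal decimal drive capacity and an even fileset spread.

ARRAYS = 4             # VTrak RAID systems (BOM item 4, Qty 4)
DRIVES_PER_ARRAY = 16  # 300GB 15K RPM SAS drives per array
DRIVE_GB = 300         # nominal decimal gigabytes (10**9 bytes)
DRIVES_PER_MIRROR = 2  # each RAID-1 group is a 2-disk mirror
FILESET_GB = 1065.2    # reported fileset size


def storage_layout() -> dict:
    drives = ARRAYS * DRIVES_PER_ARRAY
    luns = drives // DRIVES_PER_MIRROR  # one LUN, and one filesystem, per mirror
    usable_gb = luns * DRIVE_GB         # mirroring halves the raw capacity
    return {
        "drives": drives,
        "luns": luns,
        "usable_gb": usable_gb,
        "usable_tib": usable_gb * 10**9 / 2**40,   # same figure in binary units
        "fileset_gb_per_fs": FILESET_GB / luns,    # assumes an even spread
    }


if __name__ == "__main__":
    for name, value in storage_layout().items():
        print(f"{name}: {value:.2f}")
```

The check confirms that 64 drives yield the 32 mirrored LUNs, one per filesystem, and that each filesystem would hold roughly 33 GB of the fileset under the even-spread assumption.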

| Item No | Network Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | Gigabit Ethernet | 2 | Built-in motherboard Ethernet ports |
| 2 | Gigabit Ethernet | 1 | PCI Express Ethernet add-in card |

All network interfaces were connected to a Linksys SRW2024 switch that is zoned into three subnets with VLANs. Each server NIC and each client is on a separate subnet, so every subnet carries one server port and one client.

| Item No | Qty | Type | Description | Processing Function |
|---|---|---|---|---|
| 1 | 2 | CPU | Intel(R) Xeon(R) CPU X5570, 2.93 GHz, 2 chips, 8 cores, 4 cores/chip, 8MB L2 cache, 1.066 GHz FSB, DDR3 FB-DIMM memory | TCP/IP, NFS protocol, HFS+ filesystem |

Processing element notes: none.

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |
|---|---|---|---|---|
| Xserve main memory, 1066 MHz DDR3 FB-DIMM | 48 | 1 | 48 | V |
| Hardware RAID system main memory, with 72-hour battery backup | 2 | 4 | 8 | NV |
| Grand Total Memory Gigabytes | | | 56 | |

The mirrored write cache is backed by a battery unit that can retain its contents for 72 hours in the event of a power failure.
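The grand total in the memory table is the sum of size × instances over its rows, and the V/NV column splits it into volatile and nonvolatile parts. The sketch below makes that derivation explicit; the class `MemoryRow` and function `memory_totals` are hypothetical names, and the descriptions are shortened from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryRow:
    description: str
    size_gb: int
    instances: int
    nonvolatile: bool  # mirrors the table's V/NV column

    @property
    def total_gb(self) -> int:
        return self.size_gb * self.instances


ROWS = [
    MemoryRow("Xserve main memory", 48, 1, nonvolatile=False),
    MemoryRow("Hardware RAID system main memory (battery-backed)", 2, 4, nonvolatile=True),
]


def memory_totals(rows: list) -> tuple:
    """Return (volatile GB, nonvolatile GB, grand total GB)."""
    volatile = sum(r.total_gb for r in rows if not r.nonvolatile)
    nonvolatile = sum(r.total_gb for r in rows if r.nonvolatile)
    return volatile, nonvolatile, volatile + nonvolatile


print(memory_totals(ROWS))  # (48, 8, 56)
```

Only the 8 GB of battery-backed RAID cache counts as stable storage; the 48 GB of server RAM does not.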

NFS stable write and commit operations are not acknowledged until the RAID storage system has acknowledged that the related data has been stored at least in nonvolatile RAM. The storage array has dual storage processor units that work as an active-active failover pair. When one of the storage processors or battery units is offline, the system turns off the write cache and writes directly to disk before acknowledging any write operation.
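The acknowledgement policy described above can be modeled as a small decision rule. This is a simplified sketch, not the array firmware's logic: the names `CacheMode`, `cache_mode`, and `may_ack_stable_write` are hypothetical, and the model reduces hardware state to two booleans (all storage processors online, all battery units online).

```python
from enum import Enum


class CacheMode(Enum):
    WRITE_BACK = "write-back"        # ack once data is in battery-backed cache
    WRITE_THROUGH = "write-through"  # ack only after data is on disk


def cache_mode(all_processors_online: bool, all_batteries_online: bool) -> CacheMode:
    """The write cache stays on only while both storage processors and all
    battery units are online; otherwise the array writes through to disk."""
    if all_processors_online and all_batteries_online:
        return CacheMode.WRITE_BACK
    return CacheMode.WRITE_THROUGH


def may_ack_stable_write(mode: CacheMode, in_nvram: bool, on_disk: bool) -> bool:
    """Hypothetical rule for when the server may acknowledge an NFS stable
    WRITE or COMMIT: only after the array reports the data durable."""
    if mode is CacheMode.WRITE_BACK:
        return in_nvram or on_disk
    return on_disk


if __name__ == "__main__":
    degraded = cache_mode(all_processors_online=False, all_batteries_online=True)
    print(degraded.value, may_ack_stable_write(degraded, in_nvram=True, on_disk=False))
```

The design point the model captures is that a degraded array trades latency for safety: once redundancy in the cache path is lost, data sitting only in NVRAM is no longer enough to acknowledge a stable write.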

Default configuration, except as listed in Server Tunings.

Other system notes: none.

| Item No | Qty | Vendor | Model/Name | Description |
|---|---|---|---|---|
| 1 | 3 | Apple Inc. | Intel Xserve | Xserve with 6GB RAM and Mac OS X Server 10.5.4 |

| LG Type Name | LG1 |
|---|---|
| BOM Item # | 1 |
| Processor Name | Intel Woodcrest processor |
| Processor Speed | 3.0 GHz |
| Number of Processors (chips) | 2 |
| Number of Cores/Chip | 2 |
| Memory Size | 6 GB |
| Operating System | Mac OS X Server 10.5.4 (9E25) |
| Network Type | Built-in NIC |

| Network Attached Storage Type | NFS V3 |
|---|---|
| Number of Load Generators | 3 |
| Number of Processes per LG | 32 |
| Biod Max Read Setting | 2 |
| Biod Max Write Setting | 2 |
| Block Size | AUTO |

| LG No | LG Type | Network | Target Filesystems | Notes |
|---|---|---|---|---|
| 1..1 | LG1 | 1 | /Volumes/r1s20,/Volumes/r1s21,/Volumes/r1s22,/Volumes/r1s23,/Volumes/r1s24,/Volumes/r1s25,/Volumes/r1s26,/Volumes/r1s27,/Volumes/r1s28,/Volumes/r1s29,/Volumes/r1s2a,/Volumes/r1s2b,/Volumes/r1s2c,/Volumes/r1s2d,/Volumes/r1s2e,/Volumes/r1s2f,/Volumes/r1s2g,/Volumes/r1s2h,/Volumes/r1s2i,/Volumes/r1s2j,/Volumes/r1s2k,/Volumes/r1s2l,/Volumes/r1s2m,/Volumes/r1s2n,/Volumes/r1s2o,/Volumes/r1s2p,/Volumes/r1s2q,/Volumes/r1s2r,/Volumes/r1s2s,/Volumes/r1s2t,/Volumes/r1s2u,/Volumes/r1s2v | N/A |
| 2..2 | LG1 | 2 | /Volumes/r1s20,/Volumes/r1s21,/Volumes/r1s22,/Volumes/r1s23,/Volumes/r1s24,/Volumes/r1s25,/Volumes/r1s26,/Volumes/r1s27,/Volumes/r1s28,/Volumes/r1s29,/Volumes/r1s2a,/Volumes/r1s2b,/Volumes/r1s2c,/Volumes/r1s2d,/Volumes/r1s2e,/Volumes/r1s2f,/Volumes/r1s2g,/Volumes/r1s2h,/Volumes/r1s2i,/Volumes/r1s2j,/Volumes/r1s2k,/Volumes/r1s2l,/Volumes/r1s2m,/Volumes/r1s2n,/Volumes/r1s2o,/Volumes/r1s2p,/Volumes/r1s2q,/Volumes/r1s2r,/Volumes/r1s2s,/Volumes/r1s2t,/Volumes/r1s2u,/Volumes/r1s2v | N/A |
| 3..3 | LG1 | 3 | /Volumes/r1s20,/Volumes/r1s21,/Volumes/r1s22,/Volumes/r1s23,/Volumes/r1s24,/Volumes/r1s25,/Volumes/r1s26,/Volumes/r1s27,/Volumes/r1s28,/Volumes/r1s29,/Volumes/r1s2a,/Volumes/r1s2b,/Volumes/r1s2c,/Volumes/r1s2d,/Volumes/r1s2e,/Volumes/r1s2f,/Volumes/r1s2g,/Volumes/r1s2h,/Volumes/r1s2i,/Volumes/r1s2j,/Volumes/r1s2k,/Volumes/r1s2l,/Volumes/r1s2m,/Volumes/r1s2n,/Volumes/r1s2o,/Volumes/r1s2p,/Volumes/r1s2q,/Volumes/r1s2r,/Volumes/r1s2s,/Volumes/r1s2t,/Volumes/r1s2u,/Volumes/r1s2v | N/A |

All filesystems were mounted on all clients, which were connected to the same physical network.

Each client has the same file systems mounted from the server.
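The load-generator table follows a regular pattern: three clients, each on its own network, each running 32 processes against the same 32 mount points, whose names end in one character from 0-9 followed by a-v. The sketch below rebuilds that plan. Its process-to-filesystem mapping is an assumption (round-robin over the mount list); the benchmark's actual assignment policy is not described in this report, and `process_plan` is a hypothetical name.

```python
import string

# The 32 target filesystems share the prefix /Volumes/r1s2 and end in one
# character from 0-9 followed by a-v (read off the table above).
SUFFIXES = string.digits + string.ascii_lowercase[:22]
MOUNT_POINTS = tuple(f"/Volumes/r1s2{c}" for c in SUFFIXES)

LOAD_GENERATORS = 3  # LG1-type clients
PROCS_PER_LG = 32    # load-generating processes per client


def process_plan(lg_count: int = LOAD_GENERATORS,
                 procs_per_lg: int = PROCS_PER_LG,
                 mounts: tuple = MOUNT_POINTS) -> dict:
    """Map (client, process) -> (network, mount point).

    Assumption: client n sits on network n (per the table) and its processes
    are spread round-robin over the mount list, so 32 processes cover the
    32 filesystems once per client.
    """
    plan = {}
    for lg in range(1, lg_count + 1):
        for proc in range(procs_per_lg):
            plan[(lg, proc)] = (lg, mounts[proc % len(mounts)])
    return plan


if __name__ == "__main__":
    plan = process_plan()
    print(len(plan), "processes")
    print(plan[(2, 10)])
```

Under this assumption the 96 processes place an identical load of three processes on every filesystem, which is consistent with the note that each client mounts the same filesystems from the server.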

(No other report notes)

Generated on Mon Jun 08 15:01:25 2009 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 08-Jun-2009