SPECsfs2008_nfs.v3 Result

|

Silicon Graphics, Inc.

|

:

|

SGI InfiniteStorage NEXIS 9000

|

|

SPECsfs2008_nfs.v3

|

=

|

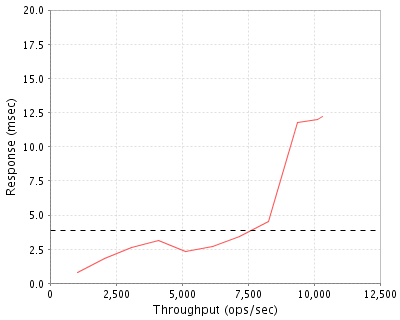

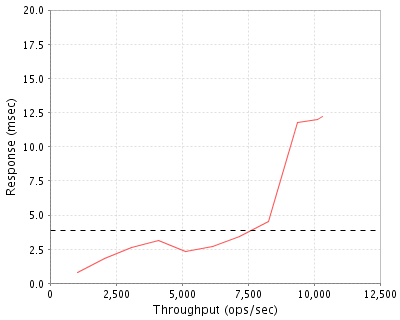

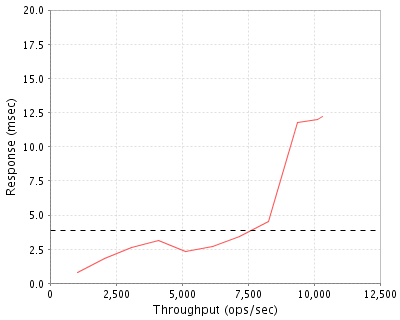

10305 Ops/Sec (Overall Response Time = 3.86 msec)

|

Performance

| Throughput (ops/sec) | Response (msec) |
|---|---|
| 1027 | 0.8 |
| 2055 | 1.8 |
| 3086 | 2.6 |
| 4093 | 3.1 |
| 5139 | 2.3 |
| 6169 | 2.7 |
| 7196 | 3.4 |
| 8259 | 4.5 |
| 9389 | 11.8 |
| 10117 | 12.0 |
| 10305 | 12.2 |
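The overall response time of 3.86 msec can be reproduced from the throughput and response figures in this section. The sketch below assumes the figure is the area under the response-time curve (trapezoids, with the curve starting at the origin) divided by the peak throughput; that method is an assumption that happens to match the reported value, not something this report states. The function name is illustrative.

```python
# (throughput in ops/sec, response time in msec) pairs from the Performance table.
POINTS = [
    (1027, 0.8), (2055, 1.8), (3086, 2.6), (4093, 3.1),
    (5139, 2.3), (6169, 2.7), (7196, 3.4), (8259, 4.5),
    (9389, 11.8), (10117, 12.0), (10305, 12.2),
]


def overall_response_time(points):
    """Trapezoidal area under the curve, starting at (0, 0), over peak throughput."""
    area = 0.0
    prev_ops, prev_msec = 0.0, 0.0  # assumed starting point of the curve
    for ops, msec in points:
        area += (ops - prev_ops) * (msec + prev_msec) / 2.0
        prev_ops, prev_msec = ops, msec
    peak_ops = points[-1][0]
    return area / peak_ops


ort = overall_response_time(POINTS)
print(f"{ort:.2f} msec")
```

Dropping the origin point from the integration gives a different value, so the starting point is part of the assumption.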

Product and Test Information

| | |
|---|---|
| Tested By | Silicon Graphics, Inc. |
| Product Name | SGI InfiniteStorage NEXIS 9000 |
| Hardware Available | April, 2008 |
| Software Available | May, 2008 |
| Date Tested | April, 2008 |
| SFS License Number | 4 |
| Licensee Locations | Mountain View, California |

The SGI® InfiniteStorage NEXIS 9000 Network-Attached Storage (NAS) appliance is designed for scientific, education, or enterprise teams that need to maximize bandwidth for demanding, scalable High Performance Computing (HPC) applications or to consolidate multiple departments on a single NAS platform. It reduces CPU overhead and supports large cache memory with an expanded cache architecture. The NEXIS 9000 delivers high throughput to applications over InfiniBand (IB), 10GbE, and/or GbE networks. A single NEXIS 9000 can scale storage capacity from 48 drives to 480 drives. NEXIS appliances support NFS v3 and v4, CIFS, FTP, TCP, SSH, and iSCSI over IB, and provide a web-based GUI for setting up, managing, and monitoring storage resources, their utilization, and their performance.

For the most demanding HPC workloads, SGI® InfiniteStorage NEXIS 9000 NFS/RDMA over InfiniBand (IB) provides a low-cost, high-performance transport architecture that reduces client CPU overhead, uses high-bandwidth IB fabrics, and maximizes throughput.

Configuration Bill of Materials

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 1 | Base Server | SGI | N9K-BASE-220-4 | NEXIS 9000 BASE HIGH PERF NAS with 4 IS220 |
| 2 | 1 | Server Upgrade | SGI | N9K-EXP-220-6 | NEXIS 9000 Expansion with 6 additional IS220 (10 total) |
| 3 | 12 | Memory | SGI | N-MEM-16GB-MC | NEXIS 16GB Mem (4 x 4GB) |
| 4 | 120 | Disk | SGI | N-220-146-I | Nexis 146GB 15k rpm SAS Drive for InfiniteStorage 220 |
| 5 | 10 | Disk & Enclosure | SGI | N-DE-220-146-I | 12 bay tray with 12 146GB 15k rpm SAS Drives and RMKit |
| 6 | 5 | IB Controller | SGI | PCIE-IB-HCA410D | InfiniBand HCA (X8 PCIe, low profile, memory free) w/ single 4X DDR IB port |
| 7 | 1 | Rack | SGI | NEXIS-RACK-TALL | InfiniteStorage NEXIS Rack for all NAS Bundles |
| 8 | 4 | Power | SGI | LSX-PDU-220-Z | Power Distribution Unit for US, CANADA, JAPAN, KOREA, TAIWAN |
| 9 | 1 | Software | SGI | SR5-NFS-RDMA-4 | NFS RDMA License |
| 10 | 1 | Software | SGI | SC5-APPMGR-4.2 | NAS software for bundles includes Appliance Manager software, ProPack 5 and SLES 10 media kits |
| 11 | 1 | Software | SGI | SR5-PP-SV1-4-3Y | SGI ProPack Server for 1-4 sockets 3 yrs upgrade protection. |
| 12 | 1 | Software | SGI | SR5-SLS-SV1-32-3Y | SMP server 1-32 sockets with 3 yr of upg protection; for IPF or x86_64 systems. |
| 13 | 10 | Software | SGI | SC5-ISSM-WE-2.70 | ISSM Workgroup Edition for SGI InfiniteStorage 220 - for Linux |

Server Software

| | |
|---|---|
| OS Name and Version | SUSE Linux Enterprise Server 10 (ia64) SP1 2.6.16.54-0.2.5-default |
| Other Software | SGI InfiniteStorage Software Platform, version 1.2.1; SGI ProPack 5SP5 for Linux; ISSM Workgroup Edition for SGI InfiniteStorage 220 - for Linux |
| Filesystem Software | XFS |

Server Tuning

| Name | Value | Description |
|---|---|---|
| /proc/irq/71/smp_affinity | 8 | Override factory IRQ binding for 2nd IB interface; bind to CPU 3 instead of CPU 5. |
| vm.dirty_expire_centisecs | 100 | Data that has been in memory for longer than this interval will be written out next time a pdflush daemon wakes up. Expressed in 100ths of a second. |

Server Tuning Notes

System defaults used except as noted. "Capacity IOPS" selected at system initialization.
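The two tuning values in this section are easier to read once decoded. On Linux, `smp_affinity` is a hexadecimal CPU bitmask, so the value 8 sets bit 3 only, which is the "bind to CPU 3" described here; the interval of 100 centiseconds is one second. The following is a minimal sketch of that arithmetic; the helper names are hypothetical and are not part of any SGI or kernel tool.

```python
def cpus_in_affinity_mask(mask_hex):
    """Return the CPU indices whose bits are set in a hexadecimal smp_affinity mask."""
    # Masks for large systems are written in comma-separated groups; drop the commas.
    mask = int(mask_hex.replace(",", ""), 16)
    return [cpu for cpu in range(mask.bit_length()) if (mask >> cpu) & 1]


def affinity_mask_for_cpu(cpu):
    """Return the hexadecimal mask string that selects a single CPU."""
    return format(1 << cpu, "x")


def centisecs_to_seconds(centisecs):
    """Convert a value expressed in 100ths of a second to seconds."""
    return centisecs / 100.0


print(cpus_in_affinity_mask("8"))   # CPUs selected by the tuned value
print(affinity_mask_for_cpu(5))     # mask that would select CPU 5, the factory binding
print(centisecs_to_seconds(100))    # vm.dirty_expire_centisecs in seconds
```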

Disks and Filesystems

| Description | Number of Disks | Usable Size |
|---|---|---|
| Mirrored boot disk for system use only | 2 | 300.0 GB |
| 24 disks per IS220 RAID unit, bound into 4 4+1 RAID5 LUNs + 4 spare, all assembled into a single file system. | 240 | 22.9 TB |
| Total | 242 | 23.1 TB |

| | |
|---|---|
| Number of Filesystems | 1 |
| Total Exported Capacity | 23404 GB |
| Filesystem Type | XFS |
| Filesystem Creation Options | Default |
| Filesystem Config | AppMan default for "Capacity IOPS" consisting of 10 stripe sets built from LUNs on 4 different controllers. The stripe sets are concatenated into an XVM logical volume. The file system uses all available capacity on the resulting logical volume. |
| Fileset Size | 1216.2 GB |
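The disk counts in this section can be cross-checked against the bill of materials. The sketch below assumes that "4+1" means four data disks plus one disk's worth of parity per RAID5 LUN, and that the 10 stripe sets divide the LUNs evenly; both are readings of the description, not statements from the report. All constant names are illustrative.

```python
RAID_UNITS = 10            # IS220 units: 4 in the base server plus 6 in the expansion
LUNS_PER_UNIT = 4          # "4 4+1 RAID5 LUNs" per unit
DATA_DISKS_PER_LUN = 4     # assumed meaning of the "4" in "4+1"
PARITY_DISKS_PER_LUN = 1   # RAID5 spreads parity; this is one disk's worth of capacity
SPARES_PER_UNIT = 4
STRIPE_SETS = 10

disks_per_unit = LUNS_PER_UNIT * (DATA_DISKS_PER_LUN + PARITY_DISKS_PER_LUN) + SPARES_PER_UNIT
total_disks = RAID_UNITS * disks_per_unit
total_luns = RAID_UNITS * LUNS_PER_UNIT
data_disks = total_luns * DATA_DISKS_PER_LUN
parity_equivalent_disks = total_luns * PARITY_DISKS_PER_LUN
spare_disks = RAID_UNITS * SPARES_PER_UNIT
luns_per_stripe_set = total_luns // STRIPE_SETS  # assumes an even split

# Bill of materials: 120 loose drives plus 10 trays of 12 drives each.
bom_disks = 120 + 10 * 12

print(disks_per_unit, total_disks, bom_disks)
print(total_luns, data_disks, parity_equivalent_disks, spare_disks)
print(luns_per_stripe_set)
```

Under these assumptions each stripe set spans four LUNs, which is consistent with the note that stripe sets are built from LUNs on 4 different controllers.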

Network Configuration

| Item No | Network Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | InfiniBand | 4 | Default |

Network Configuration Notes

Two IB ports connect to each blade enclosure in the client cluster. The top and bottom available ports were used on the integral IB Plane 1 switch in each blade enclosure.

Benchmark Network

Cluster IB plane 1 was used for NFS on IP over IB. Physical connections ran from four Nexis 9000 IB ports to the upper and lower available IB switch ports on the right side of each Altix ICE blade enclosure, four connections in total. One Nexis 9000 IB port and one IB plane 1 port on each Altix ICE blade enclosure were unused. Cluster IB plane 0 was used for interprocess communication. Gigabit Ethernet was used only for cluster administration.

Processing Elements

| Item No | Qty | Type | Description | Processing Function |
|---|---|---|---|---|
| 1 | 8 | CPU | Intel® Itanium® processor 9030, 1.6 GHz, 533 MHz FSB, 8 chips, 16 cores, 2 cores/chip, DDR2 FB-DIMM memory | TCP/IP, NFS protocol, XFS file system, XVM volume manager |

Processing Element Notes

None.

Memory

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |
|---|---|---|---|---|
| Server main memory, DDR2 FB-DIMM, PC2 3200R | 16 | 12 | 192 | V |
| HW RAID memory w/ 72 hour battery backup. | 2 | 10 | 20 | NV |
| Grand Total Memory Gigabytes | | | 212 | |

Memory Notes

The RAID unit mirrored write cache is backed up with a battery unit able to retain the write cache for 72 hours in the event of a power failure.
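The grand total in the Memory section is the sum of size times instance count over both rows, and only the battery-backed RAID memory counts as nonvolatile. A minimal sketch of that arithmetic, using the figures from this report and an illustrative tuple layout:

```python
# (description, size in GB, number of instances, nonvolatile?)
MEMORY_ROWS = [
    ("Server main memory, DDR2 FB-DIMM", 16, 12, False),
    ("HW RAID memory w/ 72 hour battery backup", 2, 10, True),
]

total_gb = sum(size * count for _, size, count, _ in MEMORY_ROWS)
nonvolatile_gb = sum(size * count for _, size, count, nv in MEMORY_ROWS if nv)
volatile_gb = total_gb - nonvolatile_gb

print(total_gb, volatile_gb, nonvolatile_gb)
```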

Stable Storage

NFS stable write and commit operations are not acknowledged until the RAID storage system has acknowledged that the related data is stored at least in mirrored non-volatile RAM. The storage array has dual storage processor units that work as an active-active failover pair. When one of the storage processors or battery units is off-line, the system turns off the write cache and writes directly to disk before acknowledging any write operations.

System Under Test Configuration Notes

Other System Notes

Test Environment Bill of Materials

| Item No | Qty | Vendor | Model/Name | Description |
|---|---|---|---|---|
| 1 | 1 | SGI | LCB-SYSTEM | Altix ICE system |
| 2 | 2 | SGI | LCB-BASE-CB-4X-2 | Altix ICE blade enclosure with two InfiniBand switches (dual plane), redundant power and fans |
| 3 | 1 | SGI | LCB-TORUS | 3D torus or hypercube topology |
| 4 | 32 | SGI | LCB-BLADE-CB | Altix ICE compute blade with 2-sockets, which support processors with a 1333MHz FSB, and 8 DIMM slots. |
| 5 | 64 | SGI | LCB-CPU-5355-CB | 2.66GHz/8M/1333MHz Quad-core Xeon Processor |
| 6 | 128 | SGI | LCB-MEM-FB-4G-CB | 4GB Fully Buffered DIMM set (2 x 2GB, 667MHz) |
| 7 | 8 | SGI | IB-CABLE-01M-Z | InfiniBand 4x Cable - 1M |
| 8 | 4 | SGI | IB-CABLE-02M-Z | InfiniBand 4x Cable - 2M |
| 9 | 1 | SGI | LCB-DVD-RW-INT | Internal DVD RW drive for Altix ICE |
| 10 | 6 | SGI | RACK-PWR-CB-1PH | Single phase PDU (US) |
| 11 | 1 | SGI | LCB-CONSOLE | Console with keyboard, video and mouse |
| 12 | 1 | SGI | LCB-LOGIN-NODE | Login node |
| 13 | 1 | SGI | LCB-SYS6015 | Altix ICE service node (1U, RPS, RAID) |
| 14 | 2 | SGI | LCB-MEM-FB-2G-SN | 2GB Fully Buffered DIMM set (2 x 1GB, 667MHz) for Altix ICE system admin controller and service nodes |
| 15 | 2 | SGI | LCB-SASDRV-73G | 73GB 15K RPM SAS HDD for Altix ICE |
| 16 | 1 | SGI | LCB-CPU-5150-SN | Additional 2.66GHz Dual-core Xeon Processor |

Load Generators

| | |
|---|---|
| LG Type Name | LG1 |
| BOM Item # | 4 |
| Processor Name | LCB-CPU-5355-CB |
| Processor Speed | 2.66 GHz |
| Number of Processors (chips) | 2 |
| Number of Cores/Chip | 4 |
| Memory Size | 16 GB |
| Operating System | SLES10 SP1 |
| Network Type | Built-in NICs, 2 each 4xDDR InfiniBand, 1 each Gigabit Ethernet |

Load Generator (LG) Configuration

Benchmark Parameters

| | |
|---|---|
| Network Attached Storage Type | NFS V3 |
| Number of Load Generators | 32 |
| Number of Processes per LG | 8 |
| Biod Max Read Setting | 2 |
| Biod Max Write Setting | 2 |
| Block Size | 1024 |

Testbed Configuration

| LG No | LG Type | Network | Target Filesystems | Notes |
|---|---|---|---|---|
| 1..4 | LG1 | IB0 | FS1 | 4 cluster nodes accessing storage on the 10.149.0.0/18 IB0 subnet. |
| 5..8 | LG1 | IB1 | FS1 | 4 cluster nodes accessing storage on the 10.149.64.0/18 IB1 subnet. |
| 9..12 | LG1 | IB2 | FS1 | 4 cluster nodes accessing storage on the 10.149.128.0/18 IB2 subnet. |
| 13..16 | LG1 | IB3 | FS1 | 4 cluster nodes accessing storage on the 10.149.192.0/18 IB3 subnet. |

Load Generator Configuration Notes

One file system mounted by all active clients. Compute nodes in blade enclosure 0 were active as load generators. Compute nodes in blade enclosure 1 were unused.
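The four IB subnets listed in the testbed configuration are exactly the four /18 blocks of a single /16. The sketch below shows that partition with Python's standard `ipaddress` module and pairs each block with its load generator range. The parent network 10.149.0.0/16 is an inference from the listed subnets and is not named in this report.

```python
import ipaddress

# Assumed parent network; the report lists only the four /18 subnets.
PARENT = ipaddress.ip_network("10.149.0.0/16")

# Load generator ranges per IB interface, as listed in the testbed configuration.
LG_RANGES = {"IB0": (1, 4), "IB1": (5, 8), "IB2": (9, 12), "IB3": (13, 16)}

subnets = list(PARENT.subnets(new_prefix=18))

layout = {}
for (interface, (first_lg, last_lg)), subnet in zip(LG_RANGES.items(), subnets):
    layout[interface] = (str(subnet), list(range(first_lg, last_lg + 1)))

for interface, (subnet, lgs) in layout.items():
    print(interface, subnet, lgs)
```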

Uniform Access Rule Compliance

Every client has the same file system mounted from the server.

Other Notes

Config Diagrams

Generated on Fri May 16 12:45:41 2008 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 21-May-2008