SPECsfs2008_nfs.v3 Result

|

NSPLab(SM) Performed Benchmarking

|

:

|

SPECsfs2008 Reference Platform (NFSv3)

|

|

SPECsfs2008_nfs.v3

|

=

|

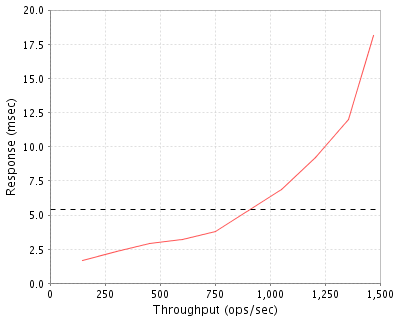

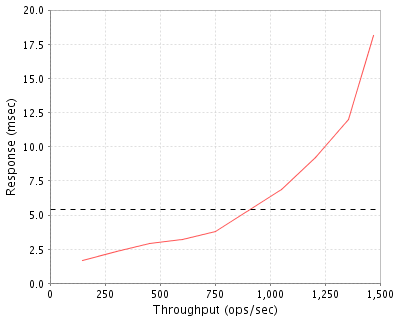

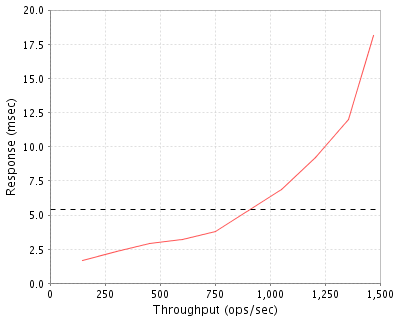

1470 Ops/Sec (Overall Response Time = 5.40 msec)

Performance

| Throughput (ops/sec) | Response (msec) |
|---|---|
| 148 | 1.7 |
| 300 | 2.3 |
| 450 | 2.9 |
| 601 | 3.2 |
| 750 | 3.8 |
| 904 | 5.3 |
| 1054 | 6.9 |
| 1207 | 9.2 |
| 1356 | 12.0 |
| 1470 | 18.1 |
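The overall response time quoted in the headline can be approximately reproduced from the throughput and response-time pairs in the Performance table. The sketch below assumes that the figure is the area under the response-time curve divided by the peak throughput, with the curve starting at the origin and integrated by the trapezoidal rule. That definition, and the names `POINTS` and `overall_response_time`, are assumptions made for illustration and are not stated in this report.

```python
# (throughput in ops/sec, response time in msec), copied from the Performance table.
POINTS = [
    (148, 1.7), (300, 2.3), (450, 2.9), (601, 3.2), (750, 3.8),
    (904, 5.3), (1054, 6.9), (1207, 9.2), (1356, 12.0), (1470, 18.1),
]


def overall_response_time(points):
    """Trapezoidal area under the curve (from the origin) divided by peak throughput.

    Assumption: the curve is anchored at (0, 0); the report does not say so.
    """
    curve = [(0.0, 0.0)] + sorted(points)
    area = 0.0
    for (x0, y0), (x1, y1) in zip(curve, curve[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    peak_throughput = curve[-1][0]
    return area / peak_throughput


if __name__ == "__main__":
    print(round(overall_response_time(POINTS), 2))
```

With the rounded values published in the table, this comes out at about 5.40 msec, which agrees with the headline figure. Small differences would be expected because the table values are themselves rounded.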

Product and Test Information

| | |
|---|---|
| Tested By | NSPLab(SM) Performed Benchmarking |
| Product Name | SPECsfs2008 Reference Platform (NFSv3) |
| Hardware Available | January 2003 |
| Software Available | November 2007 |
| Date Tested | December 2007 |
| SFS License Number | 2851 |
| Licensee Locations | El Paso, TX, USA |

The PowerEdge 1600SC Server was set up as a small workgroup file server, using AX100 and AX150 arrays to provide RAID 5 storage. The storage was directly connected to the server.

Configuration Bill of Materials

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 1 | Server | Generic | PowerEdge 1600SC | PowerEdge 1600SC server with single Xeon 2.4GHz processor, running Red Hat Enterprise Linux 5.1 software. |
| 2 | 2 | HBA | Generic | LightPulse | Generic LightPulse 4Gb/s LPe1105-n dual-channel HBA |
| 3 | 1 | Disk Controller | Generic | AX100 | This array includes 12 146GB/7200 rpm SATA drives. |
| 4 | 1 | Disk Controller | Generic | AX150 | This array includes 12 230GB/7200 rpm SATA drives. |
| 5 | 1 | Ethernet Switch | Generic | PowerConnect 2616 | 16 port Gigabit Ethernet Switch |

Server Software

| | |
|---|---|
| OS Name and Version | Linux RHEL 5.1 |
| Other Software | Linux RHEL 5.1 kernel 2.6.16 |
| Filesystem Software | EXT3 |

Server Tuning

| Name | Value | Description |
|---|---|---|
| net.core.rmem_max | 1048576 | The maximum receive socket buffer size |
| net.core.wmem_max | 1048576 | The maximum send socket buffer size |
| net.core.wmem_default | 1048576 | The default send socket buffer size |
| net.core.rmem_default | 1048576 | The default receive socket buffer size |
| vm.min_free_kbytes | 40960 | Force the VM to keep a minimum number of kilobytes free |
| vm.dirty_background_ratio | 1 | The percentage of total memory at which the pdflush background writeback daemon will start writing out dirty data. |
| vm.dirty_expire_centisecs | 1 | Data that has been in memory for longer than this interval will be written out the next time a pdflush daemon wakes up. Expressed in 100ths of a second. |
| vm.dirty_writeback_centisecs | 99 | The interval between wakeups of the pdflush daemons. Expressed in 100ths of a second. |
| vm.dirty_ratio | 10 | The percentage of total memory at which a process that is generating disk writes will start writing out dirty data. |
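The report does not say how these values were applied to the server. As a minimal sketch, assuming they were set through the usual `name = value` syntax of a sysctl configuration file such as `/etc/sysctl.conf`, the Server Tuning settings can be rendered as follows. The names `TUNINGS` and `render_sysctl_conf` are hypothetical helpers introduced only for this example.

```python
# Kernel parameters and values copied from the Server Tuning table.
TUNINGS = {
    "net.core.rmem_max": 1048576,
    "net.core.wmem_max": 1048576,
    "net.core.wmem_default": 1048576,
    "net.core.rmem_default": 1048576,
    "vm.min_free_kbytes": 40960,
    "vm.dirty_background_ratio": 1,
    "vm.dirty_expire_centisecs": 1,
    "vm.dirty_writeback_centisecs": 99,
    "vm.dirty_ratio": 10,
}


def render_sysctl_conf(tunings):
    """Return the settings as 'name = value' lines, one per parameter."""
    return "\n".join(f"{name} = {value}" for name, value in tunings.items())


if __name__ == "__main__":
    # Assumption: the output would be appended to a sysctl configuration file
    # and loaded by the administrator; the report does not describe this step.
    print(render_sysctl_conf(TUNINGS))
```

Keeping the values in one mapping makes it easy to compare a test server against the published configuration before a run.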

Server Tuning Notes

None

Disks and Filesystems

| Description | Number of Disks | Usable Size |
|---|---|---|
| AX100 array configuration includes 12 146GB disks configured as (10+1)RAID-5 bound as a single LUN on which a 1.4TB EXT3 file system is built. One disk is used as a Hot Spare. | 12 | 1.4 TB |
| AX150 array configuration includes 12 230GB disks configured as (10+1)RAID-5 bound as a single LUN on which a 2.3TB EXT3 file system is built. One disk is used as a Hot Spare. | 12 | 2.2 TB |
| This is an internal SCSI disk drive used for the Linux OS for system use. | 1 | 146.0 GB |
| Total | 25 | 3.8 TB |

| | |
|---|---|
| Number of Filesystems | 2 |
| Total Exported Capacity | 3.3 TB |
| Filesystem Type | EXT3 |
| Filesystem Creation Options | EXT3 |
| Filesystem Config | A filesystem is created on a LUN on the AX100 and another on a LUN on the AX150. The filesystems are fs1 and fs2. |
| Fileset Size | 172.0 GB |

Each of the arrays, AX100 and AX150, contained 12 disks. Eleven of them were configured as a (10+1) RAID-5 group with a 64KB RAID stripe size, and the 12th disk was configured as a hot spare.
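The (10+1) RAID-5 layout means that, in each array, the equivalent of one disk in the group holds parity and the hot spare holds no data. A minimal sketch of the resulting raw data capacity, using the disk sizes from the bill of materials, is shown below. The function name `raid5_data_capacity_gb` is hypothetical, and the calculation uses decimal gigabytes and ignores filesystem overhead, so it gives an upper bound rather than the usable sizes reported in the table.

```python
def raid5_data_capacity_gb(disks_in_group, disk_gb):
    """Raw data capacity of a RAID-5 group: one disk's worth of space holds parity."""
    return (disks_in_group - 1) * disk_gb


# Disk sizes in GB per array, from the Configuration Bill of Materials.
ARRAYS = {"AX100": 146, "AX150": 230}

if __name__ == "__main__":
    for name, disk_gb in ARRAYS.items():
        # 11 disks per group (10 data + 1 parity); the 12th disk is the hot spare.
        capacity_gb = raid5_data_capacity_gb(11, disk_gb)
        print(f"{name}: {capacity_gb} GB raw data capacity")
```

This gives 1460 GB for the AX100 and 2300 GB for the AX150, slightly above the 1.4 TB and 2.2 TB usable sizes listed for the two file systems.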

Network Configuration

| Item No | Network Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | Gigabit Ethernet | 1 | Used Gigabit Ethernet port on motherboard |

Network Configuration Notes

All network interfaces were connected to the switch.

Benchmark Network

The single on-board network interface of the PowerEdge 1600SC server was connected to the switch. The single on-board network interface of each LG was also connected to the switch.

Processing Elements

| Item No | Qty | Type | Description | Processing Function |
|---|---|---|---|---|
| 1 | 1 | CPU | Intel Xeon 2.4 GHz, 1 chip, 1 core per chip, 512KB of (I+D) L2 cache. | NFS protocol, EXT3 filesystem |
| 2 | 4 | CPU | 1.2 GHz Intel Celeron, 2 per AX100/AX150 disk array | RAID processing |

Processing Element Notes

None

Memory

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |
|---|---|---|---|---|
| NAS server main memory | 4 | 1 | 4 | V |
| Storage Processor main memory | 1 | 2 | 2 | NV |
| Grand Total Memory Gigabytes | | | 6 | |

Memory Notes

Each storage array has dual storage processor units that work as an active-active failover pair. The mirrored write cache is backed up with a battery unit capable of saving the write cache to disk in the event of a power failure. In the event of a storage array failure, the second storage processor unit is capable of saving all state that was managed by the first (and vice versa), even with a simultaneous power failure. When one of the storage processors or battery units is off-line, the system turns off the write cache and writes directly to disk before acknowledging any write operations. The battery unit can support the retention of data for 2 minutes, which is sufficient to write all necessary data twice. For example, storage processor A could have written 99% of its memory to disk and then failed. In that case, storage processor B has enough battery to store its copy of A's data as well as its own.

Stable Storage

Two NFS file systems were used. Each RAID group was bound in a single LUN on each array. Each file system was configured on one LUN: fs1 on AX150 and fs2 on AX100. Each array had 2 Fibre Channel connections to the server, although only one connection was enabled. In this configuration, NFS stable write and commit operations are not acknowledged until after the storage array has acknowledged that the related data has been stored in stable storage (i.e. NVRAM or disk).

System Under Test Configuration Notes

See the Server Tuning section above for the tuning parameters.

Other System Notes

A minimal amount of Linux tuning was performed to get the benchmark to run successfully. The goal was to measure performance as close to out of the box (default tunings) as possible.

Test Environment Bill of Materials

| Item No | Qty | Vendor | Model/Name | Description |
|---|---|---|---|---|
| 1 | 4 | Generic | 430SC | Workstation with single Dual Core Xeon 2.8 GHz with 1 GB RAM |

Load Generators

| | |
|---|---|
| LG Type Name | LG1-4 |
| BOM Item # | 1 |
| Processor Name | Xeon Dual Core |
| Processor Speed | 2.8 GHz |
| Number of Processors (chips) | 1 |
| Number of Cores/Chip | 2 |
| Memory Size | 1 GB |
| Operating System | Linux RHES 4.2 |
| Network Type | BCM95751 |

Load Generator (LG) Configuration

Benchmark Parameters

| | |
|---|---|
| Network Attached Storage Type | NFS V3 |
| Number of Load Generators | 4 |
| Number of Processes per LG | 8 |
| Biod Max Read Setting | 2 |
| Biod Max Write Setting | 2 |
| Block Size | AUTO |
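The benchmark parameters imply a fixed number of load-generating processes across the testbed. The short sketch below works out that total and, assuming the load is spread evenly across processes (the report does not state this), the approximate request rate each process sustains at the peak throughput of 1470 ops/sec. The constant and function names are hypothetical and are used only for this illustration.

```python
NUM_LOAD_GENERATORS = 4   # "Number of Load Generators"
PROCESSES_PER_LG = 8      # "Number of Processes per LG"
PEAK_OPS_PER_SEC = 1470   # peak throughput from the Performance table


def total_processes(load_generators, processes_per_lg):
    """Total number of load-generating processes in the testbed."""
    return load_generators * processes_per_lg


def ops_per_process(peak_ops, processes):
    """Per-process rate, assuming an even split of the load (an assumption)."""
    return peak_ops / processes


if __name__ == "__main__":
    procs = total_processes(NUM_LOAD_GENERATORS, PROCESSES_PER_LG)
    rate = ops_per_process(PEAK_OPS_PER_SEC, procs)
    print(f"{procs} processes, about {rate:.1f} ops/sec each at peak")
```

With 4 load generators running 8 processes each, this gives 32 processes and roughly 45.9 ops/sec per process at the peak load point.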

Testbed Configuration

| LG No | LG Type | Network | Target Filesystems | Notes |
|---|---|---|---|---|
| 1..4 | LG1 | 1 | /fs1,/fs2 | N/A |

Load Generator Configuration Notes

Both filesystems were mounted on all clients, which were connected to the same physical and logical network.

Uniform Access Rule Compliance

Each client has both file systems mounted, one from each of the 2 storage arrays: AX150 and AX100.

Other Notes

Config Diagrams

Generated on Tue Mar 18 23:24:56 2008 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 18-Mar-2008